Prompt Engineering

-

What is Context Window in AI and LLMs? [Non-Technical Explainer + FAQs Solved]

![What is Context Window in AI and LLMs? [Non-Technical Explainer + FAQs Solved]](https://appliedai.tools/wp-content/uploads/2025/12/image-1-e1764926978383.png)

The context window sets the limit on how much information an AI model can process at once, which affects its problem-solving ability and shapes the economics of the AI industry.

-

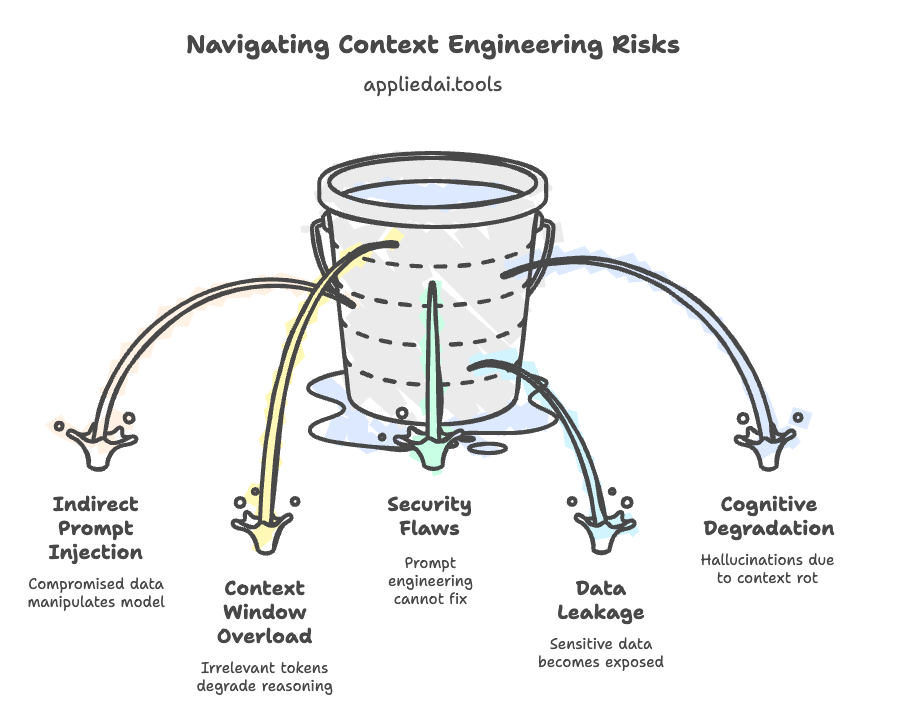

8 Context Engineering Risks with Mitigation Strategies Explained

The rise of context engineering creates critical infrastructure challenges for AI agents. It exposes them to new security risks such as indirect prompt injection, data leakage, and cognitive degradation, each of which calls for a tailored mitigation strategy.

-

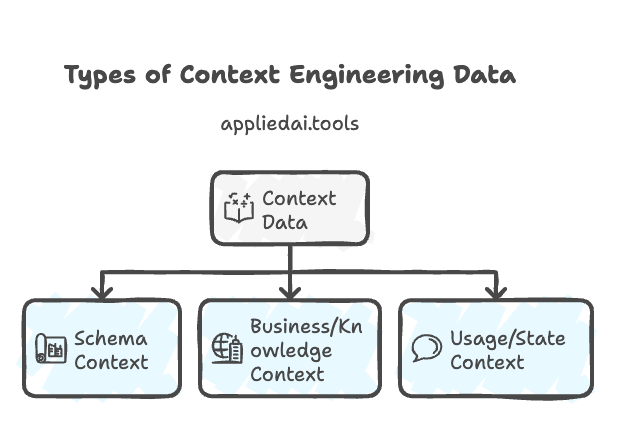

Types of Context Engineering With Examples Explained

The transition from prompt to context engineering in AI aims to improve interaction by optimizing information environments for LLMs. This shift emphasizes strategies like writing, selecting, compressing, and isolating data effectively.

-

Learn Context Engineering: Resource List (Lectures, Blogs, Tutorials)

Context engineering is vital for developing effective AI agents. This post compiles resources ranging from beginner to advanced levels, covering various aspects of context management. By following structured learning paths, practitioners evolve from prompt writers to context architects, enhancing AI system capabilities.

-

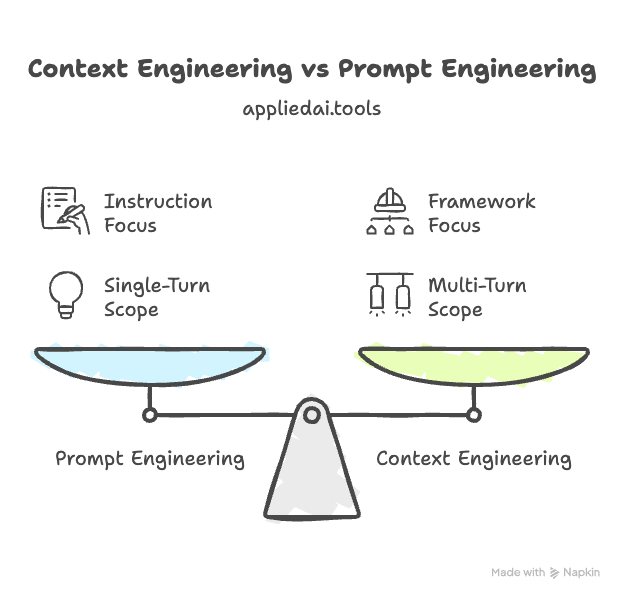

What is Context Engineering? – Learn Approaches by OpenAI, Anthropic, LangChain

Context engineering is crucial for developing reliable AI agents because it manages their entire information pipeline, not just the prompt. It encompasses instructions, memory, knowledge retrieval, and tools, organized into four pillars. Managing context well prevents performance degradation and keeps sophisticated AI assistants effective at completing tasks.

-

Make the Most of Claude File Creation Feature

Claude’s new file creation feature lets users create Excel, Word, PowerPoint, and PDF files directly through conversation. This shifts AI from a simple advisor to an active collaborator that can complete complex tasks efficiently. Users describe what they need and receive professional-quality output, improving workplace efficiency.

-

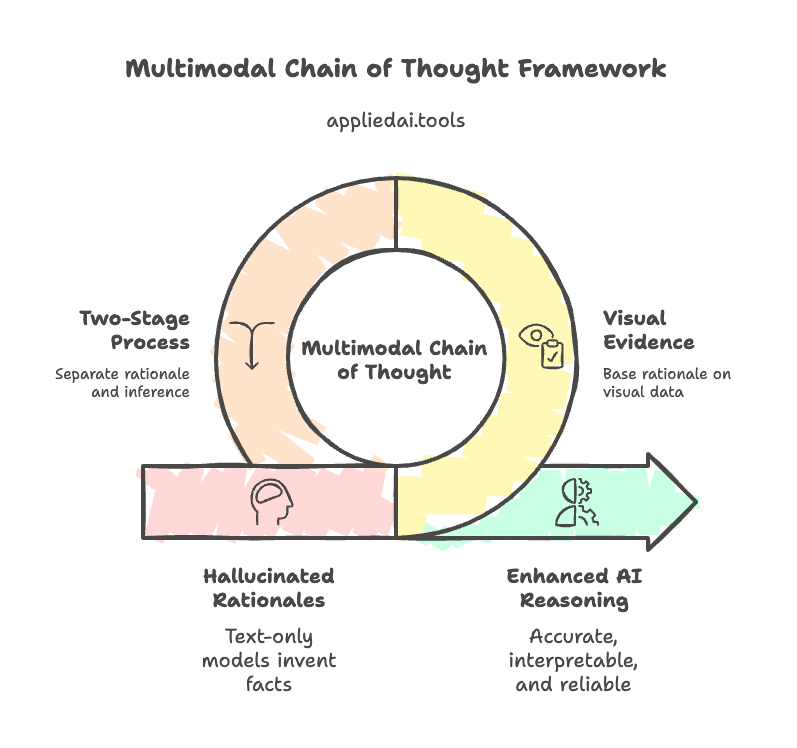

What is Multimodal Chain of Thought (CoT) Prompting? – Examples and FAQs Solved

Learn about the evolution of artificial intelligence from basic text-only models to advanced Multimodal Large Language Models (MLLMs) capable of complex reasoning through a technique known as Multimodal Chain of Thought reasoning. Explore the steps and benefits of this approach, with an emphasis on accurate interpretation across diverse data types.

-

Microsoft AI-Safe Jobs Study Explained: Use Insights to AI-Proof Career in 2025

Microsoft’s research on Generative AI and jobs, based on 200,000 conversations, identifies a labor market split. Knowledge-based roles face high risks from AI, while jobs requiring physical skills and empathy remain secure. The study emphasizes AI as a tool for augmentation, urging professionals to adapt and master AI for career resilience.

-

What Is Prompt Chaining? – Examples And Tutorials

Prompt chaining is a technique in generative AI that breaks down complex tasks into manageable steps. By linking smaller prompts sequentially, it enhances AI’s accuracy and reliability. This method allows better task control, clearer processes, and easier debugging, making it ideal for addressing intricate projects effectively.
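
To make the idea of linking prompts concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not the method from the linked post: `run_chain`, the `{input}` placeholder, and `fake_model` are all hypothetical names, and `fake_model` is a stand-in so the example runs without any AI service.

```python
from typing import Callable, List


def run_chain(task: str, steps: List[str], ask: Callable[[str], str]) -> str:
    """Run prompts in sequence, feeding each answer into the next prompt."""
    result = task
    for template in steps:
        # Each template has an {input} slot that receives the previous output.
        result = ask(template.format(input=result))
    return result


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; it just wraps the prompt."""
    return f"<{prompt}>"


steps = ["Outline: {input}", "Draft: {input}", "Polish: {input}"]
print(run_chain("blog post on context windows", steps, fake_model))
```

Because every step is a separate call, you can print or log each intermediate result, which is where the easier debugging comes from.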

-

What Is Self-Consistency Prompting? – Examples With Prompt Optimization Process

Self-consistency prompting involves asking an AI the same question multiple times to improve the accuracy and reliability of its answers. The model generates several responses, and the most frequently occurring answer is selected, much like gathering multiple opinions. Combining this approach with Chain-of-Thought prompting improves reasoning and yields more correct responses on complex tasks.
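
The majority vote described above can be sketched in a few lines of Python. This is a simplified illustration, not code from the linked post: `self_consistent_answer` and `fake_sample` are hypothetical names, and `fake_sample` replays canned answers in place of a real model that would be sampled several times.

```python
import itertools
from collections import Counter
from typing import Callable


def self_consistent_answer(question: str, sample: Callable[[str], str], n: int = 5) -> str:
    """Ask the same question n times and return the most common answer."""
    answers = [sample(question).strip() for _ in range(n)]
    # most_common(1) returns the top (answer, count) pair; ties go to the
    # answer that appeared first.
    return Counter(answers).most_common(1)[0][0]


# Hypothetical stand-in for a model: replays a fixed set of answers.
_canned = itertools.cycle(["42", "41", "42", "42", "40"])


def fake_sample(question: str) -> str:
    return next(_canned)


print(self_consistent_answer("What is 6 * 7?", fake_sample))
```

When combined with Chain-of-Thought prompting, each response would also contain reasoning steps, so in practice you would extract only the final answer from each response before counting votes.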