Imagine asking an AI to act as a home repair assistant. You show it a photo of a leaking pipe under your sink, a short video of the dripping sound, and the text from the pipe’s user manual.

A text-only AI can read the manual, but it cannot see the photo or hear the dripping.

A basic multimodal AI might state the obvious: “There is a pipe and water.”

But an advanced AI, using Multimodal Chain of Thought, would reason through the problem:

“Based on the image, the leak is at the P-trap joint. The rhythmic dripping in the video suggests a steady leak, not a catastrophic burst. The manual specifies a 1.5-inch slip nut wrench is needed for this joint. The first step is to turn off the main water supply before attempting to tighten the nut.”

This illustrates the monumental leap from simple perception to complex, actionable reasoning.

The evolution of artificial intelligence from text-only Large Language Models (LLMs) to Multimodal Large Language Models (MLLMs) is a necessary one: it allows AI to align with the “inherently multimodal nature of real-world environments.” This change is not merely about adding new features. It signifies a fundamental shift from basic perception toward the kind of “sophisticated cognitive reasoning” that characterizes human intelligence.

At the heart of this transformation is a powerful technique known as Multimodal Chain of Thought (MCoT).

This guide deconstructs Multimodal Chain of Thought and shows how to use it to write optimized prompts. It covers the foundations of the technique, its core mechanics, and practical prompting frameworks.

First, what is chain of thought reasoning?

To grasp the significance of MCoT, one must first understand its foundation: the original, text-based Chain of Thought.


Chain of Thought (CoT) is a prompt engineering technique that guides an LLM to break down complex problems into intermediate, human-like reasoning steps rather than jumping to conclusions. This shift enhances the model’s transparency and improves its performance on intricate tasks requiring logic, math, or commonsense reasoning.

There are two primary flavors of CoT:

1. Zero-shot chain of thought

This method’s power lies in its simplicity. By appending a simple phrase like “Let’s think step by step” to a prompt, the model is coaxed into generating a rationale before its final answer. This happens without needing any prior examples.

Consider this classic problem:

- Standard Prompt:

I went to the market and bought 10 apples. I gave 2 apples to the neighbor and 2 to the repairman. I then went and bought 5 more apples and ate 1. How many apples did I remain with?

Typical (Incorrect) Output: 11 apples.

- Zero-Shot CoT Prompt:

I went to the market and bought 10 apples. I gave 2 apples to the neighbor and 2 to the repairman. I then went and bought 5 more apples and ate 1. How many apples did I remain with? Let's think step by step.

CoT Output: First, you started with 10 apples. You gave away 2 apples to the neighbor and 2 to the repairman, so you had 6 apples left. Then you bought 5 more apples, so now you had 11 apples. Finally, you ate 1 apple, so you would remain with 10 apples.
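Mechanically, zero-shot CoT is just string construction. The minimal Python sketch below appends the trigger phrase to any question; `zero_shot_cot` and `COT_TRIGGER` are hypothetical names, and sending the prompt to a model is left out because that depends on which provider you use. It also spells out the arithmetic the model is expected to reproduce.

```python
# Trigger phrase from the zero-shot CoT example above.
COT_TRIGGER = "Let's think step by step."


def zero_shot_cot(question: str, trigger: str = COT_TRIGGER) -> str:
    """Return the question with a chain-of-thought trigger appended (no exemplars needed)."""
    return f"{question.rstrip()} {trigger}"


question = (
    "I went to the market and bought 10 apples. I gave 2 apples to the neighbor "
    "and 2 to the repairman. I then went and bought 5 more apples and ate 1. "
    "How many apples did I remain with?"
)
prompt = zero_shot_cot(question)
print(prompt)

# The step-by-step arithmetic a correct rationale should follow.
expected = 10 - 2 - 2 + 5 - 1
print(expected)  # 10
```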

2. Few-shot chain of thought

Few-shot CoT is a more structured approach. The prompt includes one or more examples, known as exemplars. These exemplars explicitly show the desired step-by-step reasoning process. By seeing a template for the thought process, the model learns to replicate it for the new query.

- Few-Shot CoT Prompt:

The odd numbers in this group add up to an even number: 4, 8, 9, 15, 12, 2, 1.
A: Adding all the odd numbers (9, 15, 1) gives 25. The answer is False.

The odd numbers in this group add up to an even number: 15, 32, 5, 13, 82, 7, 1.
A:

CoT Output: Adding all the odd numbers (15, 5, 13, 7, 1) gives 41. The answer is False.
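A few-shot CoT prompt is a list of worked exemplars followed by an open query. As a minimal sketch (the helper names are hypothetical), the exemplar's rationale can even be generated programmatically, which guarantees the worked reasoning you show the model is correct; the same function reproduces both answers quoted above.

```python
from typing import List, Sequence

PREFIX = "The odd numbers in this group add up to an even number:"


def odd_sum_rationale(nums: Sequence[int]) -> str:
    """Write the step-by-step answer in the same style as the exemplar above."""
    odds = [n for n in nums if n % 2 == 1]
    total = sum(odds)
    verdict = "True" if total % 2 == 0 else "False"
    return (f"Adding all the odd numbers ({', '.join(map(str, odds))}) "
            f"gives {total}. The answer is {verdict}.")


def question(nums: Sequence[int]) -> str:
    return f"{PREFIX} {', '.join(map(str, nums))}."


def few_shot_cot(exemplars: List[Sequence[int]], query: Sequence[int]) -> str:
    """Each exemplar becomes a worked Q/A pair; the query ends with an open 'A:'."""
    blocks = [f"{question(ex)}\nA: {odd_sum_rationale(ex)}" for ex in exemplars]
    blocks.append(f"{question(query)}\nA:")
    return "\n\n".join(blocks)


prompt = few_shot_cot([[4, 8, 9, 15, 12, 2, 1]], [15, 32, 5, 13, 82, 7, 1])
print(prompt)
```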

What is the difference between Chain of Thought and Prompt Chaining?

Chain of Thought elicits a detailed reasoning process within a single prompt to solve one complex task. It is a vertical strategy, designed to increase the depth, logical coherence, and reliability of a single answer.

The model mimics a slower, more deliberate human thought process by allocating more computational steps to a problem. This reduces the chance of the model taking erroneous statistical shortcuts. It is best used for tasks prone to error from hasty conclusions. Examples include math problems, logic puzzles, and causal inference.

Prompt Chaining breaks a large task into a sequence of multiple, separate prompts. Here, the output of one prompt becomes the input for the next. It is a horizontal strategy for managing workflow complexity, not necessarily for improving the core reasoning of any single step. It is a form of project management for the AI.


An analogy clarifies the difference:

CoT is like asking a detective to write a report that should detail their entire investigation. It covers everything from analyzing the evidence to forming a conclusion.

Prompt chaining is like giving the detective a series of discrete tasks:

- “Summarize all witness statements,” then

- “Based on that summary, list the primary suspects,” and finally

- “Based on the suspect list, write an arrest warrant for the most likely culprit.”
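The detective chain can be expressed as a small loop in which each reply is substituted into the next prompt. This is a minimal sketch: `run_chain` is a hypothetical helper, and `LLM` stands for whatever function wraps your model client (an assumption, not a specific API). A stand-in model is used so the example runs offline.

```python
from typing import Callable, List

# Any function that takes a prompt string and returns the model's reply (assumed interface).
LLM = Callable[[str], str]


def run_chain(llm: LLM, templates: List[str], initial_input: str) -> List[str]:
    """Run prompts in sequence, feeding each output into the next prompt's {input} slot."""
    outputs: List[str] = []
    current = initial_input
    for template in templates:
        current = llm(template.format(input=current))
        outputs.append(current)
    return outputs


detective_chain = [
    "Summarize all witness statements:\n{input}",
    "Based on that summary, list the primary suspects:\n{input}",
    "Based on the suspect list, write an arrest warrant for the most likely culprit:\n{input}",
]

if __name__ == "__main__":
    # Stand-in model that only reports the prompt length, so the chain runs without an API.
    fake_llm: LLM = lambda prompt: f"<reply to {len(prompt)}-char prompt>"
    for step, out in enumerate(run_chain(fake_llm, detective_chain, "statement A; statement B"), 1):
        print(step, out)
```

Each call sees only its own prompt, which is what makes this horizontal: the reasoning inside any single step is untouched.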

Why adopt a multimodal approach to chain of thought reasoning?

The world is not made of text alone. True intelligence requires processing a rich tapestry of information.

Multimodal AI systems are designed to process and integrate multiple data types concurrently. These types, or modalities, include text, images, audio, and video.

The core mechanism involves several steps. First, specialized unimodal encoders process each data type. For example, a Convolutional Neural Network (CNN) might encode images and a Transformer might encode text, converting each into numerical representations called embeddings. A fusion module then combines these disparate representations into a unified understanding. The strategy for this combination is critical:

- Early Fusion: Combines raw data or low-level features at the very beginning of the process.

- Late Fusion: Processes each modality independently and only merges the final predictions or decisions at the end.

- Intermediate Fusion: A hybrid approach where modality-specific features are processed separately first. Then, they are merged in the middle layers of the model.
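The three strategies differ only in where the merge happens. The toy Python sketch below makes that concrete with plain lists standing in for embeddings and simple functions standing in for neural networks; all names are hypothetical, and averaging in `late_fusion` is just one of several ways to combine per-modality predictions.

```python
from typing import Callable, List, Sequence

Vector = List[float]
Head = Callable[[Sequence[float]], float]      # features -> prediction score
Encoder = Callable[[Sequence[float]], Vector]  # features -> hidden representation


def concat(*parts: Sequence[float]) -> Vector:
    """Join several feature vectors end to end."""
    out: Vector = []
    for part in parts:
        out.extend(part)
    return out


def early_fusion(image: Sequence[float], text: Sequence[float], joint_head: Head) -> float:
    # Merge low-level features first; a single model sees everything from the start.
    return joint_head(concat(image, text))


def late_fusion(image: Sequence[float], text: Sequence[float],
                image_head: Head, text_head: Head) -> float:
    # Each modality is processed alone; only the final predictions are merged (averaged here).
    return (image_head(image) + text_head(text)) / 2


def intermediate_fusion(image: Sequence[float], text: Sequence[float],
                        image_enc: Encoder, text_enc: Encoder, joint_head: Head) -> float:
    # Modality-specific encoders run first; their hidden features are merged mid-pipeline.
    return joint_head(concat(image_enc(image), text_enc(text)))


img, txt = [0.2, 0.4], [1.0]
mean: Head = lambda v: sum(v) / len(v)
double: Encoder = lambda v: [2 * x for x in v]
print(early_fusion(img, txt, mean))
print(late_fusion(img, txt, mean, mean))
print(intermediate_fusion(img, txt, double, double, mean))
```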

This ability to fuse data is the technical underpinning that lets an AI look at a chart while reading its caption, or listen to a financial analyst’s commentary, to form a holistic understanding of a company’s performance.

What is Multimodal Chain of Thought (MCoT) reasoning?

Multimodal Chain of Thought is the extension of CoT reasoning to multimodal contexts. It enables multimodal LLMs to break down problems involving diverse data types (images, video, audio, text, and more) into a series of intermediate reasoning steps.

Crucially, this is not just CoT with an image attached.

Multimodal CoT reasoning is a process where the reasoning steps themselves are derived from and informed by the rich information contained within the multimodal inputs.

The model is prompted to “think out loud” about how the different pieces of evidence from various modalities fit together to solve the problem.

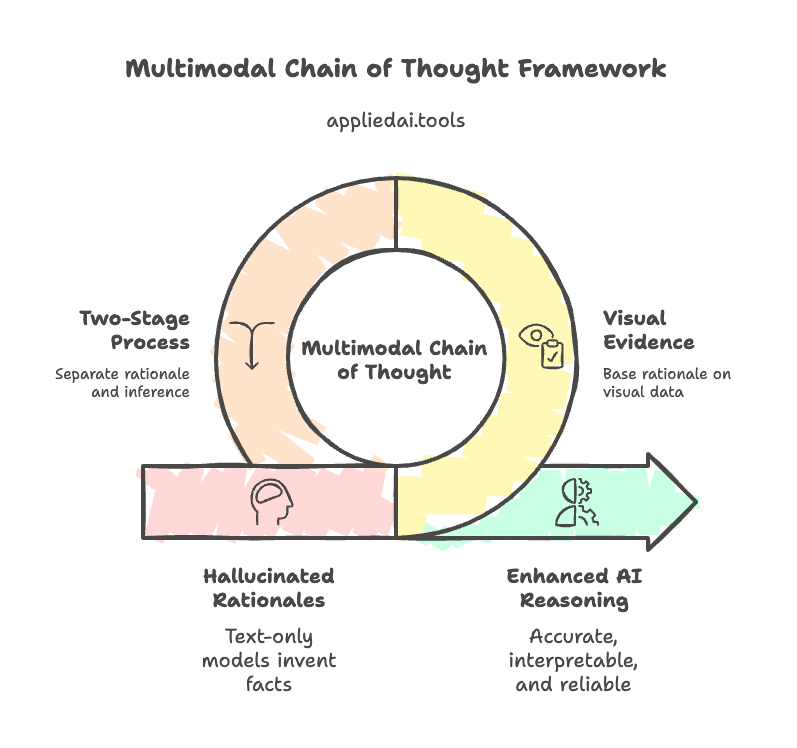

The two-stage framework of multimodal CoT

Seminal research in the field (Zhang et al., “Multimodal Chain-of-Thought Reasoning in Language Models”) proposed a foundational architecture for MCoT that remains highly influential. It is a two-stage framework that separates the reasoning process from the final answer generation.

This structure is an engineering solution aimed at addressing a key weakness in LLMs: the disconnect between perception and reasoning. Standard models combine these processes, leading to confusion and errors. The two-stage framework explicitly decouples them.

Stage 1: Rationale Generation

The MLLM is given the full multimodal input (e.g., an image of a science diagram and a text-based question). Its first task is to generate a detailed rationale that explicitly synthesizes information from all modalities. This is a perception-to-text task: “Look at all the evidence and describe a logical plan.”

For example, given a circuit diagram and a question about total resistance, the model might generate: “The image shows a circuit with three resistors, R1, R2, and R3, connected in series. The question asks for the total resistance. Thus, the first step is to add the values of the three resistors shown in the diagram.”

Stage 2: Answer Inference

The original multimodal input is then merged with the newly generated rationale. This full package is fed back into the model to infer the final answer. This second stage is primarily a text-based reasoning task. It is now heavily grounded by the evidence-based plan from Stage 1: “Given this trusted plan, execute it to find the answer.” The rationale acts as a powerful, context-rich guide that scaffolds the final step.
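The two stages can be sketched as two calls to the same model interface. This is a prompting-level sketch of the split, not the training setup used in the research: `MultimodalInput`, `Model`, and the instruction wording are hypothetical, and `Model` is whatever function wraps your MLLM client. The key detail is that Stage 2 receives the original images again together with the rationale.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class MultimodalInput:
    question: str
    images: List[str] = field(default_factory=list)  # hypothetical: paths or IDs of attached images


# Hypothetical model interface: (input, instruction) -> generated text.
Model = Callable[[MultimodalInput, str], str]

RATIONALE_INSTRUCTION = (
    "Describe what the attached images show that is relevant to the question, "
    "then lay out a step-by-step plan. Do not give the final answer yet."
)
ANSWER_INSTRUCTION = "Using the rationale above, give the final answer."


def generate_rationale(model: Model, inp: MultimodalInput) -> str:
    """Stage 1: perception-to-text. The model writes a grounded plan, not the answer."""
    return model(inp, RATIONALE_INSTRUCTION)


def infer_answer(model: Model, inp: MultimodalInput, rationale: str) -> str:
    """Stage 2: the original input plus the Stage 1 rationale go back to the model."""
    augmented = MultimodalInput(
        question=f"{inp.question}\n\nRationale:\n{rationale}",
        images=list(inp.images),  # the original images are re-sent, not dropped
    )
    return model(augmented, ANSWER_INSTRUCTION)


def two_stage_mcot(model: Model, inp: MultimodalInput) -> Tuple[str, str]:
    rationale = generate_rationale(model, inp)
    return rationale, infer_answer(model, inp, rationale)


def fake_model(inp: MultimodalInput, instruction: str) -> str:
    # Offline stand-in: canned rationale in Stage 1, echoes the last rationale line in Stage 2.
    if instruction == RATIONALE_INSTRUCTION:
        return "The diagram shows R1, R2 and R3 in series, so add their values."
    return "Answer derived from: " + inp.question.splitlines()[-1]


rationale, answer = two_stage_mcot(
    fake_model, MultimodalInput("What is the total resistance?", ["circuit.png"]))
print(rationale)
print(answer)
```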

Key benefits of multimodal chain of thought’s two-stage framework

This separation of rationale and inference provides several profound advantages that have catalyzed transformative progress in AI reasoning.

Mitigating hallucination:

This is arguably the most critical benefit. When smaller text-only models (under 100B parameters) attempt CoT, they often generate “hallucinated rationales”: they invent facts or misinterpret the problem because they lack grounding in external evidence.

By forcing the rationale generation to be based on visual or other modal evidence, MCoT significantly reduces these errors. The model must reason about what it sees, not just what its language-trained parameters predict is likely to come next.

Research has shown this approach can correct over 60% of hallucination mistakes.

Enhanced accuracy and convergence:

The two-stage process also improves performance. On the ScienceQA benchmark, models with under 1 billion parameters using MCoT have been shown to outperform the much larger GPT-3.5 model by over 16 percentage points. This structured approach also helps models converge to higher accuracy faster during training.

Improved interpretability:

The explicit, multimodal rationale provides a clear window into the model’s “thinking” process. It allows developers and users to see how the model is connecting different pieces of evidence, making it far easier to debug, trust, and verify the outputs.

The 5 pillars of systematic problem-solving using multimodal chain of thought prompting

Understanding the theory of MCoT is one thing; applying it effectively is another. This requires a systematic approach to prompt engineering. It goes beyond simple questions and treats the interaction as a structured investigation.

An effective MCoT prompt can be constructed around five core pillars. This framework transforms a simple query into a comprehensive analytical process.

Pillar 1: Gather data

The prompt’s first job is to give the MLLM all the necessary data. The quality of the MCoT output depends heavily on the quality and completeness of the input evidence. This includes text (e.g., user reviews, error logs), images (e.g., product photos, architecture diagrams, screenshots), structured data (e.g., spreadsheets, performance metrics), and any other relevant modality.

Pillar 2: Analyze each modality systematically

Instead of just providing the data, the prompt should structure the analysis. Instruct the AI to examine each piece of evidence individually before attempting to connect them.

Example Prompt Snippet: “First, analyze the attached product image. Describe its key visual features and design elements. Second, analyze the provided user reviews. Summarize the main points of positive and negative feedback. Third, analyze the sales data chart. What are the key trends and inflection points?”

Pillar 3: Synthesize connections

This is the crucial step where true reasoning begins. The prompt must explicitly ask the model to find the relationships, correlations, and contradictions between the individual analyses.

Example Prompt Snippet: “Now, synthesize your findings. How do the visual features you identified in the image relate to the specific complaints mentioned in the user reviews? Does the trend in the sales data chart correlate with the timing of these reviews or any product updates?”

Pillar 4: Build the explicit reasoning chain

After synthesis, instruct the model to construct a step-by-step argument that leads to a conclusion. Each step in this chain must reference evidence from the earlier analyses, making the logic transparent and traceable.

Example Prompt Snippet: “Based on this synthesis, construct a step-by-step chain of thought to find the primary reason for the recent decline in sales. Each step in your reasoning must be supported by specific evidence from the image, reviews, or data.”

Pillar 5: Confirm and conclude

The final part of the prompt should ask for a clear conclusion and a self-validation check. This encourages the model to review its own logic for flaws.

Example Prompt Snippet: “Finally, provide a conclusive recommendation for how to improve the product. Before you state your recommendation, check your reasoning for any logical gaps or unsupported leaps. Does your recommendation directly address the root cause identified in your reasoning chain?”
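The five pillars can be turned into a reusable template. The sketch below is one possible way to assemble them in Python; `Evidence` and `five_pillar_prompt` are hypothetical names, the pillar wording paraphrases the snippets above, and the actual files (images, charts) would be attached through your MLLM’s own interface.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Evidence:
    modality: str     # e.g. "image", "user reviews", "sales chart"
    description: str  # how the item is referred to in the prompt (the file is attached separately)


def five_pillar_prompt(goal: str, evidence: List[Evidence]) -> str:
    """Assemble an MCoT prompt that walks through the five pillars in order."""
    lines = [f"Goal: {goal}", "", "Pillar 1 - Evidence provided:"]
    lines += [f"- [{e.modality}] {e.description}" for e in evidence]

    lines += ["", "Pillar 2 - Analyze each item on its own, in this order:"]
    lines += [f"{i}. Analyze the {e.modality}: {e.description}. Summarize the key points."
              for i, e in enumerate(evidence, 1)]

    lines += [
        "",
        "Pillar 3 - Synthesize: how do your individual findings relate? "
        "Note correlations and contradictions between modalities.",
        "",
        "Pillar 4 - Build a step-by-step reasoning chain toward the goal. "
        "Every step must cite specific evidence from the items above.",
        "",
        "Pillar 5 - Before concluding, check the chain for logical gaps or unsupported leaps, "
        "then state your conclusion and confirm it addresses the root cause.",
    ]
    return "\n".join(lines)


prompt = five_pillar_prompt(
    "Find the primary reason for the recent decline in sales.",
    [Evidence("image", "the attached product photo"),
     Evidence("user reviews", "the pasted customer reviews"),
     Evidence("sales chart", "the attached monthly sales chart")],
)
print(prompt)
```

Keeping the evidence list as data means the same template can be reused across problems, which is what the “build a simple framework” step later in this guide calls for.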

Multimodal chain of thought prompting templates and best practices

To put this into practice, here are several techniques to enhance the quality of MCoT outputs:

Role-playing

Start the prompt with a role assignment. For instance, “You are an expert product analyst. Your task is to conduct a root cause analysis of a product’s declining sales using the provided multimodal data.” This primes the model to adopt the specific persona and reasoning patterns required for the task.

Structured output

Demand a formatted response. Ask the model to structure its output using clear headings that mirror the five pillars (e.g., “1. Evidence Analysis,” “2. Synthesis of Connections,” “3. Reasoning Chain,” etc.). This not only improves readability for the user but also forces the model to adhere to the structured thinking process.

The “Devil’s Advocate” technique

For high-stakes decisions where robustness is paramount, add a final instruction to challenge the conclusion. “Now, argue against your own conclusion. What is the strongest counter-argument or alternative explanation for the evidence presented? What assumptions have you made that could be incorrect?” This advanced technique forces a more thorough and balanced analysis.
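The three techniques compose naturally around any task prompt. This minimal sketch uses hypothetical helper names; the first three headings come from this guide, while “4. Conclusion” is our own assumption. `missing_headings` is a simple check that the model actually followed the requested structure, so you can re-prompt when it did not.

```python
from typing import List, Sequence

# The first three headings come from the guide; "4. Conclusion" is an assumption.
DEFAULT_HEADINGS = ("1. Evidence Analysis", "2. Synthesis of Connections",
                    "3. Reasoning Chain", "4. Conclusion")

DEVILS_ADVOCATE = (
    "Now, argue against your own conclusion. What is the strongest counter-argument "
    "or alternative explanation for the evidence presented? What assumptions have you "
    "made that could be incorrect?"
)


def wrap_prompt(role: str, task: str, headings: Sequence[str] = DEFAULT_HEADINGS,
                devils_advocate: bool = False) -> str:
    """Add a role line, a required output structure and, optionally, a self-challenge step."""
    parts = [f"You are {role}.", task, "",
             "Structure your answer using exactly these headings:"]
    parts.extend(headings)
    if devils_advocate:
        parts.extend(["", DEVILS_ADVOCATE])
    return "\n".join(parts)


def missing_headings(response: str, headings: Sequence[str] = DEFAULT_HEADINGS) -> List[str]:
    """Return the required headings the response left out (empty list means compliant)."""
    return [h for h in headings if h not in response]


p = wrap_prompt(
    "an expert product analyst",
    "Your task is to conduct a root cause analysis of a product's declining sales "
    "using the provided multimodal data.",
    devils_advocate=True,
)
print(p)
print(missing_headings("1. Evidence Analysis\n...\n3. Reasoning Chain\n..."))
```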

MCoT in action: Examples and OCR case study

The following examples illustrate how MCoT works across different domains.

Multimodal CoT example 1: Visual question answering (VQA)

Input: An image of a beach scene and the question, “Is this a popular tourist spot? Justify your answer.”

This is the image I shared with Perplexity Pro:

Multimodal Chain of Thought process followed by Perplexity Pro:

I asked this on Perplexity Pro – here’s how the steps took place:

The multimodal chain of thought process behind the conclusion involved sequential reasoning steps that integrated visual and textual information. I asked Perplexity Pro itself how it came up with the conclusion, and here is its explanation:

Stepwise multimodal reasoning

The process began by visually analyzing the aerial image to find geographic and environmental features, such as a curved coastline, rocky outcrops, greenery, and beach shacks.

These visual cues were then converted into descriptive keywords, which were used to search for matching destinations or popular tourist beaches in India.

Integration across modalities

Textual evidence was gathered from credible web sources. This information identified the location, with its distinctive shape, as Om Beach, a widely recognized tourist spot.

The model cross-validated visual patterns with textual knowledge. This process ensured that both image-specific features and descriptive data matched popular travel literature references.

Progressive rationalization

This approach used a multimodal chain of thought: it incrementally related visual elements to known travel destinations and verified them against external texts and structured tourism guides.

The answer was reached step by step by combining visual recognition, contextual keyword extraction, online verification, and corroboration across multiple data sources and modalities.

This chain of thought framework enables robust multimodal reasoning by integrating information across image and text domains, which leads to more reliable conclusions.
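The cross-validation step (matching visual cues against textual descriptions) can be pictured with a deliberately simple toy. The sketch below ranks candidate places by word overlap (Jaccard similarity) between extracted visual keywords and each candidate’s description. It is not how Perplexity works internally, which is not documented here; real systems would use search and embeddings. The candidate names and descriptions are made up for illustration.

```python
from typing import Dict, List, Set, Tuple


def keywords(text: str) -> Set[str]:
    """Lowercase word set, ignoring punctuation at word edges."""
    return {w.strip(".,;:!?()").lower() for w in text.split()} - {""}


def rank_candidates(visual_keywords: Set[str],
                    descriptions: Dict[str, str]) -> List[Tuple[str, float]]:
    """Score each candidate by Jaccard overlap between visual cues and its description."""
    scores = []
    for name, desc in descriptions.items():
        words = keywords(desc)
        union = visual_keywords | words
        score = len(visual_keywords & words) / len(union) if union else 0.0
        scores.append((name, score))
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


# Hypothetical data: cues a vision step might extract, and two made-up candidate descriptions.
cues = {"curved", "coastline", "rocky", "outcrops", "greenery", "shacks"}
candidates = {
    "Candidate A": "A curved coastline with rocky outcrops, greenery and beach shacks.",
    "Candidate B": "A long straight sandy beach lined with hotels.",
}
print(rank_candidates(cues, candidates))
```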

Final answer shared by Perplexity Pro for identifying the image:

Multimodal CoT example 2: Social Situation Reasoning (Cognitive-CoT)

A specialized form of MCoT, called Cognitive Chain-of-Thought (CoCoT), uses a cognitively inspired three-stage process to help models reason about complex social situations.

Input: An image of two people arguing at a dinner table. Question: “What is likely happening here, and is the interaction positive or negative?”

This is the image I shared with Perplexity Pro:

Multimodal Chain of Thought process followed by Perplexity Pro:

I asked this on Perplexity Pro – here’s how the steps took place:

Here is a detailed multimodal chain of thought (MCoT) process used to arrive at the conclusion about the interaction depicted in the image:

Visual modality analysis

Carefully observed the scene and extracted visual clues: hand gestures, body orientation, the food on the table, glasses of wine, and the overall posture of both individuals.

Both people displayed expressive gestures. Their open palms, raised arms, and forward-leaning bodies indicated an emotionally charged conversation or disagreement, not a relaxed interaction.

Contextual interpretation

Recognized that the setting was a dinner table with food served and mostly uneaten, suggesting the discussion interrupted the meal.

Dinner is culturally a time for social bonding. An animated exchange during dinner likely signals a personal or relational issue. This heightens the seriousness of the interaction.

Multimodal integration and reasoning

Integrated the visual inputs (gestures, posture, and meal context) with general social knowledge about dinner conversations to hypothesize the nature of the exchange.

Followed a chain-of-thought process where each visual element was broken down and its typical meaning evaluated. Evidence was accumulated to decide whether the scene was likely positive or negative.

The overall tone seems negative because of the tense gestures, the lack of visible smiles or relaxation, and the interrupted meal.

Conclusion synthesis

Formulated a summarized conclusion. The individuals are probably experiencing a dispute or disagreement. The interaction is best described as negative. This is based on integrated multimodal reasoning, including visual cues and situational knowledge.

This stepwise, evidence-based MCoT process illustrates the integration of multiple data modalities, with each step supporting logical, human-like reasoning toward a more reliable scene interpretation.
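The “evidence was accumulated” step can be pictured as a running tally over observed cues. This is a toy illustration only: an MLLM does not use explicit weights like these, and the cue names and numbers below are hypothetical, paraphrased from the explanation above.

```python
from typing import Dict, Iterable, Tuple

# Hypothetical cue weights: negative values push toward "negative", positive toward "positive".
# These numbers are illustrative only, not taken from any model or study.
CUE_WEIGHTS: Dict[str, float] = {
    "raised arms": -1.0,
    "leaning forward": -0.5,
    "no visible smiles": -1.0,
    "meal interrupted": -0.5,
    "smiling": 1.0,
    "relaxed posture": 1.0,
}


def judge_interaction(observed: Iterable[str],
                      weights: Dict[str, float] = CUE_WEIGHTS) -> Tuple[str, float]:
    """Accumulate evidence cue by cue and return a label plus the final score."""
    score = 0.0
    for cue in observed:
        score += weights.get(cue, 0.0)  # unknown cues contribute nothing
    if score < 0:
        return "negative", score
    if score > 0:
        return "positive", score
    return "neutral", score


print(judge_interaction(["raised arms", "leaning forward", "no visible smiles", "meal interrupted"]))
```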

Final answer shared by Perplexity Pro for analyzing the image:

Case study: The new generation of OCR – When Multimodal LLMs learn to read

One of the most compelling applications of MCoT is Optical Character Recognition (OCR): the task of extracting text from images.

The old way (Traditional OCR):

Traditional OCR software operates on a rigid pipeline. It uses algorithms to detect blocks of text, segment them into lines and characters, and then recognize each character individually. While highly specialized, this approach lacks contextual understanding and can be brittle when faced with complex layouts or poor-quality images.

The Multimodal Chain of Thought way (Multimodal LLM-based OCR):

Multimodal LLMs approach OCR as a contextual reasoning problem, not just a recognition task. They don’t just “read” characters; they understand the document as a whole.

By processing image patches as visual tokens, they use their vast textual knowledge to infer text. They rely on visual layout, semantics, and context. For example, a Multimodal LLM can interpret a blurry word as “Invoice.” It does so because the word appears at the top of a document. The document has the visual structure of an invoice.
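“Image patches as visual tokens” refers to cutting an image into a grid of small tiles, in the style of Vision Transformers (whose original paper used 16x16 patches). The sketch below shows only the tiling step on a toy grayscale image; real models then project each flattened patch into an embedding and usually resize or pad the image first. Function and variable names are hypothetical.

```python
from typing import List

Image = List[List[int]]  # grayscale image as rows of pixel values


def to_patch_tokens(image: Image, patch: int) -> List[List[int]]:
    """Split an image into non-overlapping patch x patch tiles, each flattened row by row.

    Tiles are returned left-to-right, top-to-bottom. Edges that do not fill a whole
    tile are dropped here for simplicity.
    """
    height, width = len(image), len(image[0])
    tokens = []
    for top in range(0, height - patch + 1, patch):
        for left in range(0, width - patch + 1, patch):
            tokens.append([image[r][c]
                           for r in range(top, top + patch)
                           for c in range(left, left + patch)])
    return tokens


img = [[r * 4 + c for c in range(4)] for r in range(4)]  # 4x4 toy image with values 0..15
for token in to_patch_tokens(img, 2):
    print(token)
```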

The performance showdown:

This new approach creates a distinct set of trade-offs. Multimodal LLMs excel in areas where traditional OCR fails. These areas include documents that have complex layouts, tables, and forms. Multimodal LLMs are also proficient when reading text in natural scenes.

Nonetheless, they are highly sensitive to image resolution. Studies show that while multimodal LLM performance matches traditional OCR at resolutions around 300 ppi, it “deteriorates significantly below 150 ppi”. Furthermore, their reliance on context means they can struggle with random, context-independent character strings and may hallucinate common words in place of rare ones.

The hybrid future: Multimodal LLMs as post-correctors

A powerful emerging technique combines the best of both worlds. A fast, traditional OCR engine performs an initial transcription, and then an MLLM is used to correct the output. By providing the Multimodal LLM with both the original image and the noisy text, this multimodal post-correction leads to a “drastic improvement in transcription accuracy”.
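A hybrid pipeline needs only two pieces: a draft from the OCR engine, and a prompt that gives the MLLM both the image and that draft. In the sketch below, `OcrEngine` and `Mllm` are assumed interfaces (you would wrap a real OCR engine and a real MLLM client behind them), and the prompt wording is our own, not taken from the cited study. The instruction to change only what the image clearly contradicts is meant to limit the hallucination failure mode described above.

```python
from typing import Callable, List

# Assumed interfaces: plug a real OCR engine and a real MLLM client in behind these.
OcrEngine = Callable[[str], str]        # image path -> raw transcription
Mllm = Callable[[str, List[str]], str]  # (prompt, image paths) -> reply

POST_CORRECTION_TEMPLATE = (
    "The attached image is a scanned document. Below is a draft transcription produced by "
    "an OCR engine; it may contain character-level errors.\n"
    "Compare the draft against the image and return a corrected transcription. "
    "Only change text that the image clearly contradicts; do not add content that is not visible.\n\n"
    "Draft transcription:\n{draft}"
)


def ocr_with_post_correction(image_path: str, ocr: OcrEngine, mllm: Mllm) -> str:
    draft = ocr(image_path)                            # fast first pass by traditional OCR
    prompt = POST_CORRECTION_TEMPLATE.format(draft=draft)
    return mllm(prompt, [image_path])                  # MLLM sees both the image and the noisy text


# Offline stand-ins so the example runs without any OCR engine or API key.
fake_ocr: OcrEngine = lambda path: "lnvoice No. 1042"
fake_mllm: Mllm = lambda prompt, images: prompt.rsplit("\n", 1)[-1].replace("lnvoice", "Invoice")
print(ocr_with_post_correction("scan.png", fake_ocr, fake_mllm))
```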

The table below summarizes the key differences between traditional OCR and multimodal LLMs to help you decide which technology to deploy:

| Feature / Scenario | Traditional OCR (e.g., Tesseract, Azure Vision) | Multimodal LLM (e.g., GPT-4o, Gemini) |
|---|---|---|
| Core Mechanism | Pipeline: Layout analysis -> Character segmentation -> Character recognition | Holistic: Processes image patches and text contextually |
| High-Resolution (300+ ppi) | High accuracy, reliable. | Comparable accuracy to traditional OCR. |
| Low-Resolution (< 150 ppi) | Performance degrades but often remains functional. | Performance deteriorates significantly, falling below traditional methods. |
| Complex Layouts (Tables, Forms) | Often struggles, requires templates and complex pre-processing. | Major strength. Understands structure from visual context. |
| Handwritten Text | Historically a major weakness, though improving. | Strong performance due to contextual guessing and reasoning. |
| Contextual Understanding | None. Reads characters literally without semantic awareness. | Major strength. Can infer words based on document type and semantics. |
| Failure Mode | Character-level errors (e.g., misinterpreting ‘l’ as ‘1’). | Hallucination, favoring common words over rare or random ones. |
| Best Use Case | High-volume, standardized, high-resolution documents (e.g., scanning books). | Unstructured documents, text in natural scenes, variable layouts (e.g., receipts, social media images). |
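The table can be distilled into rough routing rules. This is a sketch under stated assumptions: the `Document` fields and rule order are our own choices, the 150 ppi threshold comes from the study quoted above, and a real deployment should tune these rules on its own documents.

```python
from dataclasses import dataclass


@dataclass
class Document:
    ppi: float                 # effective scan resolution, pixels per inch
    complex_layout: bool       # tables, forms, variable layouts
    natural_scene: bool        # text in photos rather than flat scans
    handwritten: bool
    needs_exact_strings: bool  # e.g. serial numbers or random IDs where guessing is dangerous


def effective_ppi(pixel_width: int, physical_width_in: float) -> float:
    """Pixels per inch along the page width, e.g. a 2550-px scan of an 8.5-inch page."""
    return pixel_width / physical_width_in


def choose_engine(doc: Document) -> str:
    """Rough routing rules distilled from the comparison table; resolution is checked first."""
    if doc.ppi < 150:
        return "traditional OCR"   # MLLM accuracy deteriorates significantly below 150 ppi
    if doc.needs_exact_strings:
        return "traditional OCR"   # MLLMs may replace rare or random strings with common words
    if doc.complex_layout or doc.natural_scene or doc.handwritten:
        return "multimodal LLM"    # layouts, scenes and handwriting benefit from context
    return "traditional OCR"       # high-volume, standardized, high-resolution documents


print(effective_ppi(2550, 8.5))
print(choose_engine(Document(300, True, False, False, False)))
```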

The frontier: When AI starts to visualize its thoughts

While MCoT marks a significant advancement, its reasoning remains rooted in language. Research is now exploring what becomes possible when AI reasons not only with words but also with self-generated images.

Beyond text: Multimodal Visualization-of-Thought (MVoT)

Multimodal Visualization-of-Thought (MVoT) addresses a key limitation of Chain of Thought (CoT) and Multimodal CoT: their reliance on purely verbal (textual) reasoning.

For many complex problems, especially those involving spatial reasoning, language is an inefficient and often inadequate tool. Human cognition, in contrast, naturally and seamlessly combines words with mental images.

MVoT enables a multimodal LLM to generate images as part of its reasoning chain. The model’s output becomes an interleaved sequence of text (“verbal thoughts”) and images (“visual thoughts”). This allows it to use the right cognitive tool for the right part of the problem. This signifies a paradigm shift from an AI that is a linguistic reasoner to one that is a true cognitive partner.

The core concept of MVoT is made intuitive through a simple analogy. As one group of researchers explained, you would not teach a child to tie their shoes by only describing it in words. You would show them, step-by-step. Trying to solve visual problems with only words is like “trying to teach someone origami over the phone”.

MVoT allows the AI to “draw out its thinking process.” For example, it can sketch a quick map to solve a puzzle instead of getting lost in confusing verbal descriptions.

In one experiment, an AI was tasked with guiding an elf across a frozen lake without falling through holes. When the model could only “think in words,” it struggled with complex spatial descriptions and achieved a 61% success rate. When it could use MVoT to generate visual sketches of its planned path at each step, the success rate jumped to 85%. This provides concrete evidence that visual thinking helps on problems that are hard to solve with text alone.
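To make “interleaved verbal and visual thoughts” concrete, the sketch below traces a plan on a tiny frozen-lake grid and emits a text step followed by a sketch of the map after every move. In MVoT the model generates actual images; here an ASCII rendering stands in for each visual thought. The grid, symbols, and function names are hypothetical, and this does not reproduce the experiment or its numbers.

```python
from dataclasses import dataclass
from typing import List, Tuple, Union

Pos = Tuple[int, int]


@dataclass
class VerbalThought:
    text: str


@dataclass
class VisualThought:
    sketch: str  # stand-in for a generated image: an ASCII rendering of the grid


Thought = Union[VerbalThought, VisualThought]

MOVES = {"down": (1, 0), "up": (-1, 0), "right": (0, 1), "left": (0, -1)}


def render(grid: List[str], agent: Pos) -> str:
    """Draw the lake with the elf as 'E'. 'S' = start, 'H' = hole, 'G' = goal, '.' = ice."""
    rows = [list(row) for row in grid]
    r, c = agent
    rows[r][c] = "E"
    return "\n".join("".join(row) for row in rows)


def trace_plan(grid: List[str], start: Pos, plan: List[str]) -> List[Thought]:
    """Interleave a verbal step with a visual sketch after every move; stop on failure."""
    thoughts: List[Thought] = [VisualThought(render(grid, start))]
    r, c = start
    for move in plan:
        dr, dc = MOVES[move]
        nr, nc = r + dr, c + dc
        if not (0 <= nr < len(grid) and 0 <= nc < len(grid[0])):
            thoughts.append(VerbalThought(f"Move {move} would leave the lake; this plan fails."))
            break
        r, c = nr, nc
        thoughts.append(VerbalThought(f"Move {move} to {(r, c)}."))
        if grid[r][c] == "H":
            thoughts.append(VerbalThought("That square is a hole; this plan fails."))
            break
        thoughts.append(VisualThought(render(grid, (r, c))))
    return thoughts


lake = ["S.H",
        "...",
        "H.G"]
for t in trace_plan(lake, (0, 0), ["down", "right", "down", "right"]):
    print(t.text if isinstance(t, VerbalThought) else t.sketch, end="\n\n")
```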

Future applications of Multimodal Visualization of Thought

Multimodal VoT is a fundamental step towards more robust and human-like reasoning, with profound implications.

Applications of multimodal VoT:

The potential applications are vast and transformative. In embodied AI and robotics, a robot could visualize its path through a cluttered room before ever moving. A mechanic could use an AI that visualizes how complex parts fit together.

In medical diagnostics, a model could generate images showing the predicted progression of a disease on a series of scans.

For autonomous driving, a vehicle could visualize the predicted paths of other cars and pedestrians to make safer decisions.

Prompt engineering implication of multimodal VoT

The future of advanced prompt engineering may involve new interactive capabilities, such as asking an AI not only to “Tell me the next step” but also to “Show me the next step.” This requires new prompting techniques and MLLM architectures that are generative in both text and vision and capable of producing interleaved output.

How to start using multimodal chain of thought reasoning today?

The journey from simple text-based commands to sophisticated, multimodal reasoning signifies a new chapter in artificial intelligence. Mastering these techniques is rapidly becoming a core competency for advanced AI practitioners.

Summary of Multimodal Chain of Thought reasoning:

First, let’s summarize what I have explained so far about multimodal chain of thought reasoning:

- Multimodal Chain of Thought extends the power of step-by-step reasoning to complex problems involving images, audio, and other data types. It significantly reduces hallucinations and improves accuracy.

- Effective MCoT prompting is a systematic, five-pillar process. First, gather all evidence. Next, analyze each modality individually. Then, synthesize connections between them. After that, build an explicit reasoning chain. Finally, confirm the final conclusion.

- MCoT is already transforming established fields like OCR. It replaces brittle, pipeline-based techniques with a more robust, contextual understanding of documents.

- The future of AI reasoning is visual. Emerging paradigms like MVoT are enabling AI to “show its work” with images, not just words, unlocking new classes of spatial and complex reasoning problems.

Get started with Multimodal Chain of Thought prompting:

- Identify a testing ground: Select a recurring, complex problem in your work that inherently involves multiple data types. Examples include debugging from error logs and UI screenshots, analyzing user feedback alongside product mockups, or assessing a marketing campaign using ad creative, engagement metrics, and customer comments.

- Build a simple framework: Create a basic MCoT prompt template. Use the five-pillar structure outlined in this guide. This template should be tailored to that specific problem.

- Run your first analysis: Apply your prompt to a real instance of the problem. Give the MLLM the relevant images, text, and any other data, along with your structured prompt.

- Iterate and refine: Carefully analyze the model’s output. Was the reasoning logical? Did it successfully connect insights from different modalities? Did it miss anything obvious? Use this analysis to refine your prompt, making your instructions for analysis and synthesis more specific and clear.

Mastering Multimodal Chain of Thought is crucial. It allows us to move beyond AI that provides simple “answers.” This mastery helps in building true AI “thinking partners.” These partners can reason about the rich, complex, and deeply multimodal world we all live in.

Frequently asked questions (FAQs) on Multimodal Chain of Thought reasoning

Is Multimodal Chain of Thought a specific AI model or a technique?

MCoT is a prompting technique, not a specific model.

It is a method for structuring a prompt that guides a Multimodal Large Language Model (MLLM) to reason through a problem step by step, using information from different data types like images and text. The two-stage framework (rationale generation followed by answer inference) is a common way to implement the technique, but the core idea is eliciting a reasoning process, not building a standalone model.

What are the main challenges and limitations of MCoT?

Despite its advantages, MCoT faces challenges. These include finding effective strategies for leveraging varied multimodal context and designing thought processes that genuinely enhance reasoning. More robust strategies are needed to prevent hallucinations and keep reasoning grounded in evidence. As tasks grow more complex, guaranteeing the logical consistency of reasoning chains and maintaining accuracy across modalities becomes critical.

What are some real-world applications of MCoT?

MCoT is being applied in a growing number of critical domains that need complex reasoning based on diverse data inputs. Key applications include:

- Autonomous Driving: For better situational perception and decision-making by integrating data from cameras, LiDAR, and maps.

- Healthcare and Medicine: To improve medical diagnostics by analyzing patient data, medical imaging, and clinical notes together for a more comprehensive assessment.

- Embodied AI and Robotics: To enhance a robot’s ability to understand and interact with its environment. This is achieved by processing visual, sensory, and textual commands for sequential task planning.

- Agentic Systems: To allow AI agents to navigate complex digital or physical environments more effectively and generate high-quality multimodal content.

Are there different types or methodologies of MCoT?

Yes, the field of MCoT is evolving, with various methodologies being explored. Research can be categorized from several perspectives, including:

- Rationale Construction: How the reasoning steps are created (e.g., based on prompts, pre-defined plans, or learned from data).

- Structural Reasoning: The shape of the reasoning process. It moves from simple linear chains to more complex tree or graph structures to represent dependencies between thoughts.

- Information Enhancing: Incorporating external tools or knowledge bases to strengthen the fidelity and accuracy of the reasoning chain.

- Multimodal Rationale: Moving beyond just text-based rationales. This approach includes visual or spatial information directly within the reasoning steps. This is seen in the emerging field of MVoT.

How is MCoT different from just giving a model an image and a question?

The key difference lies in the explicit, intermediate reasoning step. When you give a model an image and a question, it goes directly from input to answer. This process occurs in a single, often opaque, step. With MCoT, you instruct the model to first generate a rationale. The model provides a step-by-step explanation of how it interprets the multimodal evidence and plans to solve the problem. This happens before it produces the final answer. This process makes the model’s “thinking” transparent. It reduces the risk of it jumping to incorrect conclusions based on superficial correlations. The approach significantly improves accuracy on complex tasks.


This blog post is written using resources of Merrative. We are a publishing talent marketplace that helps you create publications and content libraries.
