The ‘context window’ started as a technical footnote in machine learning research. It has now evolved into one of the most critical defining characteristics of Artificial Intelligence in 2026.

Context Window is the boundary of an AI’s cognition. It is the maximum scope of information it can perceive, analyze, and reason about at any single moment.

For enterprise leaders, developers, and researchers, understanding the context window is no longer optional. It is fundamental to determining which problems AI can solve and which it can’t.

In the early days of the Transformer architecture, context windows were restrictive bottlenecks. They limited interactions to brief exchanges or short abstracts.

Today, with the advent of models like Google’s Gemini 3 and Anthropic’s Claude Opus 4.5, the window has expanded to millions of tokens. This is enough to hold entire libraries, hours of high-definition video, or the full codebase of complex software applications. This shift has fundamentally altered the economics of AI. It moves the industry from ‘search and retrieve’ (Retrieval Augmented Generation) to one of “load and reason” (Long Context).

In this guide, we will understand ‘what is context’ in AI in simple jargon-free language. This includes

Key takeaways:

- Disecct the ‘physics’ of attention mechanisms that dictate these context window limits

- Analyze the comparative landscape of top-tier AI models and their context windows

- Explore the economic trade-offs of massive-context inference

- Give actionable context engineering strategies for mastering this new era of AI.

The Anatomy of Machine Cognition – Basics of ‘Context’ in LLMs

To use Large Language Models (LLMs) effectively, one must first understand the concept of the ‘context window.’

Context window is often metaphorically described as ‘working memory.’ This comparison is helpful, but it obscures the precise mathematical and computational reality that dictates model behavior.

What is a ‘Context Window’?

In the architecture of a Transformer-based LLM, the context window signifies the maximum sequence of tokens. These are discrete informational units. The model can process them at the same time during a single inference step. It is the model’s field of view.

When a user interacts with an AI, the ‘prompt’ sent to the model is not merely the question asked. It is a composite object containing:

- System Instructions: The immutable “constitution” of the model (e.g., “You are a helpful assistant proficient in Python”).

- Conversation History: The accumulation of all prior turns in the dialogue, which allows the model to keep continuity.

- Few-Shot Examples: Sample inputs and outputs provided to guide the model’s style and logic.

- Retrieval Data (RAG): Information pulled from external databases to ground the response in fact.

- User Input: The immediate query or document provided for analysis.

The context window is the hard limit on the sum of these parts.

If the total token count exceeds this limit, the information must be truncated. This is true whether it is 8,192 tokens for an older model or 2,000,000 for a state-of-the-art one. Data that slides out of the context window ceases to exist for the model. It does not get ‘compressed’ into long-term memory. Instead, it is simply deleted from the calculation. This deletion leads to the phenomenon where a chatbot ‘forgets’ instructions given at the start of a long conversation.

The Atomic Unit: The Science of Tokenization

The measurement of context windows in ‘tokens’ rather than words or bytes often causes confusion. To estimate costs and capacity accurately, one must understand tokenization.

Neural networks can’t process raw text strings. They process vectors of numbers. Tokenization is the translation layer that converts human language into these numerical IDs.

This process is not a 1:1 mapping of words to numbers. Modern tokenizers, like OpenAI’s cl100k_base or Llama 3’s tokenizer, use Byte Pair Encoding (BPE) to optimize efficiency.

- Efficiency Strategy: Common words like ‘apple’ or ‘the’ are assigned a single token. Rare words or complex morphology are broken into sub-word chunks. For example, ‘unbelievable’ might be tokenized as

un+believ+able. - Whitespace and Punctuation: These are not ignored. A trailing space is often part of a word’s token (e.g., ‘ hello’ is distinct from ‘hello’). A single distinct character like a curly brace

{in code is a token.

The Conversion Heuristic

While the exact ratio varies by language and domain (code vs. prose), the industry standard heuristic for English text is:

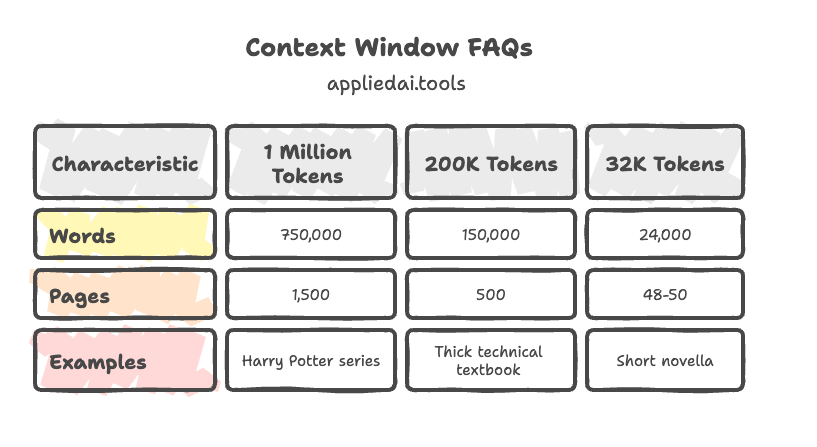

This ratio is critical for ‘sizing’ a context window against real-world assets.

Table 1: Visualizing Context Capacity in Real-World Terms

| Context Size | Est. Word Count | Equivalent Document Scale | Use Case Feasibility |

| 32K (Medium) | ~24,000 | A corporate annual report (10-K) or a novella. | Analyzing a single complex contract or a chapter of a book. |

| 128K (Standard) | ~96,000 | A full-length novel (e.g., To Kill a Mockingbird) or small codebase. | Reading a whole book, analyzing a project’s documentation. |

| 200K (High) | ~150,000 | A dense textbook or legal discovery bundle (approx. 500 pages). | Comprehensive legal review, medical record analysis. |

| 1M (Ultra) | ~750,000 | The entire Harry Potter series or ~50,000 lines of code. | “Needle in a haystack” retrieval across massive archives. |

| 10M (Frontier) | ~7.5 Million | ~100 books, entire corporate wikis, hours of 4K video. | Llama 4 Scout scale. Massive cross-repository refactoring. |

The Physics of Attention: Why Limits Exist

If larger context windows are better, why did we not start with 1 million tokens? Why do limits exist at all?

The answer lies in the computational complexity of the Self-Attention Mechanism, the engine of the Transformer.

The Quadratic Barrier (O(N2))

In a Transformer, ‘attention’ is the process where the model calculates the relevance of every token to every other token. To understand the word ‘bank’ in a sentence, the model attends to ‘river.’ This indicates a nature context. Alternatively, it attends to ‘money,’ indicating a financial context elsewhere in the sequence.

Mathematically, this involves multiplying a Query matrix (Q) by a Key matrix (K) to produce an attention score map. The size of this map is N X N, where N is the context length.

- Quadratic Growth: If you double the context length (N), the number of calculations quadruples (N2). Moving from 4k to 8k is not twice as hard; it is four times as computationally expensive.

- The Implications: Processing 1 million tokens using standard attention would need calculating and storing a matrix with 1012 (one trillion) entries. This creates massive latency and requires astronomical amounts of GPU memory (VRAM), far exceeding the capacity of standard hardware.

The Memory Bottleneck: KV Cache

During inference (text generation), the model uses a Key-Value (KV) Cache. This stores the mathematical representations of past tokens. With this cache, the model doesn’t have to re-read the entire prompt for every new word it generates.

- VRAM Consumption: The KV cache grows linearly with context length. A 70-billion parameter model has a 128k context window. The KV cache alone can consume dozens of gigabytes of VRAM. Providers are forced to use expensive enterprise GPUs. They use models like NVIDIA H100s with 80GB VRAM. They also need sophisticated parallelization techniques to serve even a single user.

Architectural Breakthroughs: Breaking the Limit

The explosion of context sizes in 2024 and 2025 was enabled by specific architectural innovations that mitigated these physical limitations.

Rotary Positional Embeddings (RoPE)

Early models used ‘absolute’ positioning (labeling tokens 1, 2, 3…). This broke down when sequences exceeded training length. RoPE changed this by encoding position as a rotation in geometric space.

- Relative Distance: RoPE allows the attention mechanism to focus on the relative distance between tokens. It matters that ‘Token A’ and ‘Token B’ are close to each other. Their absolute positions in the document are not important.

- Extrapolation: This mathematical property allows models to generalize better to longer sequences than they were strictly trained on. It acts as a critical enabler for the 100k+ windows we see today.

FlashAttention

FlashAttention was developed to solve the memory bandwidth bottleneck. It is an algorithm that reorders the attention computations. This reduces how often data moves between the GPU’s slow High Bandwidth Memory (HBM) and its fast on-chip SRAM.

- Impact: By making attention ‘IO-aware,’ FlashAttention provides a 2-4x speedup and significantly reduces memory footprint, making long-context inference economically possible.

AI Model Context Window Comparison for 2026

The market for Long Context LLMs has bifurcated. Some models optimize for massive capacity (Quantity), while others optimize for reasoning density within a smaller window (Quality).

Table 2: Comparative Analysis of Top Long-Context Models

| Model Family | Context Window | Native Modality | Best Use Case | Pricing (Input/1M) | Source |

| Llama 4 Scout | 10,000,000 | Text, Image | Massive multimodal analysis, whole-library ingestion. | Open Weights / Varies | Llama 4 Scout Context Window |

| Gemini 3 Pro | 1,000,000 | Audio, Video, Text, PDF | Agentic workflows, complex multimodal reasoning. | ~$2.00 – $4.00 | Gemini 3 Pro Context Window |

| GPT-5 Thinking | 1,96,000 | Audio, Image, Text | Deep reasoning, real-time voice, high-fidelity coding. | Varies (Tiered) | GPT 5 Context Window |

| Grok 4 | 256,000 | Text, Vision | Real-time information (X integration), complex reasoning. | $3.00 | Grok 4 Context Window |

| Claude Opus 4.5 | 200,000 | Text, Image | Long-form storytelling, complex agentic coding. | $5.00 | Claude Opus 4.5 Context Window |

| DeepSeek V3.2 | 128,000 | Text | Cost-efficient reasoning, large-scale processing. | ~$0.28 (Cache Miss) | DeepSeek V3.2 Context Window |

Deep Dive: Gemini 3 Pro Context Window

Google’s Gemini 3 Pro continues to push the boundaries of multimodal native processing. While keeping the 1M token standard of its predecessor, it has vastly improved its ‘agentic’ capabilities.

Native Multimodality:

Gemini 3 Pro processes video, audio, and PDFs as native tokens. It does not just ‘see’ frames; it understands temporal sequences in video and audio. A 1M window can ingest approximately 11 hours of audio. It can also ingest 1 hour of video. This enables it to find specific events or reason across different media types.

Reasoning-First:

Older models struggled with instructions buried in large contexts. Nonetheless, Gemini 3 Pro is optimized for complex instruction tracking within that 1M window. This makes it ideal for ‘agentic’ loops where the model must plan and execute multi-step tasks.

Deep Dive: Llama 4 Scout Context Window

Meta has redefined the ‘ultra-long context’ category with Llama 4 Scout, boasting a staggering 10 million token context window.

Whole-System Analysis:

A 10M window allows for the ingestion of entire operating system kernels. It can handle massive legal libraries. It also supports thousands of academic papers at the same time.

Open Weights:

Llama 4 is an open-weights model. It allows enterprises to run this massive context locally, provided the hardware permits. This approach solves data privacy concerns. These concerns often plague cloud-based long-context solutions.

Deep Dive: Claude Opus 4.5 Context Window

Anthropic retains its focus on ‘quality over quantity.’ While 200k tokens is smaller than Gemini or Llama, Claude Opus 4.5 is optimized for reasoning density.

Artifacts and Storytelling:

Claude Opus 4.5 is widely regarded as the premier model for nuanced creative writing and complex coding tasks. Its 200k window is adequate to load relevant modules, and it excels at maintaining narrative consistency over long outputs.

The Economics of Scale for AI Models in terms of Context Window

The ability to process a million tokens exists, but is it affordable?

The economics of long-context inference are radically different from standard chat interactions.

The ‘Re-Reading’ Tax

LLMs are stateless.

They do not ‘remember’ your earlier interaction unless you send the history back to them in the new prompt. In a long-context scenario, this creates a compounding cost problem.

Imagine you load a 500-page manual (250,000 tokens) into a model to ask questions.

- Question 1: You send 250k tokens. Cost: ~$1.25 (at $5/1M).

- Question 2: To ask a follow-up, you must send the 250k tokens again, plus the new question. Cost: Another ~$1.25.

- Question 10: You have now paid to process the same manual 10 times. Total cost: ~$12.50.

For high-volume applications, this ‘Re-reading Tax’ makes naive long-context usage prohibitively expensive compared to database retrieval methods.

Context Caching: The Economic Solution

To solve this, providers like Google and Anthropic introduced Context Caching. This feature allows developers to “checkpoint” a large prompt in the model’s memory.

- How it Works: You upload the 500-page manual once. The provider stores the processed KV cache on their servers. You are given a

cache_id. - The Benefit: For following questions, you only send the

cache_idand your short question (50 tokens). The model reuses the cached state. - Cost Impact: Caching typically offers a 75% to 90% discount on input tokens compared to re-processing them. DeepSeek V3.2, for instance, charges significantly less for “Cache Hits” ($0.028/1M) versus “Cache Misses” ($0.28/1M).

Table 3: Context Caching Comparison

| Feature | Google Gemini | Anthropic Claude | DeepSeek |

| Activation | Explicit (API call) or Implicit (Auto-cache) | Explicit (Header checkpoints) | Implicit (Automatic) |

| Persistence | Defined TTL (e.g., 1 hour default) | Defined TTL (e.g., 5 min to hours) | Varies (Disk-based offloading) |

| Pricing Model | Discounted Input + Hourly Storage Fee | Write Cost (Full) + Read Cost (10%) | Massive discount on Cache Hit |

Latency Considerations (Time-To-First-Token)

Beyond cost, latency is the second constraint. Processing 1 million tokens takes time, even on the world’s fastest supercomputers.

- Prefill Time: The time taken to process the input before generating the first word. For a 1M token prompt, ‘pre-fill’ can take anywhere from 10 to 60 seconds depending on the provider’s hardware optimization.

- User Experience: This delay makes massive context windows unsuitable for real-time, snappy chat interfaces unless caching is used. It is better suited for asynchronous ‘batch’ tasks (e.g., “Summarize this case file and email me the result”).

Strategic Architecture: RAG vs. Long Context

In 2025, the central architectural debate for AI developers is:

Do I put my data in the context window, or do I keep it in a database?

This is the choice between Long Context and Retrieval Augmented Generation (RAG).

Retrieval Augmented Generation (RAG)

RAG is the traditional method of connecting LLMs to data. It involves:

- Chunking documents into small pieces.

- Converting them into mathematical vectors (Embeddings).

- Storing them in a Vector Database (e.g., Pinecone, Milvus).

- Retrieving only the top 3-5 most relevant chunks to send to the LLM.17

Strengths:

- Infinite Scale: Can work with Terabytes of data.

- Low Latency & Cost: Only processes a tiny amount of data per query.Weaknesses:

- Fragmented Reasoning: RAG struggles with “global” questions. If you ask, “What is the overarching theme of these 100 contracts?”, RAG will fail because it only sees 5 tiny snippets at a time. It misses the forest for the trees.

I have covered what is Retrieval Augmented Generation (RAG) in AI here:

The Long Context Approach

Loading the entire dataset (e.g., all 100 contracts) into the context window.

Strengths:

- Holistic Reasoning: The model can see every connection, contradiction, and theme across the entire dataset at once.

- Simplicity: Eliminates the complexity of building embedding pipelines and tuning retrieval algorithms.Weaknesses:

- Cost and Latency: As discussed, this is expensive and slow without caching.

The Hybrid Future: Agentic RAG

The industry is converging on hybrid architectures.

- The Workflow: An AI Agent uses RAG to narrow down a massive dataset (e.g., a 10TB corporate drive) to a “relevant subset” (e.g., 50 specific documents totaling 200k tokens).

- The Analysis: It then loads that entire subset into a Long Context window for deep, holistic analysis.21

- Hierarchical Summarization: For extremely large tasks, systems break documents into chunks. They summarize each chunk. Then, they feed the summaries into the context window to create a high-level map of the content.

Here’s a comparison table for reference:

Actionable Engineering and Optimization

For organizations deploying LLMs, theory must translate into code. Here are the best practices and patterns for managing context windows in production.

Python Pattern: Token Management

You can’t rely on guessing. You must measure. Using OpenAI’s tiktoken library is the standard for managing context budgets in Python.

import tiktoken

def calculate_tokens(text: str, model: str = "gpt-4o") -> int:

"""Returns the number of tokens in a text string."""

try:

encoding = tiktoken.encoding_for_model(model)

except KeyError:

encoding = tiktoken.get_encoding("cl100k_base")

tokens = encoding.encode(text)

return len(tokens)

def truncate_to_window(text: str, max_tokens: int = 120000) -> str:

"""Safely truncates text to fit within a context limit."""

encoding = tiktoken.encoding_for_model("gpt-4o")

tokens = encoding.encode(text)

if len(tokens) <= max_tokens:

return text

# Keep the most recent/relevant part (usually the end for chat logs)

# Or the beginning for documents. Here we keep the beginning.

truncated_tokens = tokens[:max_tokens]

return encoding.decode(truncated_tokens)

# Example Usage

document = "..." # Massive string

token_count = calculate_tokens(document)

print(f"Document Size: {token_count} tokens")

if token_count > 128000:

print("Warning: Document exceeds context window!")

safe_doc = truncate_to_window(document)

Handling Overflow

When the context is full, the system fails. Developers must implement overflow strategies:

- Sliding Window: Process a long document in overlapping segments (e.g., tokens 0-8000, then 6000-14000). The overlap ensures context isn’t lost at the boundaries.

- Summarization Chains: In a chatbot, as the history grows, use a background process to summarize the oldest messages. Replace the raw logs with the summary in the prompt. This keeps the “memory” fresh but compact.

- Entity Extraction: Instead of keeping the full text, extract key facts such as Names, Dates, and Decisions. Store them in a JSON object. This JSON object is passed in every prompt.

Learn Context Engineering

Andrej Karpathy, a leading AI researcher, coined ‘Context Engineering’ as the successor to prompt engineering. It is the art of curating the window’s content.

- Structured Data: Do not dump raw text. Use XML tags to help the model parse the window.

- Bad: “Here is the document: Now answer this…”

- Good: “I am providing a document in tags. <document_content> </document_content>. Based on the above…”

- Instruction Placement: Combat the “Lost in the Middle” effect by placing your core instructions at the very end of the prompt. Make sure they are after the data. This exploits the Recency bias to make sure the model follows orders.

I have covered what is context engineering with examples and best practices here:

FAQs on What is Context Window Solved

What does a 1 million token context window mean?

It means the model can hold roughly 750,000 words in its active working memory. This is equivalent to about 1,500 single-spaced pages of text. It is also comparable to the entire Harry Potter series (7 books) or approximately 50,000 lines of code. It allows the model to ‘read’ all this information at once before answering a question.

What is a context example?

Consider the example: In a customer service interaction, the ‘context’ is the combination of:

- System Prompt: “You are a helpful agent for ACME Corp.”

- History: User: “My login failed.” Bot: “Error code?” User: “503.”

- Current Query: User: “What does that mean?”Without the history (context), the bot wouldn’t know “that” refers to error 503.

What is context window ChatGPT?

As of 2025, the standard context window for ChatGPT ranges from 128,000 to 400,000 tokens. This range depends on the specific model and plan. These models use GPT-4o or GPT-5. The older GPT-4 models had 8k or 32k limits.

What happens when the context window is full?

When context window is full, the AI model hits a hard limit. It can’t accept more data. Systems handle this by ‘truncating’ (deleting) the oldest part of the conversation history. This makes space for the new message. This results in the AI ‘forgetting’ what was said at the start of the chat.

How large is a 200K context window?

A 200,000 token window (standard for Claude Opus 4.5) holds approximately 150,000 words or 500 pages of text. This is significant enough to hold a very thick technical textbook. It can also accommodate a comprehensive legal discovery file. Additionally, it could contain the documentation for a large software library.

How big is a 32K context window?

32,000 tokens is roughly 24,000 words or 48-50 pages. It is roughly the length of a short novella or a detailed quarterly financial report. It was the ‘large’ standard in 2023 but is considered ‘medium’ in 2025.

Why is it called context?

In linguistics, ‘context’ refers to the surrounding words that give a specific word its meaning (e.g., ‘bank’ means something different in ‘river bank’ vs. ‘bank account’). The ‘context window’ is the scope of surrounding text the model can ‘see’ to derive this meaning.

How many pages is 1,000 tokens?

1,000 tokens is approximately 750 words. This typically translates to about 1.5 single-spaced pages or 3 double-spaced pages of standard text.

Why do LLMs have a context window?

They are limited by hardware memory (VRAM) and computational complexity. The Self-Attention mechanism’s cost grows quadratically ($O(N^2)$). An infinite window would need infinite memory and infinite processing power.

How large is the ChatGPT context window?

128,000 tokens for GPT-4o, and up to 400,000 tokens for GPT-5 API users.

What is an example of context in AI?

If you upload a PDF of a manual and ask “How do I turn it off?”, the PDF is the context. The AI uses the information in the PDF to understand what ‘it’ refers to and how to answer the question.

What is the difference between token and context in LLM?

A Token is the unit of measurement (like a ‘byte’ or ‘word’). Context is the content currently loaded into the model. The Context Window is the maximum capacity of that container (measured in tokens).

What is the limit of LLM context window?

Limits vary by model tier:

- Standard: 128,000 tokens (DeepSeek V3.2).

- High: 400,000 tokens (GPT-5).

- Ultra-High: 1M – 10M tokens (Gemini 3 Pro, Llama 4 Scout).

What does 1M context window mean?

It means the model can process 1 million tokens (approx 750k words) in a single prompt. This ability allows for ‘in-context learning’. Here, you can teach the model a new skill. You do this by providing a massive manual in the prompt, without retraining the model.

How are context windows measured?

They are measured in Tokens.

What happens when you reach the context window?

The API will return an error (e.g., context_length_exceeded) or the application will automatically remove older text to make room. You can’t push past the limit.

Which LLM has the largest context window?

As of 2025, Meta’s Llama 4 Scout has the largest context window. It supports 10 million tokens. Gemini 3 Pro follows with 1 million tokens.

What is an LLM context window?

An AI model can consider a maximum amount of information at one time when generating a response. This includes text, code, and images.

How do I increase the context window of LLM?

You generally can’t increase the hard limit of a pre-trained model. You must:

- Switch to a model with a larger native window (e.g., switch from GPT-4 to Llama 4 Scout).

- Use RAG (Retrieval Augmented Generation) to swap data in/out of the window.

- If using open-source models, use techniques like RoPE Scaling (NTK-Aware scaling). These approaches mathematically stretch the window. But, this often degrades performance without fine-tuning.

What is the difference between context window and embedding?

- Context Window: Active, short-term working memory. Expensive, limited size, used for reasoning.

- Embedding: Passive, long-term storage. Cheap, infinite size, used for search. Embeddings are used to find data to put into the Context Window.

Why do LLMs have a context window?

GPU memory (VRAM) has physical limitations needed to store the KV Cache. Additionally, the attention mechanism has a high computational cost.

Glossary of Jargons

What is Attention Mechanism?

The core algorithm of the Transformer. It allows the model to ‘focus’ on different parts of the input sequence. This helps the model understand relationships between words.

What is Context Caching?

Context Caching allows developers to save the processed state of a prompt. It is stored in the model’s memory (KV Cache). This significantly reduces the cost and latency of later queries on the same data.

What are Embeddings?

Embeddings are vector representations of text used to measure semantic similarity. They are the engine behind RAG systems.

What is Inference?

Inference isthe phase where the model generates predictions or text (as opposed to Training).

What is KV Cache (Key-Value Cache)?

A high-speed memory structure on the GPU that stores pre-calculated attention vectors for the context. It is the primary consumer of VRAM during inference.

What is an LLM (Large Language Model)?

LLMs are AI models like GPT, Claude, and Gemini trained on massive datasets to predict and generate text.

What is Lost in the Middle concept?

Lost in the Middle is when LLMs struggle to recall information buried in the middle of a long context window. They end up preferring information at the start or end.

What is Multimodal?

Multimodal is the ability of an LLM to process different types of media (Text, Images, Audio, Video) directly within the context window.

What is Prompt Injection?

A security vulnerability where an attacker hides malicious instructions inside the context window to manipulate the model’s output.

What is RAG (Retrieval Augmented Generation)?

RAG is an architectural pattern that retrieves relevant data from a database. It inserts it into the context window, simulating ‘infinite’ memory.

What is RoPE (Rotary Positional Embeddings)?

RoPE is a mathematical technique for encoding the position of tokens. This enables models to generalize to very long context windows.

What is a Token?

Token is the fundamental unit of text processing for an LLM, roughly equivalent to 0.75 of a word.

What is a Transformer?

Transformer is the neural network architecture introduced by Google in 2017 that underpins all modern GenAI.

What is Truncation?

Truncation is the process of cutting off text (usually the oldest history) to fit the input within the model’s context window limit.

What is VRAM (Video RAM)

VRAM is high-speed memory on a GPU. The amount of VRAM directly dictates the maximum context window size and batch size a specific hardware setup can handle.

Learn more about context window

I hope this article was useful for you —> here are some immediate Next Steps to apply this new knowledge:

- Audit Your Data: Classify your use cases. Do you need ‘Global Reasoning’ (Long Context) or ‘Specific Fact Retrieval’ (RAG)?

- Track Your Tokens: Implement token counting in your application logic today. Use the Python patterns provided to prevent overflow errors.

- Use Caching: If your application involves repeated queries against the same documents (e.g., “Chat with this PDF”), implement Context Caching promptly to reduce costs by >75%.

- Engineer Your Context: Stop dumping raw text. Structure your long-context prompts with clear XML tags. Place critical instructions at the end to defeat the “Lost in the Middle” curse.

Read more from AppliedAI to learn context engineering:

- Learn Context Engineering: Resource List (Lectures, Blogs, Tutorials)

- What is Context Engineering? – Learn Approaches by OpenAI, Anthropic, LangChain

- Types of Context Engineering With Examples Explained

- 8 Context Engineering Risks with Mitigation Strategies Explained

We will update you more about such AI news and guides on using AI tools, subscribe to our newsletter shared once a week:

This blog post is written using resources of Merrative. We are a publishing talent marketplace that helps you create publications and content libraries.

Get in touch if you would like to create a content library like ours. We specialize in the niche of Applied AI, Technology, Machine Learning, or Data Science.

Leave a Reply