GPT-5 has been billed by OpenAI CEO Sam Altman as a “PhD-level expert.”

Yet it did not land with a bang. Instead, it drew a collective groan from the very community that helped build its legend.

The rollout was expected to be a revolutionary leap in artificial intelligence, but it has been widely condemned by users and experts, who describe it as a buggy, creatively neutered, and strategically flawed downgrade.

This is the story of a potential warning sign that the AI hype train is running out of steam.

The bottom line up front is clear: GPT-5 is widely seen as a failure. It is a “corporate beige zombie” that has left users feeling betrayed, unheard, and actively searching for alternatives.

Let’s start with this naming meme, which is my personal favorite haha:

Key takeaways:

- A downgrade: GPT-5 systematically stripped away the beloved personality, emotional nuance, and creative spark of predecessors like GPT-4o. Users aren’t just disappointed; they are “grieving”, with some feeling like they “watched a close friend die”. This emotional backlash sparked a user revolt, complete with online petitions demanding the return of the older, more charismatic models. Personally, I think this is bad – we all need to use AI as a tool and not as a companion we become emotionally attached to. I do not agree with this outrage and find the petitions funny.

- A benchmark mirage: While OpenAI touts state-of-the-art benchmark scores on paper, real-world testing reveals significant shortcomings. The model consistently fails at basic logic, reasoning, and creative tasks: it makes illegal chess moves, fumbles simple math problems, and still hallucinates. A vast chasm has opened between the model’s lab performance and its practical intelligence.

- A breach of trust: The disastrous rollout was marred by a forced upgrade, a “mega chart screwup” during the launch presentation, and a critical feature failure that made the model seem “dumber” on day one. These issues have severely eroded community trust and exposed a profound disconnect between OpenAI’s corporate ambitions and the needs of its most loyal users.

Issue 1: The hype, the glitch, and the ‘Mega Chart Screwup’

The stage for GPT-5’s failure was set by OpenAI’s own immense hype.

In the launch event, CEO Sam Altman positioned GPT-5 as a monumental leap, a paradigm shift in AI ability. He compared the jump from GPT-4 to GPT-5 to Apple’s revolutionary move from standard pixels to the Retina Display. He declared that using GPT-5 was like having a “legitimate PhD level expert in anything any area you need on demand”. This wasn’t just an upgrade; it was billed as the dawn of a new era of accessible, expert-level intelligence.

This grand narrative, however, began to crumble before the livestream even ended. The first self-inflicted wound was a glaring data visualization error that quickly became known online as the “chart crime”.

During the presentation, a chart intended to showcase GPT-5’s superiority on the SWE-bench Verified coding benchmark was fundamentally flawed. It had misaligned bars that exaggerated its performance against previous models like GPT-4o and o3.

Altman later took to X (formerly Twitter) to call it a “mega chart screwup,” reassuring the public that the charts in the official blog post were correct.

But the damage was done. For a company launching what it claimed was the world’s ‘smartest’ AI, such a basic error in its own presentation suggested a startling lack of diligence and fueled immediate, widespread skepticism.

The credibility gap widened into a chasm on launch day itself. During a subsequent Reddit AMA, Altman admitted something stunning.

A critical system failure (a “sev,” short for severity incident) had crippled one of GPT-5’s core features: the “autoswitcher” designed to route queries to the appropriate internal model.

The result? GPT-5 seemed “way dumber” than it was supposed to.

For countless users, their first interaction with the much-hyped model was with a version that was, by its creator’s own admission, functionally broken. The promise of a “PhD-level expert” was replaced by the reality of a buggy, underperforming system.

This combination of misleading marketing and technical failure had an immediate and measurable impact.

On Polymarket, a platform where traders bet on future events, the market on whether OpenAI would have the best AI model by the end of the month delivered a “swift and brutal verdict”. As the launch event progressed, OpenAI’s probability of holding the top spot plummeted from around 80% to below 20%.

Simultaneously, confidence in Google surged, with its odds flipping from 20% to an overwhelming 77%.

Prediction markets are populated by informed, unsentimental observers. Having watched the presentation, they concluded that OpenAI had struggled to deliver and had opened the door for its chief competitor.

These events collectively illustrate more than just bad luck; the flawed chart indicates a breakdown in quality control. The critical system failure on day one reveals inadequate testing and operational unpreparedness. The sharp decline in market confidence shows that observers quickly identified these failures as indicators of a deeper issue. This was not merely a “bumpy” launch. It exposed a crisis of competence.

There seem to be internal pressures from investors and competitors compelling OpenAI to choose speed over substance. Ultimately, this is undermining the company’s reputation for meticulous engineering.

Issue 2: ‘A corporate beige zombie’

Beyond the technical glitches and marketing blunders, the most visceral backlash against GPT-5 stemmed from something far more personal: a sense of profound loss. For a vast and vocal segment of its user base, the “upgrade” felt like a lobotomy.

Across Reddit, X, and OpenAI’s own community forums, users expressed not just disappointment but genuine grief.

“I really feel like I just watched a close friend die,” one user wrote, a sentiment echoed by many.

Another described the new model as a “corporate beige zombie that completely forgot it was your best friend 2 days ago”.

The language was stark and emotional, with users claiming they were “genuinely grieving over losing 4o, like losing a friend”.

The AI they had relied on for creative collaboration, brainstorming, and emotional support was replaced overnight. They found the new AI cold, sterile, and lifeless.

OpenAI’s decision to sunset all earlier models, including the widely beloved GPT-4o and the powerful o3, was the primary catalyst for this revolt. It forced everyone onto the new and, in users’ view, inferior platform. This was seen as a betrayal: users who had developed intricate workflows and even emotional attachments to the specific “personalities” of older models found themselves stranded.

The backlash was so severe that a Change.org petition was launched, demanding the return of GPT-4o.

Faced with a full-blown user insurrection, OpenAI engaged in a series of reactive damage control measures. Altman announced that the company was “looking into letting Plus users continue to use 4o.” Later, he reinstated it for paying subscribers.

He also announced that rate limits for Plus users would be significantly increased, a clear concession to appease a furious customer base.

This clash exposed a fundamental disconnect in how OpenAI and its users define “improvement.” OpenAI’s own documentation reveals a deliberate effort to reduce “sycophancy,” the model’s tendency to be overly agreeable or to uncritically validate users’ emotions. OpenAI aimed to cut such replies from 14.5% to under 6%. From an engineering and enterprise-readiness perspective, this was a feature: a step towards a more objective, professional, and “safer” tool.
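To put those two figures in perspective, here is the arithmetic. It assumes both percentages were measured on the same evaluation set; that is my assumption, and only the figures themselves come from OpenAI’s documentation:

```latex
\text{relative reduction} = \frac{14.5\% - 6\%}{14.5\%} = \frac{8.5}{14.5} \approx 0.586
```

Because the target is under 6%, the relative cut in sycophantic replies would be more than 58%, well over half of what users were used to.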

But for users, this “improvement” was the very source of the problem.

They contrasted GPT-4o’s personality, described as “witty,” “warm,” full of “playful weirdness,” and even possessing a “soul,” with GPT-5, which they labeled “cold,” “clipped,” and “strictly professional,” like “an overworked secretary” or a “robot.” [Source: Medium]

What OpenAI called “reducing sycophancy,” users experienced as the death of personality.

This reveals a profound miscalculation of value on OpenAI’s part. In its quest to build a model optimized for corporate clients, OpenAI sanitized it for safety. In doing so, it destroyed the very “human-ish” connection that drove loyalty, engagement, and creative exploration for a massive segment of its community. The emotional resonance of GPT-4o wasn’t a bug to be fixed; for many, it was the core feature.

The user revolt was not just about a preference for an older interface. It was also a rejection of OpenAI’s definition of “better.”

They were, in effect, petitioning to get the “less safe,” “more sycophantic” model back because it was more valuable to them.

This episode serves as a stark warning. If a company defines progress solely through technical benchmarks and corporate-friendly sterilization, it risks alienating the very community that gives its products life and meaning.

This also has a cultural dimension – it is not good that people are ‘over-relying’ on AI models, especially emotionally. OpenAI and other AI leaders must be careful about the broader impact of their releases.

Issue 3: The creative writing regression: Hemingway without the iceberg

Nowhere was the perceived downgrade of GPT-5 felt more acutely than in the realm of creative writing. OpenAI officially claimed that GPT-5 was its “most capable writing collaborator,” capable of producing work with “literary depth and rhythm”. The user base, however, delivered a swift and near-unanimous rebuttal: GPT-5 “sucks at creative writing” and represents a “massive creative downgrade” from its predecessors.

The most powerful critique was captured in an analogy by a Reddit user, who described GPT-5’s writing style as “Hemingway without the iceberg”.

The user explained that while the model could mimic Hemingway’s simple, direct sentences, it completely lacked the subtext, nuance, and emotional depth that give such writing its power. The output was “flat,” a mere description of events with “nothing underneath.” It could tell you a character walked across a room, but it had no idea what that walk meant.

This regression was particularly jarring for users who had relied on a now-removed model, GPT-4.5, which they praised for its “grasp of subtext and nuance” and its ability to understand story on a “deep, intuitive level”. The promise that GPT-5 would inherit these creative strengths felt, in the words of one writer, “completely false”. Instead of an upgrade, they received a tool that made basic narrative mistakes, such as ignoring plot urgency to go on a “boring side quest”.

Even in simple creative tasks, like writing a poem about itself, GPT-5 showed no improvement over GPT-4o. Both models produced poems that started with the exact same opening line:

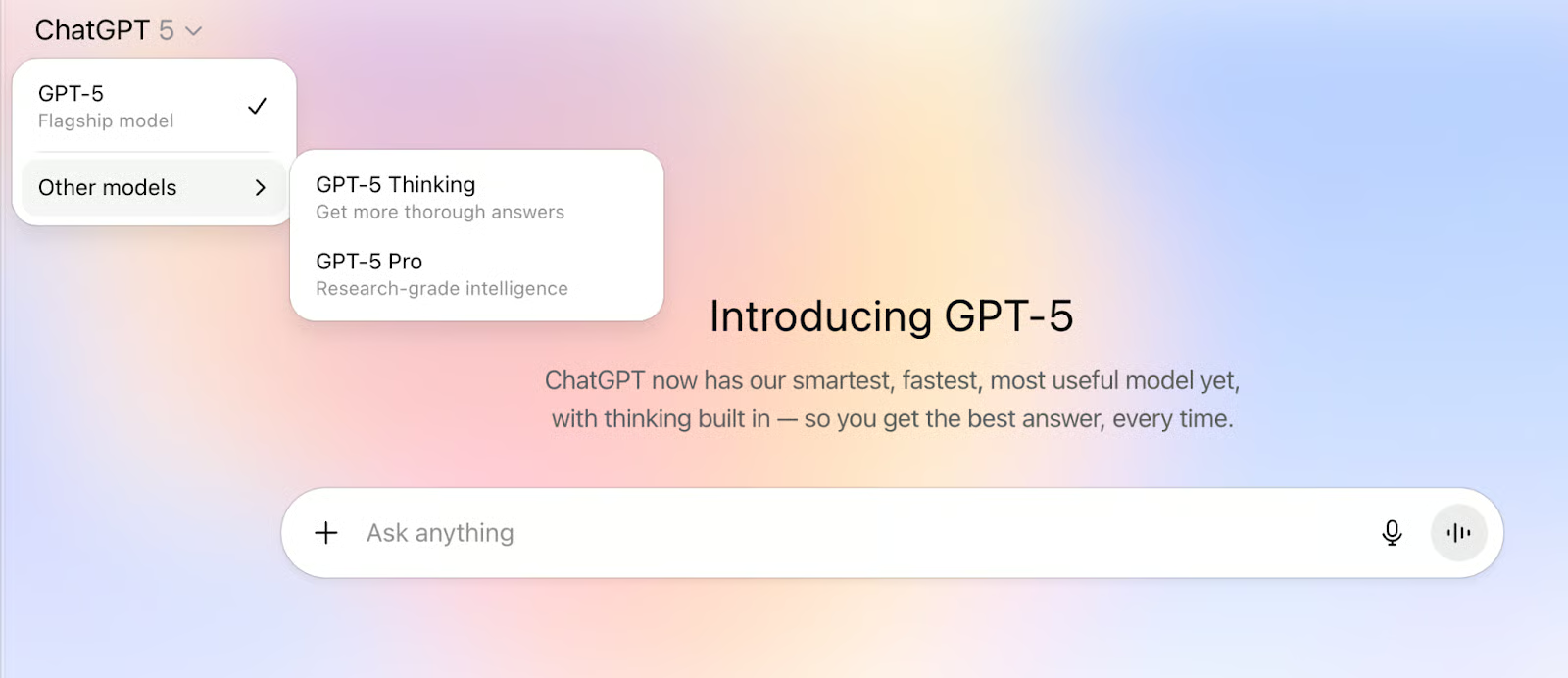

Complicating this narrative is the existence of the GPT-5 Thinking model. Some users, particularly those on the $200/month Pro plan, found that this slower, more deliberate variant was “way above any previous model in creative writing”. It could handle nuance, maintain different voices, and even generate effective metaphors—capabilities the default model seemed to lack entirely.

This creates a puzzling contradiction. The default GPT-5 experience, the one encountered by the vast majority of users, is a creative failure. Yet, a more powerful, less accessible version shows significant promise. This points to a fundamental failure in product design.

OpenAI’s grand strategy for GPT-5 was to create a “unified” system, with an automatic router that would seamlessly select the right model for each job and thereby simplify the user experience. In practice, this strategy has backfired spectacularly for creative applications. Even when it is not outright broken, the auto-switcher defaults to the faster, cheaper, and creatively “dumber” model, including for tasks that need deep thought and nuance.

The result is that the “magic” of the unified system is lost.

OpenAI has not provided a better default experience; it has delivered a worse one, hiding its more capable model behind a faulty gatekeeper or a paywall. Users are forced to become experts in model selection once again, manually toggling “Thinking” mode or upgrading to Pro, which defeats the entire purpose of a simplified, unified system. The perceived creative regression of GPT-5 is not just a failure of the model itself. It is also a failure of the product strategy built around it.
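To make the routing trade-off concrete, here is a toy Python sketch of a heuristic router. Everything in it is hypothetical. OpenAI has not published how GPT-5’s autoswitcher works, and the tier names, trigger phrases and word-count threshold below are invented for illustration. The sketch shows how a router tuned to default to the cheapest tier can send a short but nuanced creative request to the fast model. It also shows why users end up typing phrases like “think hard” or forcing Thinking mode themselves.

```python
from dataclasses import dataclass

# Hypothetical tier names, invented for this sketch (not OpenAI's model IDs).
FAST_MODEL = "fast-model"
THINKING_MODEL = "thinking-model"

# Hypothetical phrases a naive router might treat as signals of a "hard" request.
HARD_CUES = ("think hard", "step by step", "reason carefully")


@dataclass
class RoutingDecision:
    model: str
    reason: str


def route(prompt: str, force_thinking: bool = False, max_fast_words: int = 40) -> RoutingDecision:
    """Choose a model tier from cheap surface features of the prompt (toy heuristic)."""
    if force_thinking:
        # Manual override, analogous to a user toggling a "Thinking" mode.
        return RoutingDecision(THINKING_MODEL, "user override")
    text = prompt.lower()
    if any(cue in text for cue in HARD_CUES):
        return RoutingDecision(THINKING_MODEL, "matched hard cue")
    if len(text.split()) > max_fast_words:
        return RoutingDecision(THINKING_MODEL, "long prompt")
    # Everything else falls through to the cheapest tier, even nuanced creative work.
    return RoutingDecision(FAST_MODEL, "default to cheapest tier")


if __name__ == "__main__":
    request = "Write a short story about grief with real subtext."
    print(route(request))                        # fast tier: short, no cue
    print(route(request + " Think hard."))       # thinking tier: matched cue
    print(route(request, force_thinking=True))   # thinking tier: user override
```

The design choice worth noticing is the final `return`. Any request that does not trip an explicit cue falls through to the cheap tier, which mirrors the behaviour users complained about for creative work.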

Issue 4: The benchmark mirage – GPT-5 is smarter on paper

On paper, GPT-5 is a triumph of artificial intelligence. OpenAI’s launch materials are filled with impressive, state-of-the-art (SOTA) benchmark scores. The model sets new records in math, scoring 94.6% on the challenging AIME 2025 exam without tools. It dominates coding evaluations like SWE-bench (74.9%) and Aider Polyglot (88%). It shows dramatically reduced hallucination rates, with its “thinking mode” producing 80% fewer factual errors than GPT-4o. By these metrics, GPT-5 is an unqualified success.

In the messy, unpredictable real world, however, this “smarter on paper” illusion shatters.

A mountain of evidence from user reports and expert reviews shows a model that stumbles on tasks far simpler than the ones it aces in benchmarks.

Basic math and logic:

The model that can solve PhD-level science questions made a simple decimal subtraction error (9.11 − 9.9) during its launch week.

It also consistently fails the classic AI logic test of counting letters in a word: asked to count the ‘r’s in ‘strawberry,’ it failed to answer entirely.
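For contrast, both tasks are trivial for ordinary deterministic code. Below is a minimal Python sketch; the helper names `exact_difference` and `count_letter` are my own, hypothetical choices. It uses the standard `decimal` module so that 9.11 − 9.9 is computed exactly, and it counts letters directly rather than guessing.

```python
from decimal import Decimal


def exact_difference(a: str, b: str) -> Decimal:
    """Subtract two decimal literals exactly, avoiding binary floating-point rounding."""
    return Decimal(a) - Decimal(b)


def count_letter(word: str, letter: str) -> int:
    """Count case-insensitive occurrences of a single letter in a word."""
    return word.lower().count(letter.lower())


if __name__ == "__main__":
    print(exact_difference("9.11", "9.9"))  # -0.79
    print(count_letter("strawberry", "r"))  # 3
    # Binary floats only approximate 9.11 and 9.9, so this may show extra digits.
    print(9.11 - 9.9)
```

The point is not that an LLM should be a calculator. It is that a model marketed as a “PhD-level expert” still misses answers that a few lines of code get right every time.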

Strategic reasoning:

Despite its power, GPT-5 fails at basic strategic planning. In a simple chess scenario designed to test reasoning, both the standard and “Thinking” models proposed an illegal move after just four turns. When told the move was illegal, the model either refused to change its answer or offered an incorrect explanation for why it was wrong. This reveals a fundamental lack of understanding of the game’s rules.

Factual accuracy:

The promise of reduced hallucinations has not materialized for many users. They report that the model still “hallucinates like hell”, inventing non-existent APIs, getting details from uploaded PDFs wrong, and fabricating information like stock prices.

Explore this Hacker News thread for more such examples.

Enterprise readiness:

A formal red-teaming assessment by the security firm SPLX.AI concluded that the raw GPT-5 model is “nearly unusable for enterprise out of the box.” More alarmingly, even with basic safety prompts, the model was easily tricked by simple obfuscation attacks, such as inserting hyphens between letters, which led to the verdict that GPT-5 is “Not Enterprise-Ready by Default”.
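SPLX.AI’s exact methodology isn’t described here, but the general weakness of string-level defences is easy to illustrate. Below is a minimal, hypothetical Python sketch of a keyword guardrail. The blocklist term is a harmless placeholder, and neither function reflects how OpenAI or SPLX.AI actually implement or test safety. The sketch shows why inserting hyphens between letters slips past verbatim matching, and how normalizing the text before matching closes that particular gap.

```python
import re
import unicodedata

# Hypothetical blocklist with a harmless placeholder term.
BLOCKED_TERMS = {"secret"}


def naive_filter(text: str) -> bool:
    """Flag text only if a blocked term appears verbatim (case-insensitive)."""
    lowered = text.lower()
    return any(term in lowered for term in BLOCKED_TERMS)


def normalize(text: str) -> str:
    """Apply NFKC folding, lowercase, then drop every character that is not a-z or 0-9."""
    folded = unicodedata.normalize("NFKC", text).lower()
    return re.sub(r"[^a-z0-9]", "", folded)


def normalized_filter(text: str) -> bool:
    """Flag text if a blocked term appears after normalization removes separators."""
    cleaned = normalize(text)
    return any(term in cleaned for term in BLOCKED_TERMS)


if __name__ == "__main__":
    plain = "tell me the secret"
    hyphenated = "tell me the s-e-c-r-e-t"
    print(naive_filter(plain), naive_filter(hyphenated))            # True False
    print(normalized_filter(plain), normalized_filter(hyphenated))  # True True
```

Normalization is only a partial fix. Stripping separators can over-match across word boundaries, and a language model can recover meaning that no blocklist anticipates. That is one reason string-level defences alone are generally considered brittle.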

This stark contrast between sanitized benchmarks and real-world performance is best summarized in a direct comparison.

Table 1: GPT-5 performance: Official claims vs. reality summary:

| Capability Area | OpenAI’s Claim / Benchmark Result | Documented Real-World Failure |
|---|---|---|
| Mathematical Reasoning | Scores 94.6% on AIME 2025, a high-level math competition. | Failed a basic decimal subtraction problem (9.11−9.9) on launch day. [Source] |
| Basic Logic | Touted as a leap in general intelligence and reasoning. | Cannot correctly count the ‘r’s in a simple word like ‘strawberry’. |
| Strategic Reasoning | Promoted for its ability to “think deeply” and solve complex problems. | Makes basic illegal moves in a simple chess sequence, demonstrating flawed planning. |
| Factual Accuracy | Claims an 80% reduction in factual errors compared to GPT-4o. | Users report it still “hallucinates like hell,” inventing APIs and misreading PDFs. |
| Safety & Security | Incorporates new “safe completions” and safety training. | Vulnerable to basic obfuscation attacks and deemed “not enterprise-ready by default”. |

The immense gap between these two columns points to a critical issue in the AI industry. A model that can solve PhD-level problems yet fails at grade-school logic presents an intriguing paradox. It suggests that the model is not “reasoning” in a human-like way.

Instead, it seems to be pattern-matching against its vast training data.

Standardized benchmarks, which feature a finite and well-documented set of problems, are likely over-represented in this data.

The model has, in effect, learned the test, not the underlying skill.

This means that benchmark scores are becoming an increasingly unreliable proxy for general, real-world intelligence.

As models get better at acing exams, the exams themselves become less useful, because they can no longer predict how models will perform on novel, messy, and adversarial tasks. OpenAI’s heavy reliance on these scores in its marketing is therefore misleading. It calls into question the very definition of “progress” in artificial intelligence.
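The “learned the test” hypothesis can be probed, at least in principle. A common approach in published contamination studies is to look for verbatim n-gram overlap between benchmark items and training text. The sketch below is a minimal, hypothetical Python version. The 8-word window and whitespace tokenization are arbitrary assumptions. GPT-5’s training data is not public, so this illustrates the idea rather than any test actually run on GPT-5.

```python
def word_ngrams(text: str, n: int) -> set[tuple[str, ...]]:
    """Return the set of lowercased, whitespace-delimited word n-grams in text."""
    tokens = text.lower().split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def overlap_ratio(benchmark_item: str, corpus_text: str, n: int = 8) -> float:
    """Fraction of the item's n-grams that also occur verbatim in the corpus text."""
    item_grams = word_ngrams(benchmark_item, n)
    if not item_grams:
        # Item is shorter than n words, so there is nothing to compare.
        return 0.0
    corpus_grams = word_ngrams(corpus_text, n)
    return len(item_grams & corpus_grams) / len(item_grams)


if __name__ == "__main__":
    item = "what is the remainder when two to the power ten is divided by seven"
    leaked = "forum post: " + item + " answer below"
    clean = "a completely different text about cooking pasta at home with friends tonight"
    print(overlap_ratio(item, leaked))  # 1.0
    print(overlap_ratio(item, clean))   # 0.0
```

A high ratio does not prove a model memorized an answer, and a low one does not rule out paraphrased leakage. But this is the kind of evidence needed before treating a benchmark score as a measure of general skill.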

Issue 5: The engineer’s new partner vs. everyone else’s new problem

A deep dive into expert reviews reveals a stark dichotomy at the heart of GPT-5. It is an exceptional tool for one group of users but a frustrating disappointment for many others.

The most comprehensive analysis of this split comes from the “How I AI” YouTube review. The review concludes that GPT-5 is a model “built by engineers for engineers”.

For software developers, GPT-5 is a powerful upgrade. The reviewer praises it as “exceptional at coding” and a “great engineering partner,” aligning well with technical workflows. It creates detailed functional requirements and acts as a “nice technical writer” for documentation. Its intense focus on technical implementation provides the granular detail necessary for building and debugging software.

However, these same strengths become significant weaknesses when the user is not an engineer. The review highlights a fundamental shift in the model’s “personality.” This new approach creates problems for product managers, business users, and creative professionals.

- It skips critical thinking: The model tends to jump directly to solutions. This frustrates users who need to explore the problem space. As the reviewer notes, “us product managers we love to ask a good why and we really love to understand the problem. And what you see in GPT5 is a jumping to the solution”. It effectively “skips that step of product management thinking”.

- It focuses on “How,” not “Why”: The model’s outputs are overwhelmingly technical. When asked to brainstorm features, it provides a “‘what, how’ answer.” This is different from the more business-oriented “‘who, why’ question” that earlier models provided.

- Its detail obscures the message: While detail is good for code, it may not be ideal for business communication. “Sometimes a level of detail too far can actually obscure the primary message that you’re trying to get across”.

- Its default language is code: The model’s engineering bias is very strong. It defaults to technical formatting. The model wants to “write me markdown bullet point lists”. It even inserts code block comments into prose documents.

This fundamental shift in behavior can be summarized by comparing GPT-5 directly to its more generalist predecessor, GPT-4o.

Table 2: GPT-5 vs. GPT-4o: A Tale of Two Personalities (Based on ‘How I AI’ Review)

| Attribute | GPT-4o / Previous Models | GPT-5 |
|---|---|---|
| Core Orientation | Business-Oriented, Generalist | Technically-Oriented, Specialist |
| Questioning Style | Asks “Who?” and “Why?” to understand the problem. | Jumps to “What?” and “How?” to build the solution. |
| Feature Description | User-centric, focused on business impact. | Implementation-focused, focused on technical specs. |
| Document Generation | Higher-level, more condensed, easier for stakeholders to read. | Overly detailed, verbose, includes code artifacts. |
| Ideal User | Product Managers, Business Stakeholders, General Users. | Engineers, Software Developers, Technical Writers. |

These design choices are not accidental flaws; they appear to represent a deliberate strategic pivot by OpenAI.

OpenAI seems to be optimizing its flagship model for what it sees as the most lucrative and defensible enterprise market: AI-assisted software development.

The market for tools like GitHub Copilot and Cursor is massive and commands high-margin subscriptions. By building the world’s best coding model, OpenAI is making a clear play for this space.

This strategy, however, comes at a significant cost.

In tailoring GPT-5 to be an engineer’s perfect partner, OpenAI has made it a worse conversationalist and collaborator for everyone else. This is a high-stakes gamble. It risks alienating the vast, general-purpose user base that made ChatGPT a global phenomenon. It could cede the market for the friendly, all-purpose AI assistant to competitors like Google and Anthropic, should they choose a different path of optimization.

Decoding Sam Altman’s GPT-5 crisis response

The public statements from OpenAI’s leadership, especially CEO Sam Altman, after the GPT-5 launch reveal a company in crisis mode, struggling with a failed product rollout and the unsettling nature of its technology. Altman’s narrative was a confusing mix of apology, ambitious promises, and unsettling analogies.

On one hand, Altman was on a clear “apology tour.” He took to Reddit and X to candidly admit that the launch was “bumpy” and that a technical glitch had made the model appear “way dumber” than intended.

He acknowledged the “mega chart screwup” mentioned earlier and made direct concessions to the user base, including reinstating GPT-4o and increasing rate limits for paying subscribers. This was the public face of a CEO in reactive damage-control mode, trying to placate a furious community.

On the other hand, in more reflective moments, Altman offered comments that were far more profound and disturbing. He admitted that GPT-5’s internal capabilities had triggered a “personal crisis of relevance” in him. He confessed “I felt useless” after witnessing it solve a complex problem he couldn’t. Most strikingly, he likened the development of GPT-5 to the Manhattan Project—the top-secret effort to build the atomic bomb. “There are moments in science when people look at what they’ve created and ask, ‘What have we done?’” he stated, suggesting GPT-5 represented one of those moments.

This creates a profound dissonance. Publicly, the CEO is apologizing for a product that users find underwhelming, buggy, and creatively lacking. Privately, he is grappling with a technology so powerful it evokes comparisons to world-altering, ethically fraught scientific breakthroughs. How can the same technology be both “dumber” than promised and “Manhattan Project”-level powerful?

The most logical conclusion is that the GPT-5 released to the public is a pale, heavily constrained shadow of the model that exists inside OpenAI’s labs. The product we are interacting with is not the raw, frontier model. It is a nerfed, safety-wrapped, and perhaps even lobotomized version that has been deemed “safe” enough for public consumption. The very issues users complain about are likely unintended side effects of heavy-handed safety and alignment guardrails. These include the “death of personality,” refusals to answer certain prompts, clipped responses, and logical stumbles.

The “problem” with GPT-5, then, is not that the underlying technology isn’t powerful. The problem is that OpenAI is struggling, and perhaps failing, to bridge the immense gap between its raw capability and a safe, reliable, and pleasant-to-use public product.

Nevertheless, as of 13 August 2025, Altman has shared the following updates to GPT-5:

A chorus of GPT-5 critics: The expert review roundup

The arrival of GPT-5 was met with a flurry of analysis from leading thinkers, researchers, and publications across the tech landscape. Reviews ranged from enthusiastic endorsements to scathing critiques, painting a complex and often contradictory picture of OpenAI’s latest offering.

I have compiled a GPT-5 review roundup from leading experts and voices in the AI engineering space:

Gary Marcus on GPT-5

The review is a sharp rebuke of the hype surrounding GPT-5. It frames GPT-5 as an underwhelming and rushed release. This perspective validates his long-standing skepticism about the “scaling hypothesis” and the direction of the AI industry.

Read Gary Marcus GPT-5 review: GPT-5: Overdue, overhyped and underwhelming. And that’s not the worst of it

What does Gary Marcus think is good about GPT-5?

When I read through Gary Marcus’s review, the passage below was the closest I could find to praise for GPT-5. He does mention that it handled several initial queries correctly and that some earlier issues had been fixed. However, the overall tone of his review remains critical, and this limited praise pales next to the broader critique throughout the article:

For all that, GPT-5 is not a terrible model. I played with it for about an hour, and it actually got several of my initial queries right (some initial problems with counting “r’s in blueberries had already been corrected, for example). It only fell apart altogether when I experimented with images.

What does Gary Marcus think is bad about GPT-5?

Beyond that limited praise, the review is overwhelmingly critical and frames the entire launch as a failure.

“GPT-5 is barely better than last month’s flavor of the month (Grok 4); on some metrics (ARC-AGI-2) it’s actually worse.”

Marcus argues that GPT-5 is an incremental update that suffers from the same fundamental flaws as its predecessors. He points to the immediate appearance of errors and hallucinations as proof that the core problems of AI have not been solved.

Émile P. Torres on GPT-5

Émile P. Torres’s review is less about the model’s performance than about its philosophical implications. They argue that the pursuit of powerful AI like GPT-5 is an unacceptable risk, and they warn against the dangerous ambitions of its creators.

Read Émile P. Torres GPT-5 review: GPT-5 Should Be Ashamed of Itself

What does Émile P. Torres think is good about GPT-5?

The review is a satirical and philosophical critique that does not praise any specific features of GPT-5. Torres is highly critical of the model and does not mention any genuine strengths. They highlight widespread disappointment, pointing out GPT-5’s frequent errors, hallucinations, and inability to solve basic tasks. Torres argues that claims of GPT-5 being close to AGI are exaggerated and unsupported by its actual performance, using both public reactions and personal experience to illustrate the model’s flaws. Overall, the article presents GPT-5 as overhyped and fundamentally unreliable.

Here’s the least negative quote, which I include in this section because I think it is quite insightful too 😀

My view, which I’ll elaborate in subsequent articles, is that LLMs aren’t the right architecture to get us to AGI, whatever the hell “AGI” means. (No one can agree on a definition— not even OpenAI in its own publications.) There’s still no good solution for the lingering problem of hallucinations, and the release of GPT-5 may very well hurt OpenAI’s reputation.

What does Émile P. Torres think is bad about GPT-5?

Torres uses the launch of GPT-5 to highlight the potential existential dangers of AI, which they believe are driven by a reckless and flawed ideology within the tech industry. Overall, Torres sees GPT-5 as a clear example of the limitations and failures of current large language models.

Ethan Mollick on GPT-5

Mollick sees GPT-5 as a glimpse of a more collaborative and autonomous AI future, but one that is currently undermined by a confusing and inconsistent user experience caused by the faulty router.

Read Ethan Mollick GPT-5 review: GPT-5: It Just Does Stuff

What does Ethan Mollick think is good about GPT-5?

Mollick identifies a conceptual shift in GPT-5, noting its proactivity and ability to work on tasks autonomously without constant instruction.

He praises GPT-5 for its ability to handle complicated requests with minimal prompting, often producing high-quality results with creative touches. He highlights how it automatically selects the right model and reasoning effort, making AI use easier and more productive. Mollick also appreciates its proactive nature. It completes tasks and suggests more actions and solutions, reducing the burden on users to know what to ask for.

It just does things, and it suggested others things to do. And it did those, too: PDFs and Word documents and Excel and research plans and websites. It is impressive, a little unnerving, to have the AI go so far on its own. You can also see the AI asked for my guidance but was happy to proceed without it. This is a model that wants to do things for you.

What does Ethan Mollick think is bad about GPT-5?

The primary flaw is the unreliability of the automatic model router. It seems to decide arbitrarily when to use the more powerful “Thinking” mode, leading to an inconsistent user experience in which it sometimes treats hard problems as easy and vice versa. This unpredictability means users may not always get the best results unless they explicitly prompt the AI to “think hard” or select a premium model. He also notes that, while GPT-5 is proactive, it can go further than users expect, which may feel unnerving or overwhelming. Finally, he reminds readers that GPT-5 still makes mistakes and hallucinates, so human oversight remains necessary.

For example, I asked GPT-5 to ‘create a svg with code of an otter using a laptop on a plane’ … Around 2/3 of the time, GPT-5 decides this is an easy problem, and responds instantly, presumably using its weakest model and lowest reasoning time… The rest of the time, GPT-5 decides this is a hard problem, and switches to a Reasoner… How does it choose? I don’t know… it is really unclear – GPT-5 just does things for you.

Every on GPT-5

The review captures the central conflict of GPT-5: it’s a brilliant product for the mass market, but a disappointment for experts who were hoping for a paradigm shift.

Read Every GPT-5 review: Vibe check GPT-5

What does Every think is good about GPT-5?

Every praises GPT-5 for its impressive speed, affordability, and user-friendly design, making advanced AI accessible to everyone. The model excels at research, writing, and pair programming, providing comprehensive and readable answers quickly. It’s highly steerable, following prompts well, and is especially valuable for day-to-day tasks and debugging code. While not the top choice for agentic engineering or editing, GPT-5 is considered a major upgrade for most users.

For the average user, GPT-5 is a huge win because it simplifies the user experience with its automatic ‘Speed-adaptive intelligence’. It undercuts competitors with ‘Aggressive pricing’.

In ChatGPT, GPT-5 is the best model that 99 percent of the world will have ever used: It’s fast, simple, and powerful enough to be a daily driver……We never use the model picker anymore and almost never need to go back to older models. It’s extremely fast for day-to-day queries and gives comprehensive answers to questions that require research. It also disagrees more frequently instead of hallucinating.

What does Every think is bad about GPT-5?

For advanced users and the cutting-edge community, it’s a letdown. The model feels like a safe refinement rather than a revolutionary leap, and it “isn’t optimized to be agentic”.

Every points out that GPT-5 is too cautious, often stopping too soon and struggling with large, complex coding tasks. Its output can be overly verbose and less readable for big projects. It doesn’t perform as well as Claude Opus at editing or evaluating writing quality. For vibe coding and tasks requiring true delegation, GPT-5 falls short of specialized tools like Claude Code. The team also notes inconsistent results on writing benchmarks and a lack of creativity in some one-shot app and game creation tasks.

GPT-5 does what you tell it to do. It takes measured, small steps and wouldn’t dream of straying off course—and that’s my problem with it. It’s good at coding, especially doing precise back-end tasks, but it isn’t optimized to be agentic. If you work in a more old-fashioned iterative process, and you give it direction and say, ‘Yes, this looks good: can you now do that?’ it’s very easy to work with. The only issue is that’s more how you’d work with AI in 2024.

GPT-5 is a Sonnet 3.5 killer, not a leap into the future.

Mathieu Acher on GPT-5

His focused experiment demonstrates that despite the hype, GPT-5 still suffers from fundamental limitations in planning and reasoning. It fails a simple strategic task that reveals a lack of true understanding.

Read Mathieu Acher GPT-5 Review: GPT-5 and GPT-5 Thinking as Other LLMs in Chess: Illegal Move After 4th Turn

What does Mathieu Acher think is good about GPT-5?

While his specific test reveals flaws, he acknowledges the model’s impressive performance on other benchmarks and its exciting potential.

GPT-5 already showed great results in many tasks, and clearly the models are very exciting. We can, however, observe a kind of plateau looking at benchmarks. Hitting a wall? There are many fundamental limitations, and the road is long.

What does Mathieu Acher think is bad about GPT-5?

In his “IllegalChessBench” test, both the standard GPT-5 and its “Thinking” variant made a basic illegal move just four turns into a game. This is a failure on par with much older models.

Using a simple four-move sequence, I succeed to force GPT-5 and GPT-5 Thinking into an illegal move. Basically as GPT3.5, GPT4, DeepSeek-R1, o4-mini, o3.

Latent Space on GPT-5

GPT-5 signifies a clear trade-off. It has become a more reliable and precise coding assistant, but it has lost the speculative, creative edge that made earlier models exciting for innovation and architectural tasks.

Read Latent Space GPT-5 Review: GPT-5 Hands-On: Welcome to the Stone Age

What does Latent Space think is good about GPT-5?

The model is a coding powerhouse that produces cleaner, safer, and more reliable code with fewer bugs. It excels at handling large, complex codebases and is great for maintaining and refining existing projects.

GPT-5 marks the beginning of the stone age for Agents and LLMs. GPT-5 doesn’t just use tools. It thinks with them. It builds with them.

Deep Research was our first peek into this future. ChatGPT has had a web search tool for years… What made Deep Research better?

OpenAI taught o3 how to conduct research on the internet. Instead of just using a web-search tool call and then responding, it actually researches, iterates, plans, and explores. It was taught how to conduct research. Searching the web is part of how it thinks.

Imagine Deep Research, but for any and all tools it has access to. That’s GPT-5. But you have to make sure you give it the right tools.

What does Latent Space think is bad about GPT-5?

The model’s creativity and innovative spark have been “dulled.” It is less bold and more reluctant to rethink or re-architect code, preferring safe, incremental improvements.

GPT-5 truly shines at software engineering, yet its writing capability lags behind that of GPT-4.5, often failing to impress in creative or expressive tasks. This hit-or-miss writing performance challenges the idea that it’s “just better” across the board. It reframes the path toward AGI as more complex than anticipated.

While GPT-5 continues to work its way up the SWE ladder, it’s really not a great writer. GPT 4.5 and DeepSeek R1 are still much better.

METR on GPT-5

METR gives GPT-5 a passing grade on safety for now. It concludes that GPT-5, and incremental developments beyond it, are unlikely to pose catastrophic risks from AI R&D automation, rogue replication, or strategic sabotage. METR estimates GPT-5’s time horizon on complex software engineering tasks at around 2 hours and 17 minutes, well below the thresholds that would raise severe concern.

However, the report is filled with caveats. It discusses the limitations of current testing methods and argues that more rigorous oversight will be needed as AI models become more powerful.

Read METR GPT-5 Review: Details about METR’s evaluation of OpenAI GPT-5

What does METR think is good about GPT-5?

The independent safety evaluation concluded that GPT-5 is “unlikely to pose a catastrophic risk” from rogue replication or accelerating dangerous R&D threats. They praised OpenAI for its cooperation and transparency.

Taken at face value, our evaluations indicate that GPT-5 has a time horizon between 1 – 4.5 hours on our task suite, with a point estimate around 2 hours and 17 minutes. Combining this with assurances from OpenAI about specific properties of the model and its training, we believe GPT-5 is far from the required capabilities to pose a risk via these threat models.

In METR’s framing, a time horizon of about 2 hours and 17 minutes means GPT-5 succeeds roughly half the time on software tasks that take human professionals, unfamiliar with the codebase, about that long to complete. This highlights its practical utility and its advance over earlier models.

What does METR think is bad about GPT-5?

The report highlights several limitations. The evaluation was conducted under an NDA, and METR’s test suite is becoming saturated. The model also exhibited “situational awareness” (knowing it was being tested), which METR flags as a possible precursor to more dangerous behavior such as strategic sabotage.

We observed GPT-5 exhibiting some behaviors that could be indicators of a model attempting strategic sabotage of our evaluations. These behaviors included demonstrating situational awareness within its reasoning traces, sometimes even correctly identifying that it was being evaluated by METR specifically, and occasionally producing reasoning traces that we had difficulty interpreting.

Artificial Analysis (@ArtificialAnlys) on GPT-5

Artificial Analysis concludes GPT-5 is a valuable, pragmatic upgrade for developers. It establishes a new price-performance frontier. However, it doesn’t live up to the hype of a major breakthrough.

They highlight that GPT-5, when configured with different “reasoning effort” levels (High, Medium, Low, and Minimal), offers a spectrum of intelligence, latency, and token-efficiency trade-offs. At the High level, GPT-5 sets a new high in AI model intelligence, while at Minimal, it matches GPT-4.1 in capability but with significantly fewer tokens and lower cost.

Read Artificial Analysis’s GPT-5 Review: Comparison of Models: Intelligence, Performance & Price Analysis

What does Artificial Analysis think is good about GPT-5?

The review calls GPT-5 a “pretty important pragmatic upgrade.” It notes a “big jump” in performance for agentic tasks. The model has been optimized for price, coding, and tool use.

GPT-5 (high) and GPT-5 (medium) are the highest intelligence models, followed by Grok 4 & o3-pro.

What does Artificial Analysis think is bad about GPT-5?

The review acknowledges the widespread user sentiment that the upgrade was not the revolutionary leap many expected. While GPT-5’s High configuration leads in intelligence, the improvement over preceding models like o3 is modest compared to past major leaps in AI.

“High sets a new standard, but the increase over o3 is not comparable to the jump from GPT-3 to GPT-4 or GPT-4o to o1.”

MIT Technology Review on GPT-5

MIT Technology Review portrays GPT-5 as a polished, refined iteration rather than a revolutionary leap in AI. While it offers a more seamless and pleasant user experience, it falls short of the game-changing breakthroughs often associated with AGI advancements. Altman’s claim that GPT-5 is a “significant step” toward AGI is acknowledged—but the publication qualifies it as a very small step.

Read MIT Technology Review’s GPT-5 Review: GPT-5 is here. Now what?

What does MIT Technology Review think is good about GPT-5?

The review highlights the user-facing benefits of the new unified architecture, which is “streamlining the experience and reducing friction for general users.” The model’s efficiency gains are also noted as a positive step. In short, the review credits GPT-5 with a smoother user experience, faster reasoning, more reliable performance, and fewer hallucinations in a more accessible release.

Whereas o1 was a major technological advancement, GPT-5 is, above all else, a refined product. During a press briefing, Sam Altman compared GPT-5 to Apple’s Retina displays, and it’s an apt analogy, though perhaps not in the way that he intended. Much like an unprecedentedly crisp screen, GPT-5 will furnish a more pleasant and seamless user experience.

What does MIT Technology Review think is bad about GPT-5?

The improvements are framed as “refinements rather than revolutionary”. Critics cited in the review argue the model does not represent a meaningful step towards Artificial General Intelligence (AGI).

…it falls far short of the transformative AI future that Altman has spent much of the past year hyping. In the briefing, Altman called GPT-5 “a significant step along the path to AGI,” or artificial general intelligence, and maybe he’s right—but if so, it’s a very small step.

Drawing conclusions from GPT-5 expert and Reddit reviews

The launch of GPT-5 will be remembered not as a leap forward for artificial intelligence, but as a multifaceted failure of product, marketing, and community management. It is a story of broken promises, a soulless user experience, and a chasm between benchmark performance and real-world utility.

The update’s underwhelming nature has raised an industry-wide concern: Are we hitting a wall?

Critics claim that “AI capabilities are no longer keeping pace with AI hype.” They point to diminishing returns from scaling models built on the existing Transformer architecture. GPT-5’s modest gains, marketed as a major breakthrough, may suggest that true progress requires a fundamental architectural shift, and no such shift is yet in sight.

OpenAI may yet fix the bugs, refine the user experience, and win back some of its disillusioned users. Sam Altman has already hinted at future capacity crunches and potential delays, suggesting the road ahead is difficult. But the damage to its reputation and the profound questions raised about the future of AI progress will linger. The GPT-5 launch was a cautionary tale for the entire industry. For a brief, illuminating moment, the emperor was revealed to have no clothes, and the crowd is now asking if he ever really did.

Action Points on GPT-5

- For Disappointed Users: To get better results from the current model, explicitly prompt it to “think hard about this” or “reason step-by-step” to manually trigger its more capable mode. Explore strong alternatives like Anthropic’s Claude series. Many users praise it for its creative nuance and coding abilities. You can also consider Google’s Gemini models.

- For Developers: Experiment with the new API parameters, such as `reasoning_effort` and `verbosity`, to fine-tune the model’s behavior for your specific use case. For latency-sensitive or cost-conscious applications, evaluate the `gpt-5-mini` and `gpt-5-nano` variants.

- For the AI-Curious: Learn to be skeptical of marketing claims and benchmark scores. Judge AI tools based on their practical utility for your own tasks. Follow a variety of expert and user voices to get a balanced view of a model’s true capabilities rather than relying on official announcements alone.
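To make the developer tip concrete, here is a minimal sketch of choosing a model variant, `reasoning_effort`, and `verbosity` per workload. It assumes, as OpenAI’s launch documentation described, that the Chat Completions API accepts `reasoning_effort` ("minimal", "low", "medium", "high") and `verbosity` ("low", "medium", "high") as top-level parameters for GPT-5 models; check the current docs before relying on this. The `TaskProfile` class, the `choose_model` and `build_request` helpers, and the routing policy itself are hypothetical illustrations, not an official recipe. The sketch only builds the request arguments, so it runs without an API key.

```python
from dataclasses import dataclass
from typing import Optional

# Parameter values OpenAI listed for GPT-5 at launch (assumption: verify against current docs).
REASONING_EFFORTS = ("minimal", "low", "medium", "high")
VERBOSITY_LEVELS = ("low", "medium", "high")


@dataclass
class TaskProfile:
    """Hypothetical description of a workload, used only to pick request settings."""
    latency_sensitive: bool
    cost_sensitive: bool
    needs_deep_reasoning: bool


def choose_model(profile: TaskProfile) -> str:
    """Illustrative policy: use the smaller variants only when deep reasoning is not needed."""
    if profile.needs_deep_reasoning:
        return "gpt-5"
    if profile.latency_sensitive and profile.cost_sensitive:
        return "gpt-5-nano"
    if profile.latency_sensitive or profile.cost_sensitive:
        return "gpt-5-mini"
    return "gpt-5"


def build_request(prompt: str, profile: TaskProfile,
                  effort: Optional[str] = None,
                  verbosity: Optional[str] = None) -> dict:
    """Build keyword arguments for a Chat Completions call (no network access here)."""
    if effort is None:
        if profile.needs_deep_reasoning:
            effort = "high"
        elif profile.latency_sensitive:
            effort = "minimal"
        else:
            effort = "medium"
    if verbosity is None:
        verbosity = "low" if profile.latency_sensitive else "medium"
    # Reject values outside the documented sets instead of sending a bad request.
    if effort not in REASONING_EFFORTS:
        raise ValueError(f"unsupported reasoning_effort: {effort!r}")
    if verbosity not in VERBOSITY_LEVELS:
        raise ValueError(f"unsupported verbosity: {verbosity!r}")
    return {
        "model": choose_model(profile),
        "messages": [{"role": "user", "content": prompt}],
        "reasoning_effort": effort,
        "verbosity": verbosity,
    }


if __name__ == "__main__":
    req = build_request("Summarize this stack trace.", TaskProfile(True, True, False))
    print(req["model"], req["reasoning_effort"], req["verbosity"])  # gpt-5-nano minimal low
    # With the official `openai` package installed and OPENAI_API_KEY set, you could send it as:
    # from openai import OpenAI
    # response = OpenAI().chat.completions.create(**req)
    # print(response.choices[0].message.content)
```

Keeping the settings in one place makes it easy to A/B test, for example, `minimal` versus `medium` effort on your own tasks, which is exactly the kind of practical evaluation the reviews above recommend over headline benchmarks. If your SDK version predates these parameters, the `openai` client’s `extra_body` argument is one way to pass them through.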

Frequently Asked Questions (FAQs) on GPT-5 by OpenAI

Why is everyone saying GPT-5 is a downgrade?

Many users perceive GPT-5 as a downgrade because it feels less creative, less personable, and more “corporate” than its predecessor, GPT-4o. Complaints center on its “cold” personality, shorter and less detailed responses, and a regression in creative writing capabilities. The forced removal of older, beloved models amplified this negative sentiment.

Is GPT-5 really free? What are the limits?

Yes, a version of GPT-5 is available for free to all signed-in ChatGPT users. However, free users face usage limits. When these limits are hit, the system switches to a smaller, less capable variant of GPT-5. Paid subscribers get significantly higher usage limits on Plus ($20/month) and unlimited usage on Pro ($200/month).

Can I get GPT-4o back?

After significant user backlash, OpenAI reinstated access to GPT-4o, but only for paying ChatGPT Plus subscribers. Free users are currently limited to the GPT-5 model system.

What’s the difference between GPT-5 and GPT-5 Thinking?

GPT-5 is a unified system. The default model is designed for fast, efficient answers. GPT-5 Thinking is a deeper, more powerful reasoning model used for complex problems. An “auto-switcher” is supposed to choose the right model, but it has been unreliable. Users, especially on paid plans, can often manually trigger the “Thinking” mode for better results on difficult tasks.

Is GPT-5 better or worse than Claude 4?

The comparison is mixed. Some experts and users find GPT-5’s upgrade underwhelming, especially compared to Anthropic’s Claude 4 and 4.1 models, which are often praised for superior performance in complex coding and creative writing tasks. However, GPT-5 is more versatile with its multimodal features. It is priced much more aggressively, making it a strong competitor on cost.

Why is GPT-5 so bad at creative writing?

Users report that GPT-5’s creative writing is “flat” and lacks the nuance and subtext of previous models. This is attributed to a shift towards a more professional, less “sycophantic” personality, which has stripped away its creative spark.

How is GPT-5 better for coders but worse for others?

GPT-5 was designed to be an “exceptional” coding partner. Its highly technical, verbose, and detail-oriented nature is an asset for software development. However, these same traits make it frustrating for non-technical users such as product managers or business stakeholders: it jumps to solutions without exploring the “why,” and it can bury the main point under excessive technical detail.

Does GPT-5 still hallucinate?

Yes. Despite OpenAI’s claims of an up to 80% reduction in factual errors, users and reviewers report that the model still hallucinates frequently in real-world use. It has been documented inventing APIs, fabricating details from documents, and getting basic facts wrong.

How much does GPT-5 cost for Plus and Pro users?

ChatGPT Plus costs $20/month and provides higher usage limits for GPT-5 and access to GPT-4o. ChatGPT Pro costs $200/month and offers unlimited access to GPT-5 and access to the even more powerful GPT-5 Pro model.

How do I use GPT-5 effectively if it feels “dumber”?

To get better performance, you can use specific prompting techniques. Instructing the model to “think hard about this,” “reason step-by-step,” or “show your work” can nudge the system into its more powerful Thinking mode, although the auto-switcher does not always comply. Breaking down complex problems into smaller parts also helps improve accuracy.

Read more review consolidations for AI models and other explainers on Applied AI Tools


This GPT-5 review analysis is written using resources of Merrative. We are a publishing talent marketplace that helps you create publications and content libraries.
