Global competition among AI models has shifted dramatically toward cost reduction. DeepSeek models have shown that it is possible to use cheaper chips to deliver better performance.

Recently, new entrants like Alibaba’s Qwen2.5-Max are also making waves with strong outputs in comparison to Claude.

Now, OpenAI is taking notice and joining this race with its latest model, OpenAI o3-mini, which it describes as the most cost-effective model in its ‘reasoning series’.

This development is particularly relevant for developers, researchers, and businesses looking to harness AI for complex problem-solving tasks.

This blog is part of our series covering popular and recent AI models. Today, we help you understand OpenAI o3-mini:

- What is OpenAI o3-mini

- Technical nuances of OpenAI o3-mini in jargon-free language

- How to get started with OpenAI o3-mini for practical applications

Key technical details of OpenAI o3-mini

OpenAI’s o3-mini is part of the o-series, which emphasizes reasoning over traditional language processing. Unlike models that focus solely on generating text, o3-mini is designed to tackle tasks requiring deep analytical thinking.

OpenAI o3-mini architecture

OpenAI has not published o3-mini’s architecture. The description below treats it as a dense transformer, so read it as background on that family of models, not as a confirmed specification.

A transformer is a type of neural network used in natural language processing. The original design pairs an encoder with a decoder: the encoder processes input data, like a sentence, to build a contextual understanding, while the decoder generates the output, like a translated sentence. Multi-head attention lets the model focus on different words in the input at once, which helps it understand the relationships between them.

For example, when translating “The cat sat on the mat,” the model can pay attention to “cat” and “mat” simultaneously, which helps produce a precise translation. Modern models use parts of this design: BERT keeps only the encoder, while GPT-style models keep only the decoder.

“Dense” means the model uses all its parameters for each input token, unlike mixture-of-experts models such as DeepSeek R1, which activate only a subset of their parameters per token. This gives consistent behavior across applications, at the cost of more computation per token.
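To make "uses all its parameters for each input token" concrete, here is a toy sketch contrasting a dense model with a mixture-of-experts model. The sizes and function names are invented for illustration and say nothing about o3-mini's real configuration.

```python
def dense_active_params(total_params: int) -> int:
    """In a dense model every parameter takes part in every token's computation."""
    return total_params


def moe_active_params(shared_params: int, params_per_expert: int,
                      experts_used_per_token: int) -> int:
    """In a mixture-of-experts model only the routed experts run for a given token."""
    return shared_params + params_per_expert * experts_used_per_token


# Hypothetical toy sizes, chosen only to make the arithmetic easy to follow.
dense_total = 1_000
moe_shared, moe_per_expert, moe_num_experts, moe_top_k = 200, 100, 8, 2
moe_total = moe_shared + moe_per_expert * moe_num_experts

print(dense_active_params(dense_total), "of", dense_total)  # 1000 of 1000
print(moe_active_params(moe_shared, moe_per_expert, moe_top_k), "of", moe_total)  # 400 of 1000
```

Both toy models hold the same number of parameters, but the dense one does more work per token because nothing is skipped.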

Reasoning Modes

The model features three adjustable reasoning effort levels: low, medium, and high. This allows users to optimize for speed or accuracy depending on their needs. For example, a low setting provides quick responses suitable for straightforward queries, while a high setting delivers detailed analyses at the cost of increased response time.
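As a rough sketch of how the effort level might be chosen in code, the helper below builds the arguments for a request without sending anything. The `reasoning_effort` field follows OpenAI's Chat Completions API as we understand it at the time of writing; the helper name `build_chat_request` is our own, so check the current API reference before relying on it.

```python
VALID_EFFORTS = ("low", "medium", "high")


def build_chat_request(prompt: str, effort: str = "medium") -> dict:
    """Return keyword arguments for a Chat Completions call to o3-mini."""
    if effort not in VALID_EFFORTS:
        raise ValueError(f"effort must be one of {VALID_EFFORTS}, got {effort!r}")
    return {
        "model": "o3-mini",
        "reasoning_effort": effort,
        "messages": [{"role": "user", "content": prompt}],
    }


# A simple query gets low effort; a proof gets high effort.
quick = build_chat_request("What is 17 * 24?", effort="low")
thorough = build_chat_request("Prove that the square root of 2 is irrational.", effort="high")
print(quick["reasoning_effort"], thorough["reasoning_effort"])  # low high
```

With the official `openai` Python package, a dictionary like this could be passed on as `client.chat.completions.create(**quick)`.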

Functionality Enhancements

o3-mini supports function calling, developer messages, and structured outputs. These features make it easier for developers to integrate the model into applications, which is particularly beneficial in technical fields like mathematics and coding.
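Here is a minimal sketch of what function calling with a developer message could look like, kept offline so it runs without an API key. The tool `get_temperature`, its canned data, and the helper `run_tool_call` are hypothetical. The request layout follows the `tools` format of OpenAI's Chat Completions API as we understand it.

```python
import json

# Hypothetical tool: the name, description, and parameters are invented for this example.
WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_temperature",
        "description": "Return the current temperature for a city in degrees Celsius.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}


def get_temperature(city: str) -> float:
    """Stand-in for a real data source; returns canned values."""
    return {"Paris": 18.5, "Mumbai": 31.0}.get(city, 20.0)


LOCAL_FUNCTIONS = {"get_temperature": get_temperature}


def run_tool_call(name: str, arguments_json: str) -> str:
    """Run the local function the model asked for and return a JSON string result."""
    if name not in LOCAL_FUNCTIONS:
        raise KeyError(f"unknown tool: {name}")
    arguments = json.loads(arguments_json)
    result = LOCAL_FUNCTIONS[name](**arguments)
    return json.dumps({"result": result})


# Request arguments: a developer message sets the rules, and the tool list tells the model what it may call.
request = {
    "model": "o3-mini",
    "messages": [
        {"role": "developer", "content": "Use tools when you need live data."},
        {"role": "user", "content": "How warm is it in Paris?"},
    ],
    "tools": [WEATHER_TOOL],
}

# Simulated tool call, in the shape the model would send back (name plus JSON arguments).
print(run_tool_call("get_temperature", '{"city": "Paris"}'))  # {"result": 18.5}
```

In a real application, the string returned by `run_tool_call` would be sent back to the model in a follow-up message so it can write the final answer.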

OpenAI o3-mini performance metrics

The performance of AI models is often gauged through various metrics that show their capabilities in real-world scenarios. For OpenAI’s o3-mini, key performance indicators include:

Mathematics Performance

In evaluations like AIME 2024, o3-mini matches or surpasses its predecessors depending on the reasoning effort employed. At high effort, it outperforms both o1 and o1-mini models.

PhD-Level Science Evaluation

The model excels in complex subjects like biology and chemistry. It achieves higher accuracy rates than previous versions at both low and high reasoning efforts.

Coding Efficiency

In competitive programming settings (e.g., Codeforces), o3-mini consistently achieves higher Elo scores compared to earlier models, showcasing its enhanced coding capabilities.

These metrics are critical. They demonstrate not only the model’s effectiveness but also its reliability in handling complex tasks. This is a vital factor for users relying on AI in professional settings.

Unique features of OpenAI o3-mini you must know

What distinguishes OpenAI o3-mini from other industry models is its specialized focus on reasoning capabilities.

While many AI models prioritize speed and basic language processing, OpenAI o3-mini integrates advanced reasoning techniques that allow it to:

- Simulate Reasoning: This feature enables the model to pause and reflect before generating responses, akin to human thought processes. This contrasts with traditional models that give immediate outputs without such reflective analysis.

- Deliberative Alignment: A new safety technique that enhances the model’s ability to evaluate user prompts against safety guidelines effectively. This ensures that responses are not only accurate but also safe from potential misuse or harmful content.

Comparative Analysis of OpenAI o3-mini and other industry-leading AI models

When comparing OpenAI o3-mini with other leading AI models like Qwen2.5-Max, DeepSeek R1, and Claude, several distinctions emerge:

| Feature/Model | OpenAI o3-mini | Qwen2.5-Max | DeepSeek R1 | Claude |
|---|---|---|---|---|
| Total Parameters | ~200 billion (unofficial estimate) | ~300 billion (unofficial estimate) | 671 billion | ~175 billion (unofficial estimate) |
| Context Window | 200K tokens | 128K tokens | 128K tokens | 200K tokens |
| Performance | High in STEM tasks | Competitive | Superior in coding | Balanced |
| Reasoning Focus | Strong | Moderate | Moderate | General |

This table highlights how OpenAI o3-mini stands out: it focuses on reasoning and efficiency while maintaining a competitive edge in coding and STEM applications.

4 key advantages of using OpenAI o3-mini

The adoption of OpenAI o3-mini offers several advantages:

Cost Efficiency

OpenAI’s o3-mini is designed to be more affordable than larger models, and than OpenAI’s own earlier reasoning models such as o1-mini, while still delivering high performance. Here’s a cost comparison:

- o3-mini: $1.10 per million input tokens and $4.40 per million output tokens (source).

- o1-mini: $3.15 per million input tokens and $12.60 per million output tokens (source). This is approximately 20 times more expensive than non-reasoning models.

- GPT-4o: About $2.50 per million input tokens and $10 per million output tokens for the ‘everyday tasks’ model.

Thus, o3-mini costs less than half as much to run as GPT-4o (everyday tasks): about 56% less per token at the prices above. It is also 65% less expensive than o1-mini per input token. This focus on cost efficiency allows OpenAI to make advanced reasoning capabilities accessible to a broader audience, including free users of ChatGPT, which is a significant shift from earlier access limitations.
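The comparison can be checked with a few lines of arithmetic. This sketch uses only the per-million-token prices quoted in the list above; the workload size is a made-up example, and actual prices may have changed since publication.

```python
# Prices in US dollars per one million tokens, as quoted in the list above.
PRICES = {
    "o3-mini": {"input": 1.10, "output": 4.40},
    "o1-mini": {"input": 3.15, "output": 12.60},
    "GPT-4o": {"input": 2.50, "output": 10.00},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost in dollars of one workload for the given model."""
    price = PRICES[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1_000_000


def percent_cheaper(model: str, baseline: str, kind: str = "input") -> float:
    """How much cheaper `model` is than `baseline`, as a percentage of the baseline price."""
    return (1 - PRICES[model][kind] / PRICES[baseline][kind]) * 100


# Hypothetical workload: 2 million input tokens and 500,000 output tokens.
for name in PRICES:
    print(f"{name}: ${request_cost(name, 2_000_000, 500_000):.2f}")

print(f"{percent_cheaper('o3-mini', 'o1-mini'):.0f}% cheaper than o1-mini on input")
print(f"{percent_cheaper('o3-mini', 'GPT-4o'):.0f}% cheaper than GPT-4o on input")
```

For this workload the totals come to $4.40 for o3-mini, $12.60 for o1-mini, and $10.00 for GPT-4o.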

Speed

The OpenAI o3-mini model is designed to provide significantly faster response times compared to its predecessor, o1-mini.

According to OpenAI, o3-mini delivers responses that are 24% quicker, with an average response time of 7.7 seconds compared to 10.16 seconds for o1-mini. This improvement in speed is particularly beneficial for users requiring quick and efficient answers in coding, math, and science tasks.
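The 24% figure follows directly from the two average response times quoted above:

```latex
\[
\frac{10.16\,\text{s} - 7.7\,\text{s}}{10.16\,\text{s}}
  = \frac{2.46}{10.16}
  \approx 0.242
  \approx 24\%
\]
```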

Additionally, o3-mini maintains high accuracy while reducing latency, which makes it a valuable tool for developers and enterprises looking to enhance their AI applications efficiently.

Enhanced reasoning capabilities

OpenAI o3-mini delivers clearer and more accurate answers than its predecessor, o1-mini, and reduces major errors by 39% in complex scenarios. Its adjustable reasoning effort levels (low, medium, and high) let users optimize for speed or accuracy based on their specific needs.

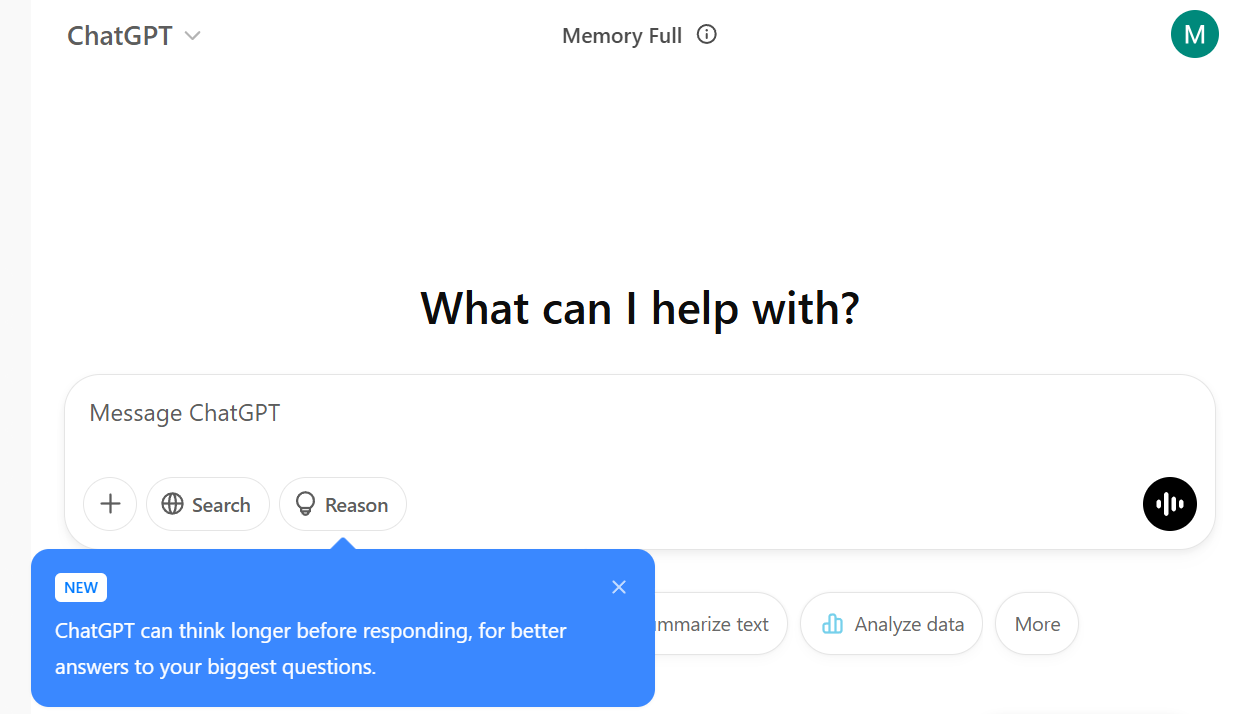

Free access by OpenAI

For the first time, OpenAI has made cutting-edge reasoning technology available to free-tier users through the ‘Reason’ option in ChatGPT. This democratization of access means that users, including students, can benefit from advanced AI tools regardless of their financial resources.

Practical Applications of the OpenAI o3-mini model

OpenAI o3-mini is particularly well-suited for various practical applications:

STEM education

o3-mini can assist students with complex math and science problems by providing detailed explanations and solutions.

It shows an 83.6% accuracy rate on AIME 2024 competition math questions and a 77% accuracy rate on PhD-level science questions, so OpenAI’s o3-mini can help students grasp challenging concepts more effectively. This level of performance can improve learning outcomes in technical subjects.

Software development

o3-mini can streamline coding by offering real-time assistance with programming tasks. Useful features include:

- Function calling: OpenAI’s o3-mini allows developers to integrate external functions and APIs seamlessly. This integration enables the model to perform specific tasks, such as data retrieval or executing commands. This feature streamlines workflows by automating repetitive tasks.

- Structured outputs: The model generates outputs in a well-defined JSON format. This makes it easier for developers to use the results in applications without additional formatting.

- Adjustable Reasoning Levels: Developers can choose from low, medium, and high reasoning effort levels to optimize for speed or accuracy based on the complexity of the task at hand. This flexibility is useful at different stages of development, from rapid prototyping to detailed debugging.
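To illustrate the structured outputs point from the list above, the sketch below defines a JSON schema and parses a reply that follows it. The schema, its field names, and the sample reply are hypothetical. The `response_format` layout follows OpenAI's Structured Outputs documentation as we understand it, so verify it against the current reference.

```python
import json

# Hypothetical schema for a code-review result; the field names are illustrative.
REVIEW_SCHEMA = {
    "type": "object",
    "properties": {
        "summary": {"type": "string"},
        "bugs_found": {"type": "integer"},
    },
    "required": ["summary", "bugs_found"],
    "additionalProperties": False,
}

# Value that could be passed as `response_format` in a Chat Completions request.
RESPONSE_FORMAT = {
    "type": "json_schema",
    "json_schema": {"name": "code_review", "strict": True, "schema": REVIEW_SCHEMA},
}


def parse_review(raw_reply: str) -> dict:
    """Parse a JSON reply and check that the required fields are present."""
    data = json.loads(raw_reply)
    missing = [key for key in REVIEW_SCHEMA["required"] if key not in data]
    if missing:
        raise ValueError(f"reply is missing fields: {missing}")
    return data


# Simulated model reply; a real one would come back as the message content.
sample_reply = '{"summary": "Off-by-one error in the loop bound.", "bugs_found": 1}'
review = parse_review(sample_reply)
print(review["bugs_found"])  # 1
```

Because the reply already matches a known shape, the application can read fields like `bugs_found` directly instead of scraping them out of free text.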

Research Assistance

The OpenAI o3-mini model integrates with search functionalities, allowing users to receive real-time answers with linked sources. This feature enhances the research process by providing immediate access to relevant information and supporting materials.

Getting Started with OpenAI o3-mini

To begin using OpenAI o3-mini:

- Access through ChatGPT: Users can utilize the model via the “Reason” feature available in ChatGPT’s free tier.

- API Integration: Developers can integrate the model into their applications through OpenAI’s API, including the Chat Completions, Assistants, and Batch APIs.

- Experiment with Reasoning Modes: Users should explore different reasoning effort levels. This will help them find the optimal balance between speed and accuracy for their specific needs.
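One way to experiment with the effort levels is to send the same prompt at each level and compare latency and answers. The sketch below is a starting point under a few assumptions: `compare_efforts`, `make_openai_ask`, and `fake_ask` are our own helper names, and the live call assumes the official `openai` Python package with an `OPENAI_API_KEY` set in the environment.

```python
import time
from typing import Callable, Dict, Sequence


def compare_efforts(ask: Callable[[str, str], str], prompt: str,
                    efforts: Sequence[str] = ("low", "medium", "high")) -> Dict[str, dict]:
    """Send the same prompt at each effort level and record latency and answer."""
    results = {}
    for effort in efforts:
        start = time.perf_counter()
        answer = ask(prompt, effort)
        results[effort] = {"seconds": time.perf_counter() - start, "answer": answer}
    return results


def make_openai_ask() -> Callable[[str, str], str]:
    """Build an `ask` function backed by the `openai` package (needs OPENAI_API_KEY)."""
    from openai import OpenAI  # imported lazily so the rest of the sketch runs without it

    client = OpenAI()

    def ask(prompt: str, effort: str) -> str:
        response = client.chat.completions.create(
            model="o3-mini",
            reasoning_effort=effort,
            messages=[{"role": "user", "content": prompt}],
        )
        return response.choices[0].message.content

    return ask


def fake_ask(prompt: str, effort: str) -> str:
    """Offline stand-in so the comparison loop can be tried without an API key."""
    return f"[{effort}] answer to: {prompt}"


for effort, outcome in compare_efforts(fake_ask, "Is 97 prime?").items():
    print(effort, f"{outcome['seconds']:.4f}s", outcome["answer"])
```

Swapping `fake_ask` for `make_openai_ask()` would run the comparison against the live model, which incurs API costs.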

Have you used OpenAI’s o3-mini model? – Let us know your experience in the comments!

OpenAI’s o3-mini represents a significant advancement in AI reasoning capabilities, making it an invaluable tool across various sectors.

If you are an expert in AI models, do share corrections, further explanations, and opinions in the comments.

At Applied AI Tools, we want to make learning accessible. You can discover how to use the many available AI software for your personal and professional use. If you have any questions – email to content@merrative.com and we will cover them in our guides and blogs.


This blog post is written using resources of Merrative. We are a publishing talent marketplace that helps you create publications and content libraries.

Get in touch if you would like to create a content library like ours. We specialize in the niche of Applied AI, Technology, Machine Learning, or Data Science.
