Small language model (SLM) use cases focus on bringing AI into workflows while staying sustainable and efficient. Unlike their larger counterparts, large language models (LLMs), SLMs work with significantly fewer computational resources, while retaining many of the impressive capabilities of LLMs.

This efficiency translates to faster processing speeds and reduced energy consumption. These qualities make them ideal for widespread deployment across various devices—even those with limited processing power!

I have covered what small language models are in detail, including their features, benefits, limitations, and popular SLM examples.

In this guide, I focus on small language model use cases and examples of their real-world applications.

I think learning about SLMs and tracking their progress is important. What began as theoretical research has blossomed into a rich ecosystem of practical tools solving real-world problems. Companies like Nomic AI, Deci, and Microsoft have developed lightweight models that carry out specialized tasks with remarkable effectiveness.

Today, SLMs enable real-time language translation on smartphones. They allow voice assistants to respond instantly and give coding suggestions as you type. Speed, privacy, and efficiency are what make SLMs ideal for such practical applications, and they make SLMs a crucial step toward making AI accessible to everyone.

- Learn key small language model use cases with real-world examples.

- Broad categories of SLM applications.

- FAQs on adopting small language models in the real world.

How do small language models work?

The creation of these efficient SLMs often involves sophisticated model compression techniques applied to larger, more complex models.

These techniques aim to reduce the model’s size while preserving as much of its accuracy as possible. Here are some common small language model optimization techniques:

- Pruning involves removing less critical or redundant parameters from the neural network. This is akin to trimming unnecessary branches from a tree. This allows for more focused growth.

- Quantization reduces the precision of the numbers the model uses. It represents weights and activations with fewer bits, for example 8-bit integers instead of 32-bit floating-point values. This is like using rounded numbers for quick estimations.

- Low-rank factorization decomposes large weight matrices into products of smaller ones. This reduces the number of parameters and simplifies complex operations.

- Knowledge distillation involves training a smaller “student” model to mimic the behavior of a larger, more knowledgeable “teacher” model.
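To make pruning and quantization more concrete, here is a minimal Python sketch on a toy list of weights. It is an illustration under simplifying assumptions, not how any particular SLM was built: it uses unstructured magnitude pruning (zeroing the smallest weights) and symmetric 8-bit quantization with a single scale for the whole list, whereas real toolkits work on tensors and typically prune or quantize per layer or per channel. The function names are my own hypothetical helpers.

```python
# Toy sketch of magnitude pruning and symmetric int8 quantization.
# Assumption: weights are a flat list of Python floats (real models use tensors).


def magnitude_prune(weights, sparsity):
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the stored integers."""
    return [v * scale for v in q]


weights = [0.82, -0.05, 0.31, -0.97, 0.02, 0.44, -0.12, 0.66]
pruned = magnitude_prune(weights, sparsity=0.5)
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(pruned)
print(q)
print(max(abs(a - b) for a, b in zip(weights, restored)))
```

Each quantized weight fits in 8 bits instead of 32, and the reconstruction error per weight stays within half a quantization step, which is the "rounded numbers" trade-off described above.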

These techniques effectively “slim down” the AI recipe, making it faster and easier to execute without sacrificing the core functionality.
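Knowledge distillation and low-rank factorization can also be sketched in a few lines. The distillation loss below follows the widely used soft-target formulation: the KL divergence between temperature-softened teacher and student outputs, scaled by the squared temperature. In practice it is usually combined with an ordinary loss on the true labels. The low-rank helper only counts parameters, to show why replacing an m × n matrix with two thin factors saves memory. Both are illustrative sketches with hypothetical names, not the recipe behind any specific model.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert logits to probabilities, softened by `temperature`."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)  # soft targets from the teacher
    q = softmax(student_logits, temperature)  # student's softened predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


def low_rank_param_counts(m, n, r):
    """Parameters in a full m x n matrix vs. factors A (m x r) and B (r x n)."""
    return m * n, r * (m + n)


teacher = [4.0, 1.0, 0.5]
close_student = [3.5, 1.2, 0.4]
far_student = [0.5, 1.0, 4.0]
print(distillation_loss(close_student, teacher))  # small: student agrees with teacher
print(distillation_loss(far_student, teacher))    # larger: student disagrees
print(low_rank_param_counts(4096, 4096, 256))
```

With m = n = 4096 and rank r = 256, the factorized form needs 2,097,152 parameters instead of 16,777,216, an 8× reduction, at the cost of only approximating the original matrix.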

Small language model application market landscape

Think of small language models as compact, specialized tools, and not as giant, all-purpose factories. They do specific jobs extremely well while using fewer resources.

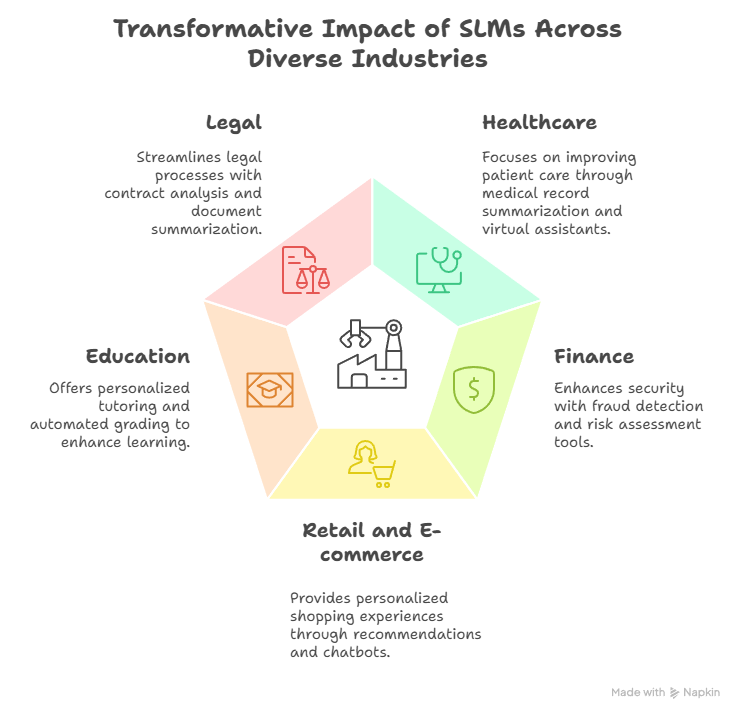

Based on this, we can organize SLM applications into 2 major categories:

- By industry: SLMs serve specific needs in healthcare by processing medical notes. In finance, they analyze market trends. In education, they create personalized learning materials. They also serve many other sectors, which I expand on in later sections.

- By function: SLMs excel at on-device applications (while also preserving privacy). They enhance user experiences through responsive interfaces. They help with coding tasks through intelligent completion. SLMs also augment information retrieval systems through Retrieval Augmented Generation (RAG), which I explain in a later section.

Each small language model use case I describe covers:

- Environment in which they operate

- Primary advantage they give

- Specific industry they serve.

Small language models for on-device applications

An important advantage of small language models is their ability to run directly on the device. This is known as on-device processing.

The primary advantage of this localized approach is reduced latency, meaning faster response times.

When a device processes information locally, there is no delay from sending data to a remote server and waiting for its response. This leads to quicker interactions.

Furthermore, on-device processing enhances privacy.

When data is processed locally, it remains on the user’s device. This reduces the need to send sensitive information to the cloud. This minimizes the risk of data breaches and ensures greater control over our personal information.

Another crucial advantage is offline functionality.

Devices equipped with SLMs can continue to work even without an internet connection, providing uninterrupted access to key features.

Think of using a map application with offline maps. Or consider a translation tool while traveling in an area with no network coverage. SLMs can power such offline capabilities.

Finally, processing data locally reduces the need for constant data transfer. This leads to lower data costs for us! Hence, by reducing reliance on cloud services, we can save on mobile data consumption and internet bandwidth.

Here are some key real-world small language model use cases that are already being adopted:

Using small language models for smart voice assistants

Siri or Alexa may finally become smart! 😀

Smart assistants and voice control are significantly enhanced by the integration of SLMs. These models allow faster response times for voice commands. They improve user privacy by processing voice recognition directly on the device.

- SLMs help understand you better: Because SLMs grasp language more flexibly, you wouldn’t need rigid commands. Imagine telling your lamp, “Make it feel cozy in here.” It would adjust the brightness and warmth appropriately. The lamp figures out what “cozy” means in context.

- SLMs can explain themselves: Confused why the heat suddenly turned off? A thoughtful thermostat could explain its schedule and its reasoning in plain English. This is possible because its onboard SLM can reason about the device’s own state. No more opaque automation!

- SLMs enhance privacy and reliability: The SLM runs locally, so your commands and data stay on your device instead of being constantly streamed to the cloud. This boosts privacy. It also means your device keeps working if your internet goes down, or even if the company shuts down its servers.

Small language model for smart assistants example: Thoughtful Lamp

I found a very interesting paper whose authors built this by creating a ‘state model’. They used automated techniques to train an SLM to understand that model. The SLM was then linked to natural language commands and explanations. They successfully created a ‘thoughtful’ lamp and thermostat running on simple hardware like a Raspberry Pi.

You can read the small language model research paper here: Thoughtful Things: Building Human-Centric Smart Devices with Small Language Models, from the University of Texas at Austin.

This approach is still developing. It points towards a future where our interactions with technology feel more natural and intuitive. It also respects our privacy.

Using small language models for translation on mobiles

Real-time translation on mobile devices is another area where SLMs excel. Their ability to give rapid responses makes them ideal for instant translation of spoken or written language. This ability includes translating a foreign language menu using the phone’s camera or facilitating real-time conversations in different languages.

This on-device translation ability breaks down communication barriers without compromising privacy. This is particularly useful in situations where internet access is limited or expensive.

Small language model for language translation example: NVIDIA’s Nemotron-4-Mini-Hindi-4B

For example, NVIDIA has developed Nemotron-4-Mini-Hindi-4B, a 4-billion-parameter SLM tailored for Hindi, India’s most widely spoken language. The model was trained on a blend of real-world and synthetic Hindi data, alongside English data, to enhance its translation capabilities. Its compact architecture allows deployment on NVIDIA GPU-accelerated systems, enabling rapid and accurate translations without the need for extensive infrastructure.

Building upon this, Tech Mahindra used the Nemotron Hindi model to create Indus 2.0, an AI model focusing on Hindi and its various dialects. Indus 2.0 uses high-quality fine-tuning data to improve translation accuracy. It enables businesses in sectors like banking, education, and healthcare to offer services in multiple languages. By doing this, they can broaden their reach and inclusivity.

The adoption of SLMs like Nemotron-4-Mini-Hindi-4B signals an important shift toward sovereign AI. This shift focuses on developing AI infrastructure based on local datasets that reflect specific dialects, cultures, and practices. This approach enhances translation accuracy and ensures cultural relevance. As a result, it fosters more meaningful and effective communication across diverse populations.

Using small language models for predictive texting on phones

SLMs analyze the context of what you are typing to suggest the next word. They correct errors more intelligently and quickly than traditional methods, which leads to a smoother and faster typing experience. Furthermore, SLMs enable offline functionality in various apps.

For example, a travel guide app can incorporate an offline chatbot powered by an SLM. It can answer common questions about a city even without an internet connection. This ability to work offline extends the reliability of mobile apps: during international travel, you can avoid buying local SIM cards or joining unknown Wi-Fi networks. It also enhances usability in situations with unreliable network access.

Using small language models for Internet of Things

Beyond mobile phones, SLMs are finding increasing applications in the domain of IoT and embedded systems.

By deploying SLMs on IoT devices, organizations can achieve real-time data processing and decision-making at the edge. This reduces latency and enhances operational efficiency. For example, in industrial settings, SLMs can be used for predictive maintenance. They analyze equipment data locally to anticipate failures before they occur. This localized processing minimizes the need for constant cloud communication, leading to faster responses and reduced bandwidth usage.

Small language model for IoT example: AWS IoT Greengrass

For example, AWS IoT Greengrass can be used to deploy SLMs on edge devices; check its telecom use case. This enables functionalities like natural language processing and user profiling directly on IoT devices, allowing for more personalized and autonomous operations.

Another use of SLMs is enhancing smart home devices with natural language understanding. Their compact size allows them to be integrated into various smart home appliances, enabling voice control of lights, thermostats, and entertainment systems. Users can then interact with their homes more intuitively and naturally, moving beyond simple commands to more conversational interactions.

Using small language models for manufacturing automation

SLMs are used in manufacturing and logistics for tasks like equipment monitoring, predictive maintenance, and voice-controlled workflows.

For example, workers on a factory floor can use voice commands to control machinery, and an SLM can analyze equipment monitoring data to generate real-time alerts.

Small language models for manufacturing automation example: Rockwell Automation’s FT Optix Food & Beverage Model

Collaborating with Rockwell Automation, Microsoft added the FT Optix Food & Beverage SLM to the Azure AI catalog. This model assists frontline manufacturing workers by providing AI-driven recommendations and insights into specific processes and machinery. It aims to enhance troubleshooting and operational efficiency on the factory floor.

Using small language models for business process automation

Microsoft is actively integrating Small Language Models (SLMs) into industry-specific applications to enhance automation and efficiency. By collaborating with partners like Bayer and Rockwell Automation, Microsoft has developed specialized AI models tailored to unique sector needs.

Small language model for business automation example: Bayer’s E.L.Y. Crop Protection Model

In partnership with Bayer, Microsoft introduced the E.L.Y. Crop Protection SLM, designed to support farmers in making informed decisions about crop treatments and pesticide applications. This model uses Bayer’s extensive agricultural data, which includes thousands of real-world inquiries about crop protection labels. The model also incorporates regulatory and environmental information. It is scalable to various farm operations and can be customized for regional and crop-specific requirements.

Using small language models for self-driving cars

In automotive applications, SLMs are important for self-driving cars and advanced driver-assistance systems (ADAS).

While large AI models give advanced features, they need significant computing power, raising vehicle costs. Researchers are now developing smaller multimodal AI models that are efficient and need fewer resources to process inputs like images or sensor data.

These models reduce reliance on the cloud, lower hardware costs, and consume less energy, making advanced AI more accessible for autonomous driving.

For example, SLMs can enhance the in-car experience by enabling voice control for navigation and music. They give real-time information on points of interest or traffic, ultimately boosting convenience and safety.

Small language model for vehicle automation example: Cerence’s CaLLM Edge

Cerence, in partnership with Microsoft, has developed CaLLM Edge, an embedded Small Language Model (SLM) designed to enhance in-car automation. Built upon Microsoft’s Phi-3 small language model family and fine-tuned with Cerence’s extensive automotive dataset, CaLLM Edge features 3.8 billion parameters and a 4k context size. This compact design allows seamless integration into vehicle head units. It enables drivers to control functions like navigation and climate using natural language commands.
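A quick back-of-the-envelope calculation shows why a 3.8-billion-parameter model is a realistic fit for embedded hardware. The sketch below counts only the memory needed to store the weights at different numeric precisions. It ignores the context cache, activations, and runtime overhead, and it does not assume anything about which precision Cerence actually uses.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Approximate memory for the model weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


params = 3.8e9  # parameter count stated for CaLLM Edge
for bits in (32, 16, 8, 4):
    print(f"{bits:>2}-bit weights: {weight_memory_gb(params, bits):.1f} GB")
```

At 16-bit precision the weights alone take about 7.6 GB, while 4-bit quantization brings them down to about 1.9 GB, which is why compression matters so much for in-car and other on-device deployments.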

CaLLM Edge supports both embedded-only and hybrid deployments. In embedded mode, it operates independently without cloud connectivity, ensuring data privacy by processing information within the vehicle. The hybrid approach combines on-device processing with cloud capabilities, offering flexibility and enhanced functionality.
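The difference between the embedded-only and hybrid modes can be pictured as a routing decision. The following Python sketch is a hypothetical policy of my own, not Cerence's implementation: vehicle-control requests (an assumed set of intents) and any request made while offline stay on the device, and only open-ended requests go to the cloud, and only when a connection exists and hybrid mode is enabled.

```python
# Hypothetical set of vehicle-control intents handled entirely on-device.
LOCAL_INTENTS = {"navigation", "climate", "media"}


def route(intent: str, online: bool, mode: str = "hybrid") -> str:
    """Decide whether a request is served 'local' (embedded SLM) or 'cloud'.

    A hypothetical policy for illustration, not Cerence's implementation.
    """
    if mode not in ("embedded", "hybrid"):
        raise ValueError(f"unknown mode: {mode}")
    if mode == "embedded":
        return "local"  # embedded-only: data never leaves the vehicle
    if intent in LOCAL_INTENTS or not online:
        return "local"  # core controls and offline requests stay on-device
    return "cloud"  # open-ended requests use the cloud when connected


print(route("climate", online=True))
print(route("restaurant_search", online=True))
print(route("restaurant_search", online=False))
print(route("restaurant_search", online=True, mode="embedded"))
```

Keeping the local path as the default means the assistant degrades gracefully: losing connectivity only removes the cloud-backed extras, never the core controls.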

By integrating CaLLM Edge, automakers can give drivers advanced AI-powered experiences. These experiences are reliable, private, and efficient thanks to small language models. All this, regardless of connectivity constraints!

Using small language models for on-device document assistance

Dealing with lengthy documents on your phone can be cumbersome.

Wouldn’t it be great if your device could instantly summarize articles, answer questions about a report, or suggest relevant queries?

Well, LLMs can already do this.

But what if you could get this service without sending your data to the cloud?

This is becoming possible thanks to advances in small language models (SLMs) for engaging with documents.

Small language models for document analysis example: SlimLM

Researchers are developing SLMs for exactly this, including the SlimLM series described in recent research.

You can read the small language model research paper here: SlimLM: An Efficient Small Language Model for On-Device Document Assistance.

These models are specifically designed for document assistance tasks directly on mobile devices. Larger models often run in the cloud; in contrast, these SLMs are optimized to operate within the memory and processing constraints of smartphones.

How does it work?

These specialized SLMs are trained on vast amounts of text and then fine-tuned on datasets specifically built for document-related tasks. This training enables them to execute functions like:

- Summarization: Quickly generate concise summaries of long texts.

- Question Answering: Find and give answers to your questions based on the document’s content.

- Question Suggestion: Propose relevant questions you might want to ask about the document.

The key advantage is that all this processing happens on your device.

This approach significantly enhances user privacy because your documents and queries don’t need to leave your phone. It also means you can use these features without an internet connection and avoid the potential costs of cloud-based AI services.

These on-device SLMs can effectively handle reasonably long documents (hundreds of tokens) and perform comparably to other models of similar size. This offers a practical balance between capability and efficiency on current mobile hardware. While larger SLM variants offer more power, even smaller versions prove effective for many common tasks. The result is smarter, more private, and more responsive document interaction, all right in the palm of your hand!

Use of small language models for privacy-focused enterprises

SLMs are designed to operate efficiently on local devices or on-premises servers, minimizing reliance on external cloud services. This localized processing significantly reduces the risk of data breaches, because sensitive information remains within the organization’s controlled environment, helping prevent unauthorized access. Additionally, the compact nature of SLMs allows for targeted training on specific datasets, enhancing both performance and security.

Small language models for enterprises example: SoftBank and Aizip

A notable example I found is the collaboration between Aizip, Inc. and SoftBank Corp., resulting in a customized SLM integrated with a Retrieval Augmented Generation (RAG) system. This solution operates entirely on an iPhone 14, enabling SoftBank employees to access internal documents securely without cloud connectivity. Remarkably, it addressed 97% of employee inquiries effectively, matching the response quality of larger cloud-based models.

Small language models for enterprises example: Aporia Guardrails

Similarly, Aporia Guardrails places SLMs between chatbots and users to intercept inaccurate or inappropriate responses and strengthen privacy controls. This technology helps prevent manipulation of AI systems and ensures that sensitive data is not exposed during interactions.
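Architecturally, a guardrail like this sits between the chatbot and the user and inspects each draft reply before it is shown. The sketch below illustrates only that interception pattern and is not Aporia's API: the checks are simple regular expressions for email addresses and card-like numbers, standing in for the trained small model a real guardrail would use, and all names are hypothetical.

```python
import re
from typing import Callable

# Stand-in checks; a real guardrail would use a trained (small) model, not regexes.
SENSITIVE_PATTERNS = [
    re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.]+\b"),  # email addresses
    re.compile(r"\b(?:\d[ -]?){13,16}\b"),       # card-like runs of 13-16 digits
]


def violates_policy(text: str) -> bool:
    """Return True if the text matches any sensitive-data pattern."""
    return any(p.search(text) for p in SENSITIVE_PATTERNS)


def guarded_reply(chatbot: Callable[[str], str], user_message: str,
                  fallback: str = "Sorry, I can't share that.") -> str:
    """Intercept the chatbot's draft reply and block it if the check fails."""
    draft = chatbot(user_message)
    return fallback if violates_policy(draft) else draft


# Usage with stand-in chatbots (lambdas) instead of a real model.
print(guarded_reply(lambda m: "Our store opens at 9 am.", "When do you open?"))
print(guarded_reply(lambda m: "Contact jane.doe@example.com for details.", "Who do I email?"))
```

Because the checker is a separate component, it can be updated or tightened without retraining the chatbot itself, which is part of the appeal of putting a small, fast model in this position.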

TIME listed it as one of the best inventions of 2024; read more here: Keeping Chatbots in Check

Adopting SLMs allows enterprises to harness AI’s benefits without compromising on data privacy.

Organizations that deploy models which process information locally can more easily comply with stringent data protection regulations. This also builds trust with stakeholders. Furthermore, the efficiency of SLMs translates to reduced operational costs and enhanced system performance.

Small language models for improving user experience

Beyond their efficiency, small language models (SLMs) enhance human interaction with technology by understanding nuanced language and responding to its subtleties instead of relying on rigid commands. Here are some small language model use cases that focus on end-user experience:

Using small language models for conversational AI

A key area where SLMs improve user experience is conversational AI and chatbots, where they make these tools accessible to small businesses. Their cost-effectiveness and low latency enable engaging customer service, technical support, and virtual assistants.

SLMs can be fine-tuned to specific knowledge bases, providing quick, accurate answers to common questions. They offer faster response times and lower computational costs compared to large language models (LLMs).

Example of small language model for conversational AI use case: Mistral-7B for breast cancer decision support

A compelling example I found uses an open-source small language model (Mistral-7B) for breast cancer decision support. Rather than relying on vast general training data, the researchers fine-tuned the model on specific medical guidelines related to breast cancer treatment. This approach significantly improved the chatbot’s ability to answer clinical questions accurately and in line with current protocols.

The study tested the chatbot’s responses against a benchmark of breast cancer care questions. Results showed that the fine-tuned SLM provided better evidence-based answers compared to general-purpose models like GPT-3.5 or GPT-4, particularly in clinical decision-making contexts.

This research highlights how domain-specific tuning of SLMs can lead to high-performing, explainable, and locally deployable AI tools. These models not only improve user trust but also reduce risks linked with “hallucinated” responses—common in general-purpose LLMs.

Using small language models for personalization

SLMs allow for improved personalization because they can be customized to give contextual and precise information. SLM-based assistants can remember user preferences and past interactions to offer more tailored support.

Example of small language model for personalization: Palona AI

Palona AI, a startup founded by former leaders from Google and Meta, exemplifies the effective use of SLMs in personalization.

Palona develops AI-driven customer support agents that embody the unique brand personality of each enterprise. For example, an electronics retailer might deploy a “wizard” persona, while a pizza shop could introduce a “surfer dude” character. These agents handle tasks like processing orders, addressing inquiries, and suggesting products, while keeping a conversational tone that aligns with the brand’s identity. Many similar persona-based startups are emerging.

The success of Palona’s approach lies in the integration of SLMs with high emotional intelligence (EQ). By training models on principles from human sociology, these AI agents can engage in interactions that feel authentic and human-like. This level of personalization fosters stronger customer relationships and enhances overall satisfaction.

Implementing such SLM-based solutions is accessible even for non-technical businesses. Palona’s system can be integrated into websites, mobile apps, or phone lines, providing tailored responses across various communication channels. Early adopters include brands like Wyze and Pizza My Heart, which have reported improved customer engagement and increased sales conversions. This demonstrates the tangible benefits of SLM-driven personalization; learn more on VentureBeat.

Small language models for specific industries

Now, let’s focus on industry-wise application of small language models.

How is the finance industry using small language models today?

SLMs focus on domain-specific data, allowing for more precise and relevant outputs in financial contexts. This specialization enables them to comprehend complex financial terminology, which improves tasks like invoice processing, payment reconciliation, and compliance checks.

As a result, the banking and finance industry can benefit from small language models in the following ways:

Enhanced data privacy

Operating SLMs on in-house infrastructure ensures that sensitive financial data stays within the organization’s control. This addresses critical privacy and compliance concerns and is particularly beneficial for institutions navigating stringent regulatory environments.

Operational efficiency

By streamlining processes and reducing the need for extensive human intervention, SLMs contribute to increased operational efficiency. Financial institutions can automate routine tasks, allowing professionals to focus on strategic decision-making.

Risk and compliance management

Private SLMs offer a controlled environment for managing risk and compliance. They give accurate, context-aware insights that support adherence to financial regulations. This ability is crucial for FinTech companies aiming to maintain robust compliance frameworks.

Example of small language models in BFSI industry: Sarvam AI and Infosys

Infosys and Sarvam AI have collaborated to develop two small language models (SLMs), Infosys Topaz BankingSLM and Infosys Topaz ITOpsSLM, aimed at enhancing AI capabilities in banking and IT operations, respectively.

Using NVIDIA’s AI stack, Sarvam AI processed over 2 million pages of data. They created these models, which seamlessly integrate with Infosys’ enterprise applications. These SLMs have demonstrated superior performance and outperform comparable models of similar size. These models are setting new standards for efficiency and precision in enterprise AI solutions.

Using small language models in education industry

In the field of education, SLMs offer opportunities for personalized learning and improved efficiency. Key areas of automation with SLM include:

- Personalized tutoring that adapts lessons to individual student needs.

- Automated grading and feedback on assignments like essays, freeing up educators’ time.

- Generating educational content like practice questions and summaries.

- Supporting language learning with real-time feedback on pronunciation and grammar.

- Managing student data and automating the repetitive workflows around it.

These applications give students more tailored and effective learning experiences that account for differences in learning abilities.

Examples of using small language models for education: Khan Academy and Microsoft’s Khanmigo chatbot

An exemplary application of small language models in education involves Khan Academy’s AI-powered assistant, Khanmigo, which gives personalized tutoring and support for students and educators. Through its partnership with Microsoft, Khan Academy is exploring Microsoft’s Phi-3 small language models to improve Khanmigo’s math tutoring. The tool offers tailored assistance, helping learners grasp complex concepts and allowing teachers to streamline lesson planning and student engagement.

Initiatives are underway to teach students how to build their own small language models, fostering a deeper understanding of AI and its applications. By engaging in hands-on projects, students develop critical thinking and technical skills that prepare them for future technological landscapes. Learn more in MIT Technology Review.

Using small language models in legal and compliance industry

The legal industry is also exploring the benefits of SLMs for various tasks.

- Automate contract analysis, allowing for quick identification of key clauses and potential risks.

- Handle legal research by efficiently searching through vast amounts of legal documents.

- Summarize documents to create concise summaries of lengthy legal texts.

- Assist with legal writing by suggesting appropriate language for legal documents.

These applications can significantly improve efficiency and accuracy in the legal profession by automating time-consuming tasks.

Well, LLMs can also do all of the above tasks, as I have covered elsewhere on this blog.

So, why bother with using small language models for legal workflows?

A significant advantage of SLMs is their ability to operate within an organization’s secure environment. This reduces the need to send sensitive information to external servers. This localized processing ensures that confidential client data remains protected, aligning with stringent legal industry standards.

Moreover, SLMs offer enhanced explainability and transparency. This is crucial in the legal field where understanding the rationale behind AI-generated outputs is essential. Their streamlined architecture allows for easier auditing and compliance with regulatory requirements.

Example of small language model adoption in legal industry – JuriBERT

For example, I came across JuriBERT, a family of BERT-based language models tailored for French legal text. To learn what BERT is, check out the FAQs at the end of this blog.

The authors show that smaller BERT models (like the ones in JuriBERT) can perform as well as larger models, and can even outperform generic models on specific tasks.

This is because domain-specific language, like legal French, has more predictable and constrained linguistic patterns. These patterns allow smaller models to learn efficiently without needing massive capacity.

JuriBERT is designed to help legal professionals with more precise text understanding in their domain, enhancing tasks like legal document classification and information retrieval.

The idea builds on earlier work like LegalBERT-Small, which also showed that a reduced-size model could outperform larger ones on legal NLP tasks. The key factor is training on the right data.

Small language models for coding and software development

SLMs give faster inference times and reduced latency, making them ideal for real-time coding assistance and integration into development environments. Their smaller footprint allows for deployment on local machines, enhancing data privacy and security—a critical consideration in enterprise settings.

Additionally, SLMs can be fine-tuned to specialize in specific programming languages or frameworks. This results in more accurate and contextually relevant code suggestions.

Small language model example for coding: Alibaba’s Qwen2.5-Coder

A notable example is Alibaba’s Qwen2.5-Coder, a family of models (from 0.5 billion to 32 billion parameters) designed to help developers by offering code completions and recommendations. Despite its relatively modest size, the largest Qwen2.5-Coder model delivers coding performance comparable to GPT-4o, demonstrating the efficacy of well-trained SLMs in coding tasks.

Small language model for coding example: PerfRL framework

The PerfRL framework integrates SLMs with reinforcement learning to enhance code optimization. This approach enables the model to learn from environmental feedback, such as unit test results, leading to more efficient and reliable code improvements. PerfRL has been shown to achieve results on par with state-of-the-art models while requiring fewer computational resources.

Learn more by reading the SLM research paper: PerfRL: A Small Language Model Framework for Efficient Code Optimization

Retrieval Augmented Generation (RAG) with small language models

Retrieval Augmented Generation (RAG) is a technique for improving language models’ capabilities. It retrieves relevant documents or data from external sources and then incorporates this information into the language model’s output generation process. This fusion allows AI systems to produce responses that are not only contextually rich but also grounded in factual data.

In essence, it’s like providing the language model with relevant reference materials before it formulates an answer to a query.

The primary advantage of RAG is its ability to mitigate issues like misinformation. It also reduces hallucinations that often plague standalone generative models.

For this, small language models can be effectively used as the generation component within a RAG system. The retrieval component fetches information from the external source, and the SLM then uses it to construct a more accurate and contextually appropriate response.
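The retrieve-then-generate flow can be sketched in plain Python. This is a minimal illustration under stated assumptions: bag-of-words cosine similarity stands in for the embedding-based vector search a production system would typically use, and `generate` is a hypothetical placeholder for whatever small language model runtime you call. The structure, retrieving the top-k passages and then asking the SLM to answer only from them, is the part that carries over.

```python
import math
import re
from collections import Counter
from typing import Callable, List


def tokenize(text: str) -> List[str]:
    """Lowercase word tokens (a crude stand-in for a real tokenizer)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: List[str], k: int = 2) -> List[str]:
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    return sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)[:k]


def build_prompt(query: str, passages: List[str]) -> str:
    """Put the retrieved passages in front of the question."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {query}\nAnswer:"


def rag_answer(query: str, docs: List[str], generate: Callable[[str], str], k: int = 2) -> str:
    """Retrieve relevant passages, then let the (small) generator answer from them."""
    return generate(build_prompt(query, retrieve(query, docs, k)))


docs = [
    "Employees get 20 days of paid leave per year.",
    "The office cafeteria opens at 8 am.",
    "Expense reports are due on the 5th of each month.",
]
# Echo the prompt instead of calling a real model, to show what the SLM would see.
print(rag_answer("How many days of paid leave do employees get?", docs, generate=lambda p: p, k=1))
```

Because the SLM only has to read a few short retrieved passages rather than memorize the whole knowledge base, a smaller model can often answer well in a focused domain.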

Since they are smaller and need fewer computational resources, SLMs can process the retrieved information and generate responses quickly.

There are specific use cases where SLMs are often a better choice for RAG than larger models. When the knowledge base is focused and manageable, such as a company’s internal documentation or a specific set of research papers, an SLM fine-tuned on that particular data can excel. It can give accurate and fast answers by leveraging the retrieved information within its specialized domain. This approach offers a balance between efficiency and accuracy for targeted knowledge retrieval and generation.

Impact on answer quality when using RAG with SLM:

The benefits of SLMs are clear. However, it is crucial to assess their impact on the quality of responses generated within RAG systems.

I came across this small language model research paper: The Impact of Quantization on Retrieval-Augmented Generation: An Analysis of Small LLMs

When SLMs are appropriately fine-tuned and integrated with effective retrieval mechanisms, they can perform comparably to their larger counterparts, particularly on specific tasks. Nonetheless, certain considerations must be addressed:

- Contextual limitations: Because they have fewer parameters, SLMs may struggle to understand and answer highly complex or nuanced queries. Ensuring that the retrieval component supplies comprehensive and relevant context can mitigate this limitation.

- Knowledge cut-off: SLMs might not reflect the most recent information if they are not regularly updated. Incorporating dynamic retrieval approaches that access up-to-date external data sources can help keep generated content relevant.
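Both mitigations above come down to what the retriever hands the SLM. As a hedged sketch, one simple approach is to drop chunks from sources older than a cut-off date, then greedily fill the model's limited context window with the most relevant remaining chunks. The `Chunk` type, the word-count budget (a rough proxy; real systems count tokens with the model's tokenizer), and the lease snippets are all illustrative assumptions.

```python
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass
class Chunk:
    text: str
    score: float   # relevance score from the retriever (higher is better)
    updated: date  # last-modified date of the source document


def pack_context(chunks: List[Chunk], budget_words: int, not_before: date) -> List[Chunk]:
    """Drop stale chunks, then greedily fill the SLM's context budget by relevance."""
    fresh = [c for c in chunks if c.updated >= not_before]
    fresh.sort(key=lambda c: c.score, reverse=True)
    packed: List[Chunk] = []
    used = 0
    for c in fresh:
        n = len(c.text.split())  # word count as a rough proxy for tokens
        if used + n <= budget_words:
            packed.append(c)
            used += n
        # otherwise skip it and keep trying smaller, lower-ranked chunks
    return packed


chunks = [
    Chunk("Rent escalates 3% annually per the 2024 lease.", 0.90, date(2024, 5, 1)),
    Chunk("Rent escalates 2% annually per the 2019 lease.", 0.85, date(2019, 3, 1)),
    Chunk("Tenant is responsible for HVAC maintenance and filter replacement every quarter.", 0.60, date(2024, 6, 1)),
]
for c in pack_context(chunks, budget_words=12, not_before=date(2023, 1, 1)):
    print(c.text)  # prints only the 2024 lease chunk
```

The stale 2019 clause is filtered out even though it scores highly, and the 12-word budget leaves room for only the top fresh chunk; raising the budget to 20 words would admit the HVAC chunk as well.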

Small language model with RAG example: Shorenstein Properties and Egnyte

Shorenstein Properties employs RAG with small language models (SLMs) to automate file tagging and to extract key insights from lengthy property documents, which boosts decision speed and accuracy. Egnyte integrates SLMs into its AI system to enhance document search, improve compliance, and streamline data governance.

These lightweight models help organizations efficiently manage large volumes of unstructured data. They do so without the heavy resource demands of LLMs. This makes RAG faster, more affordable, and highly effective for enterprise-scale knowledge management.

Frequently Asked Questions (FAQs) on small language model use cases

1. When to use a small language model?

Use a small language model (SLM) when you need fast, cost-effective, and privacy-friendly AI solutions for specific tasks. SLMs are ideal for edge devices, offline environments, or scenarios with limited computational power. These include chatbots, on-device assistants, and industry-specific tasks.

2. What are SLM parameters?

SLM parameters are the numerical values (weights) that the model learns during training. They define how the model processes and generates language. SLMs typically have millions to a few billion parameters, which makes them smaller and more efficient than large language models like GPT-4. OpenAI has not disclosed GPT-4’s parameter count, but it is widely believed to be in the hundreds of billions or more.
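Parameter count translates directly into memory, which is a big part of why SLMs fit on phones and edge devices. A rough rule is memory ≈ number of parameters × bytes per parameter, and quantization (the subject of the paper cited earlier) lowers the bytes per parameter. The sketch below covers the weights only; activations, the KV cache, and runtime overhead add more on top, so treat the numbers as lower bounds.

```python
# Approximate bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Memory for the weights alone, in decimal GB (1 GB = 1e9 bytes).

    Activations, the KV cache, and runtime overhead are not included.
    """
    return num_params * BYTES_PER_PARAM[precision] / 1e9


for name, params in [("DistilBERT", 66e6), ("BERT-base", 110e6), ("7B model", 7e9)]:
    for precision in ("fp32", "fp16", "int4"):
        print(f"{name:10} {precision:5} {weight_memory_gb(params, precision):7.2f} GB")
```

For example, a 7B-parameter model needs about 14 GB for its weights at fp16 but about 3.5 GB at 4-bit precision, while BERT-base needs only about 0.44 GB even at full fp32 precision.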

3. Is BERT a small language model?

BERT is not usually described as a small language model, though the boundary is blurry: BERT-base has 110 million parameters, which is small by today’s standards and within the range given above. Distilled versions like DistilBERT (66M parameters) are more commonly cited as SLM examples.

4. What is the full form of BERT?

BERT stands for Bidirectional Encoder Representations from Transformers. It is a transformer-based model developed by Google and is used to understand the context of words in search queries and across a wide range of natural language understanding tasks.

5. How to train an SLM?

To train a small language model, you need a curated dataset, a model architecture (such as a small transformer), training hardware (GPUs or TPUs), and a deep learning framework such as PyTorch or TensorFlow. The model is typically pretrained on a broad text corpus and then fine-tuned on domain-specific data using techniques like supervised learning or reinforcement learning.
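Real SLM training uses a neural network in PyTorch or TensorFlow, but the core idea (a language model learns next-word probabilities from a curated dataset) can be shown with a standard-library toy. The sketch below is a count-based bigram model, deliberately simplified and not a transformer; the tiny corpus and function names are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, List


def train_bigram(corpus: List[str]) -> Dict[str, Dict[str, float]]:
    """Estimate P(next_word | word) by counting adjacent word pairs."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = ["<s>"] + sentence.lower().split() + ["</s>"]  # sentence boundary markers
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    model: Dict[str, Dict[str, float]] = {}
    for prev, nxt_counts in counts.items():
        total = sum(nxt_counts.values())
        model[prev] = {w: c / total for w, c in nxt_counts.items()}  # normalize to probabilities
    return model


def most_likely_next(model: Dict[str, Dict[str, float]], word: str) -> str:
    """Greedy prediction: the highest-probability next word, or end-of-sentence."""
    options = model.get(word.lower(), {})
    return max(options, key=options.get) if options else "</s>"


# Hypothetical domain-specific training data.
corpus = ["the tenant pays rent", "the tenant pays utilities", "the landlord pays taxes"]
model = train_bigram(corpus)
print(model["the"])                    # "tenant" has probability 2/3, "landlord" 1/3
print(most_likely_next(model, "the"))  # tenant
```

A neural SLM replaces the count table with learned weights and conditions on much longer context, but the training objective (predict the next token given the previous ones) is the same.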

6. What is the best small language model?

The best SLM depends on your use case. Leading options include Phi-2 by Microsoft, Mistral 7B, Gemma by Google, and TinyLlama. These models offer a strong balance between size, speed, and accuracy, and perform well in limited-resource environments.

7. How to create SLM?

To create a small language model, start by defining the scope and size (number of parameters). Next, select a lightweight architecture like a mini transformer. Gather or curate a clean dataset for training. Train the model on your hardware or a cloud platform. Finally, fine-tune the model for specific use cases.
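Defining the scope and size is easier when you can compute a parameter count from a candidate architecture. The sketch below assumes a GPT-2-style decoder-only transformer (learned position embeddings, a 4× feed-forward layer, biases on every linear layer, and an output head tied to the token embeddings); other architectures, such as Llama-style models, use different formulas. `DecoderConfig` and `count_parameters` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class DecoderConfig:
    vocab_size: int
    context_length: int
    d_model: int
    n_layers: int


def count_parameters(cfg: DecoderConfig) -> int:
    """Parameter count for a GPT-2-style decoder with a tied output head."""
    d = cfg.d_model
    embeddings = cfg.vocab_size * d + cfg.context_length * d  # token + learned position embeddings
    attention = 4 * d * d + 4 * d    # Q, K, V and output projections, with biases
    mlp = 8 * d * d + 5 * d          # d -> 4d -> d feed-forward, with biases
    layer_norms = 2 * (2 * d)        # two LayerNorms per block (scale + shift)
    per_layer = attention + mlp + layer_norms
    final_norm = 2 * d
    return embeddings + cfg.n_layers * per_layer + final_norm


gpt2_small = DecoderConfig(vocab_size=50257, context_length=1024, d_model=768, n_layers=12)
print(f"{count_parameters(gpt2_small):,}")  # 124,439,808

# A hypothetical smaller configuration for a narrow, on-device use case.
tiny = DecoderConfig(vocab_size=32000, context_length=512, d_model=512, n_layers=8)
print(f"{count_parameters(tiny):,}")  # 41,866,240
```

The per-layer cost grows with the square of `d_model`, so shrinking the hidden size is usually the most effective lever for hitting a target size, while the vocabulary size drives the embedding cost.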

8. Can I build my own small language model?

Yes, you can build your own SLM using open-source frameworks like Hugging Face Transformers, PyTorch, or TensorFlow. You’ll need domain data, training resources, and skill in machine learning. Many tutorials and pre-built architectures are available to simplify the process.

9. What are examples of small language models?

Examples include Phi-2 (Microsoft), TinyLlama, DistilBERT, Mistral 7B, Gemma, GPT-2 Small, and CaLLM Edge. These models typically range from about 50 million to a few billion parameters and are designed for efficient performance.

10. Where are small language models used?

SLMs are used in embedded systems, customer support bots, and mobile apps. They also feature in healthcare diagnostics and manufacturing assistance. You will find them in automotive systems and privacy-sensitive enterprise tools. They’re preferred in scenarios needing quick responses, low latency, or offline functionality.

Which small language model use case do you find most interesting?

The small language model market reached USD 7.76 billion in 2023. From 2024 to 2030, it is projected to grow at a CAGR of 15.6%.

Small language models are a key reason AI will become accessible to everyone and spread into many more use cases.

IT service industry giant Infosys understands this opportunity.

We are deepening our work in generative AI. We are working with clients to deploy enterprise generative AI platforms, which become the launch pad for clients’ usage of different use cases in generative AI. We are building a Small Language Model leveraging industry and Infosys’ data sets. This will be used to build generative AI applications across different industries. We have launched multi-agent capabilities to support clients in deploying agent solutions using generative AI.

Our generative AI approach is helping clients drive growth and productivity impact across their organizations. We are partnering with clients to build a strong data foundation which is critical for any of these generative AI programs. One example, we’re working with a logistics major using Topaz to power their operational efficiency improvements. Concurrently, we are supporting their digital transformation journey to help them deliver exceptional services for their customers.

– Salil Parekh, CEO at Infosys

If you are an entrepreneur or product maker, let us know what you think about the SLM opportunity. Please share your thoughts in the comments!

Learn more about AI models, their use cases, and the science behind their optimizations, explained in plain language:

- Vertical AI Agents Will Replace SaaS – Experts Warn On Future Of Workflow Automation

- Stanford’s S1 model – How Stanford built low-cost open source rival to OpenAI’s o1

- What is OpenAI o3-mini – technical features, performance benchmarks, and applications

- What is Alibaba’s Qwen2.5-Max – technical features, performance benchmarks, and applications

- Gemini 2.5 Pro is now free – learn how to make the most of it for daily productivity

- Learn NotebookLM for beginners – 2025 Guide With FAQs Solved And Real Examples


This blog post is written using resources of Merrative. We are a publishing talent marketplace that helps you create publications and content libraries.

Get in touch if you would like to create a content library like ours. We specialize in the niche of Applied AI, Technology, Machine Learning, or Data Science.
