In this blog post, I analyze the adoption of Retrieval-Augmented Generation (RAG) technology, its impact on the healthcare sector, and how to navigate the risks of RAG in healthcare.

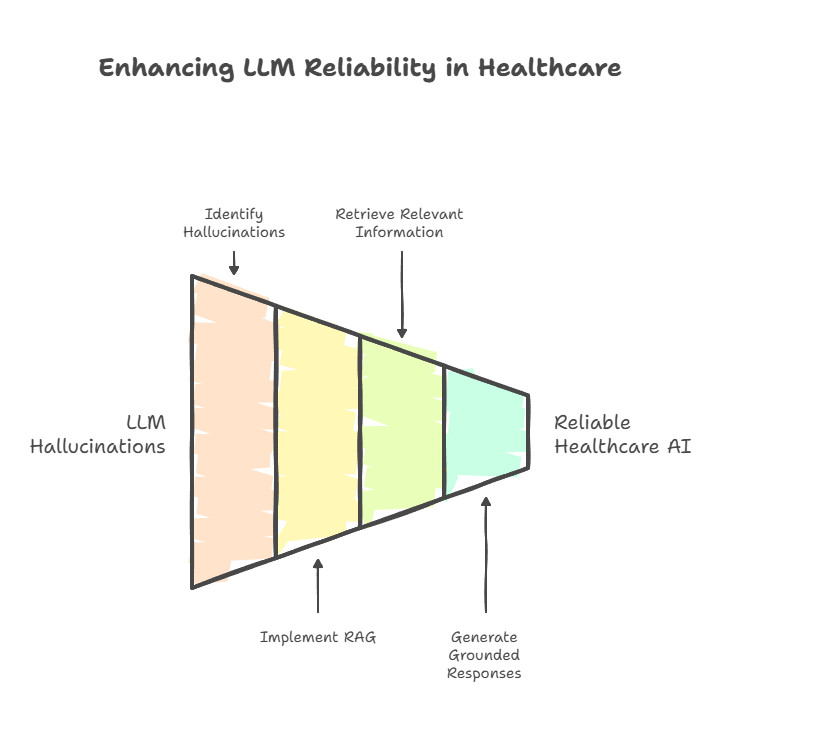

RAG addresses the most critical failing of standard Large Language Models (LLMs): their propensity to “hallucinate,” or generate inaccurate information. RAG mitigates this by grounding responses in external, authoritative knowledge sources. This architectural shift from a “closed-book” to an “open-book” system introduces a necessary layer of accuracy, timeliness, and verifiability. As a result, RAG is a foundational pillar for the responsible deployment of AI in clinical and operational settings.

I have explained Retrieval Augmented Generation as a concept in simple language:

According to Grand View Research, in 2024, the worldwide market for retrieval augmented generation in healthcare was worth $238.3 million and is projected to expand at an annual growth rate of 49.9% through 2030. Healthcare is the dominant adopting vertical, driven by the urgent need for reliable AI.

Key Takeaways:

In this blog, I will detail real-world use cases of how RAG is delivering measurable value in the healthcare sector along with:

- Implementation Challenges: Covers the major barriers to RAG adoption in healthcare, including algorithmic bias risks, HIPAA compliance requirements, and the technical complexity of integrating with legacy systems.

- Future Technology Trends: Discusses the evolution toward multi-modal RAG systems. These systems integrate knowledge graphs and various data types to enable personalized medicine. The discussion also covers the operational complexities this evolution creates.

- Strategic Implementation Framework: Provides healthcare leaders with a phased adoption approach. This approach prioritizes high-value use cases. It emphasizes building organizational capabilities over simple technology procurement.

Why use RAG in AI for Healthcare?

The advent of generative artificial intelligence, particularly Large Language Models (LLMs), has presented the healthcare industry with a profound paradox. On one hand, these models show a remarkable capacity to process and generate sophisticated, human-like text. They show promise for augmenting medical research, patient education, and clinical documentation.

On the other hand, their foundational architecture has a critical flaw: hallucinations. This flaw makes their direct application in high-stakes clinical environments difficult.

The critical flaw of ‘hallucination’ in LLMs for healthcare

Standard LLMs work as “closed-book” systems. Their knowledge is confined to the patterns and information embedded within their parameters during a static training phase. This knowledge is fixed in time and quickly becomes outdated in a rapidly evolving field like medicine. It also lacks a direct connection to verifiable, ground-truth sources.

This architectural limitation leads to the phenomenon of “hallucination”: the LLM generates responses that are plausible, confident, and articulate, but factually incorrect, unsubstantiated, or entirely fabricated.

In a clinical setting, where decisions can have life-or-death consequences, this flaw is simply unacceptable.

Studies have demonstrated that general-purpose LLMs can show a diagnostic error rate as high as 83% in complex pediatric cases. This figure underscores the grave danger of deploying ungrounded AI in patient care. An AI providing a wrong diagnosis, an outdated treatment protocol, or an incorrect drug dosage is a liability that no healthcare organization can afford.

How does RAG help overcome LLM hallucinations in healthcare use cases? – going from ‘black-box’ to ‘open-book’

Retrieval-Augmented Generation (RAG) is an AI framework that transforms an LLM from a “closed-book” memorizer into an “open-book” reasoner. The RAG process works by dynamically retrieving relevant, timely, and verifiable information from external, authoritative knowledge sources before the LLM generates a response. These sources can include a hospital’s internal database of clinical guidelines, the latest medical research from PubMed, or a patient’s own Electronic Health Record (EHR).

This retrieved context grounds the AI’s output in factual evidence, making responses more precise and reliable.

RAG signifies a fundamental shift from “black box” AI toward verifiable intelligence. RAG provides citations and shows sources used to formulate answers. This introduces auditability and explainability into AI workflows. Such transparency is essential for earning clinician trust. It also ensures safe medical AI adoption.

Recap: How does RAG work?

I have already covered RAG fundamentals with examples in this detailed guide:

Let’s briefly review how RAG works and how the concept applies to the healthcare industry.

What is RAG in AI?

The concept of RAG was formally introduced and named in a seminal 2020 paper titled: Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks

The research was led by a team from Meta AI, University College London, and New York University. The team, which included authors like Patrick Lewis and Douwe Kiela, defined RAG as a “general-purpose fine-tuning recipe”.

The core innovation was the combination of two types of memory:

- Parametric Memory: The knowledge implicitly stored in the parameters of a pre-trained sequence-to-sequence (seq2seq) model, like BART.

- Non-Parametric Memory: An external, explicit knowledge source, which in the original paper was a dense vector index of Wikipedia.

This hybrid model was designed to create a system where the knowledge base could be directly revised, expanded, and inspected. This overcomes the static and opaque nature of purely parametric LLMs.

What are the core principles of RAG?

At its heart, the RAG process consists of three distinct steps that work in concert: Retrieval, Augmentation, and Generation.

Indexing the knowledge base:

The process begins before any user query is made. The external knowledge corpus is prepared for retrieval. In a healthcare context, this could include clinical practice guidelines, medical textbooks, research articles, and internal standard operating procedures (SOPs). This involves breaking down the documents into smaller, manageable chunks of text.

Each chunk is then passed through an embedding model, which converts the text into a numerical vector (an “embedding”). These embeddings, which capture the semantic meaning of the text, are stored and indexed in a specialized vector database.
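The indexing step can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `chunk_text`, `toy_embed`, and `build_index` are hypothetical helper names, the hashed bag-of-words vector is a stand-in for a real embedding model, and a plain list stands in for a vector database.

```python
import hashlib
import math


def chunk_text(text: str, max_words: int = 40, overlap: int = 10) -> list[str]:
    """Split text into overlapping word windows (overlap must be < max_words)."""
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last window already reached the end of the text
    return chunks


def toy_embed(text: str, dim: int = 64) -> list[float]:
    """Stand-in for an embedding model: hashed bag-of-words, L2-normalised."""
    vec = [0.0] * dim
    for token in text.lower().split():
        bucket = int(hashlib.md5(token.encode()).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def build_index(documents: dict[str, str]) -> list[dict]:
    """Chunk every document and store each chunk next to its vector."""
    index = []
    for doc_id, text in documents.items():
        for chunk_id, chunk in enumerate(chunk_text(text)):
            index.append({
                "doc_id": doc_id,
                "chunk_id": chunk_id,
                "text": chunk,
                "vector": toy_embed(chunk),
            })
    return index
```

In a real deployment the toy embedding would be replaced by a trained embedding model, and chunk sizes would be tuned to the documents being indexed.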

Retrieval:

When a user submits a query (e.g., “What is the standard of care for a patient with Type 2 diabetes and renal impairment?”), the RAG system first sends this query to the Retriever. The retriever uses the same embedding model to convert the user’s query into a vector. It then performs a similarity search, such as Maximum Inner Product Search (MIPS), over the indexed vectors in the database to find the text chunks with the most similar semantic meaning. The top-K most relevant chunks are retrieved.
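To make the retrieval step concrete, here is a minimal brute-force sketch of a top-K inner product search. The three-dimensional vectors and passage titles are made up for illustration, and `retrieve_top_k` is a hypothetical helper. Production systems typically use an approximate nearest-neighbour index rather than scanning every vector.

```python
import heapq


def inner_product(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def retrieve_top_k(query_vector: list[float], index: list[dict], k: int = 2):
    """Score every indexed chunk against the query and keep the k best."""
    scored = (
        (inner_product(query_vector, entry["vector"]), entry["text"])
        for entry in index
    )
    return heapq.nlargest(k, scored, key=lambda pair: pair[0])


# Illustrative index: vectors are invented, not produced by a real model.
index = [
    {"text": "Metformin dosing in renal impairment", "vector": [0.9, 0.1, 0.0]},
    {"text": "Paediatric asthma inhaler technique", "vector": [0.0, 0.2, 0.9]},
    {"text": "Type 2 diabetes standard of care", "vector": [0.8, 0.5, 0.1]},
]
query_vector = [1.0, 0.3, 0.0]

results = retrieve_top_k(query_vector, index, k=2)
for score, text in results:
    print(f"{score:.2f}  {text}")
```

With these toy numbers, the two diabetes-related passages score highest and the asthma passage is left out.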

Augmentation and generation:

The retrieved text chunks are then merged with the original user query to create an “augmented prompt.” This enriched prompt now includes both the question and relevant, factual context. It is fed to the Generator (the LLM). The LLM uses this context to formulate a comprehensive, precise, and grounded response, significantly reducing the risk of hallucination.
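As a rough sketch, building the augmented prompt is mostly string assembly. The prompt wording, the `source` field, and the function name `build_augmented_prompt` are my own assumptions for illustration. The call to the LLM itself is left out because it depends on the provider.

```python
def build_augmented_prompt(question: str, chunks: list[dict]) -> str:
    """Combine retrieved chunks and the user question into one prompt."""
    context_lines = [
        f"[{i}] ({chunk['source']}) {chunk['text']}"
        for i, chunk in enumerate(chunks, start=1)
    ]
    return (
        "Answer the question using only the numbered context below. "
        "Cite the context numbers you relied on. "
        "If the context is insufficient, say so.\n\n"
        "Context:\n" + "\n".join(context_lines) + "\n\n"
        f"Question: {question}\nAnswer:"
    )


# Hypothetical retrieved chunks; the text is placeholder content.
retrieved = [
    {"source": "guideline.pdf", "text": "Placeholder passage on renal dosing."},
    {"source": "formulary.md", "text": "Placeholder passage on drug selection."},
]
prompt = build_augmented_prompt(
    "What is the standard of care for Type 2 diabetes with renal impairment?",
    retrieved,
)
print(prompt)
```

Numbering the context passages makes it easier to ask the model for citations that a clinician can trace back to a source.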

The evolution of RAG architectures

The simple workflow described above, often called “Naive RAG,” is just the starting point. The field has rapidly evolved to include more sophisticated architectures that deliver higher performance and allow more complex tasks.

Naive RAG:

This is the foundational “retrieve-then-generate” pipeline. It is straightforward to implement but can be limited by the quality of the first retrieval step. If the retriever pulls irrelevant documents, the generator will produce a poor answer.

Advanced RAG:

This architecture introduces steps to refine the retrieval process. It can involve techniques like query transformation. In this process, the first user query is re-worded or expanded. This improves the chances of finding relevant documents. It also incorporates a re-ranking step. After a preliminary set of documents is retrieved, a secondary, more sophisticated model re-orders them. This process pushes the most relevant context to the top.
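The two refinements can be sketched as follows. I am assuming a tiny hand-written synonym map for query transformation and a simple token-overlap score as a stand-in for the "secondary, more sophisticated model". A real system would more likely use an LLM for rewriting and a trained re-ranking model. All names here are hypothetical.

```python
# Hypothetical lay-term to clinical-term map used for query expansion.
SYNONYMS = {
    "heart attack": "myocardial infarction",
    "high blood pressure": "hypertension",
}


def expand_query(query: str) -> list[str]:
    """Return the original query plus variants with clinical terminology."""
    variants = [query]
    lowered = query.lower()
    for lay_term, clinical_term in SYNONYMS.items():
        if lay_term in lowered:
            variants.append(lowered.replace(lay_term, clinical_term))
    return variants


def rerank(query_variants: list[str], candidates: list[str], top_n: int = 2) -> list[str]:
    """Re-order candidates by the share of their tokens found in the queries."""
    query_tokens = set()
    for variant in query_variants:
        query_tokens.update(variant.lower().split())

    def score(text: str) -> float:
        tokens = set(text.lower().split())
        return len(tokens & query_tokens) / (len(tokens) or 1)

    return sorted(candidates, key=score, reverse=True)[:top_n]


variants = expand_query("Treatment after heart attack")
candidates = [
    "Hospital parking policy",
    "Secondary prevention after myocardial infarction",
    "Treatment of seasonal allergies",
]
print(rerank(variants, candidates))
```

Without the expansion step, the passage that uses the clinical term "myocardial infarction" would share only one word with the original query.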

Modular RAG:

This signifies the cutting edge of RAG architecture and is most relevant for complex domains like healthcare. It reimagines RAG not as a linear pipe but as a flexible framework of interchangeable modules. This can include a search module, a memory module to recall past interactions, and routing logic to query multiple knowledge sources (for example, a document database and a structured patient database) and then fuse the results.
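A minimal sketch of the routing idea, assuming two hypothetical knowledge sources and a keyword-based router. The search functions below are placeholders that return canned strings. In practice the router might be a classifier or an LLM call, and each source would be a real search backend.

```python
from typing import Callable


def search_guidelines(query: str) -> list[str]:
    """Placeholder for a document (vector) search."""
    return [f"guideline passage about: {query}"]


def search_patient_records(query: str) -> list[str]:
    """Placeholder for a structured patient-database lookup."""
    return [f"structured record field matching: {query}"]


ROUTES: dict[str, Callable[[str], list[str]]] = {
    "guidelines": search_guidelines,
    "patient_records": search_patient_records,
}

# Crude keyword hints that suggest the question is about a specific patient.
PATIENT_HINTS = ("patient", "lab result", "allerg")


def route(query: str) -> list[str]:
    """Decide which knowledge sources to query."""
    selected = ["guidelines"]
    if any(hint in query.lower() for hint in PATIENT_HINTS):
        selected.append("patient_records")
    return selected


def retrieve_and_fuse(query: str) -> list[dict]:
    """Query every selected source and merge results, keeping provenance."""
    fused = []
    for name in route(query):
        for passage in ROUTES[name](query):
            fused.append({"source": name, "text": passage})
    return fused


print(retrieve_and_fuse("Does this patient have a penicillin allergy?"))
```

Keeping the source name on every fused passage preserves the traceability that makes RAG attractive in clinical settings.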

A key development in Modular RAG is the integration of Knowledge Graphs (KGs). While vector search finds semantically similar text, KGs model the explicit relationships between entities (e.g., ‘Drug A’ treats ‘Disease B’; ‘Gene C’ is linked to ‘Condition D’). By traversing these relationships, a GraphRAG system can execute multi-step reasoning. This reasoning is impossible with simple text retrieval. As a result, it leads to more insightful and precise clinical support.
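To illustrate multi-step reasoning over a knowledge graph, here is a small breadth-first traversal over subject-relation-object triples. The entities reuse the placeholders from the paragraph above. The middle edge ("Disease B" associated with "Gene C") is invented purely to connect them, and `find_path` is a hypothetical helper, not a GraphRAG library call.

```python
from collections import deque

# Toy triples; the middle edge is made up to link the two examples.
TRIPLES = [
    ("Drug A", "treats", "Disease B"),
    ("Disease B", "associated_with", "Gene C"),
    ("Gene C", "linked_to", "Condition D"),
]


def build_graph(triples):
    """Index triples by subject for quick neighbour lookup."""
    graph = {}
    for subject, relation, obj in triples:
        graph.setdefault(subject, []).append((relation, obj))
    return graph


def find_path(graph, start, goal, max_hops=3):
    """Breadth-first search returning the chain of triples from start to goal."""
    queue = deque([(start, [])])
    visited = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        if len(path) >= max_hops:
            continue  # do not expand beyond the hop limit
        for relation, neighbour in graph.get(node, []):
            if neighbour not in visited:
                visited.add(neighbour)
                queue.append((neighbour, path + [(node, relation, neighbour)]))
    return None


graph = build_graph(TRIPLES)
for step in find_path(graph, "Drug A", "Condition D"):
    print(" -> ".join(step))
```

The returned chain of triples can be added to the prompt as explicit evidence, which a pure similarity search over text chunks would not produce on its own.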

Why is the RAG evolution key for healthcare advancement?

This evolution from a simple search feature to a complex reasoning framework is critical. For healthcare organizations, the strategic aim should not be to simply deploy a RAG-powered chatbot. Instead, the goal should be to build a modular RAG platform that can incorporate these advanced reasoning capabilities over time.

Such a platform could one day synthesize information from a patient’s EHR. It could include their genomic report and the latest clinical guidelines. It could also analyze real-time imaging data. The outcome would be a truly holistic and intelligent clinical recommendation.

Market analysis: Quantifying the RAG in healthcare opportunity

The market for Retrieval-Augmented Generation (RAG) is primarily driven by healthcare. Compound annual growth rates (CAGRs) are projected to reach around 50%.

The variance in these projections highlights the market’s early stage. Different methodologies and scopes result in a range of outcomes. Yet, the consistent, aggressive growth trajectory across all reports points to a clear conclusion: RAG is becoming a core part of enterprise AI infrastructure.

Table 1: RAG Market Forecast Synthesis (2024-2034)

| Source | 2024 Market Size (USD) | Forecast Year | Forecasted Market Size (USD) | CAGR (%) |
| --- | --- | --- | --- | --- |
| Market.us | 1.3 Billion | 2034 | 74.5 Billion | 49.9 |
| Precedence Research | 1.24 Billion | 2034 | 67.42 Billion | 49.12 |
| Grand View Research | 1.2 Billion | 2030 | 11.0 Billion | 49.1 |
| Roots Analysis | 1.96 Billion (2025) | 2035 | 40.34 Billion | 35.31 |

Note: Projections vary significantly, reflecting a nascent, high-growth market. The consistent trend across all analyses is a CAGR of approximately 35-50%, indicating a major technological shift.

What is the market size for RAG in healthcare?

Crucially, healthcare is consistently cited as the dominant industry vertical for RAG adoption, securing a 36.61% share of the market in 2024. A more focused analysis by Grand View Research valued the healthcare-specific RAG market at USD 238.3 million in 2024, projecting it to reach USD 2.26 billion by 2030 at a CAGR of 49.9%.

Market segmentation: Key technology and deployment trends

The composition of the RAG market reveals key trends in how the technology is being deployed and utilized.

Deployment:

Cloud-based solutions are the dominant deployment model, capturing over 75% of the market share in 2024. The scalability, flexibility, and cost-effectiveness of the cloud drive this trend. The cloud allows organizations to manage vast and fluctuating data volumes. They can do this without the significant capital expenditure required for on-premises hardware.

Nonetheless, on-premises deployment is experiencing significant growth, particularly in highly regulated industries like healthcare and finance. Healthcare organizations need absolute control over sensitive data. They also need stringent privacy compliance under laws like HIPAA. Additionally, they aim to reduce the risk of external breaches. Hence, on-premises solutions become an attractive and necessary choice for many of them.

Function:

The Document Retrieval function leads the market, accounting for approximately one-third of the share. This highlights the primary value driver for RAG as it enhances the contextual accuracy of LLMs. It reduces hallucinations by grounding them in specific, verifiable documents.

Technology:

Natural Language Processing (NLP) is the foundational technology, holding a market share of 38.2%. Its pivotal role is in understanding and interpreting human language and grasping user intent. This makes it the engine that powers the entire RAG framework.

Regional landscape and economic factors for RAG industry growth

Geographically, North America stands as the undisputed leader in the RAG market. It commanded over 37% of the global share in 2024, with revenues of approximately USD 0.4 billion.

This dominance is fueled by a combination of the following factors:

- Early investment in AI and NLP

- High enterprise adoption rates

- Robust and mature cloud infrastructure

- Presence of major technology innovators

Impact of US tariffs on Retrieval Augmented Generation (RAG) industry growth

Despite this strong position, the U.S. market faces some economic headwinds. According to market.us, tariffs on imported hardware and software components have reportedly increased operational costs for U.S.-based RAG companies by an estimated 10-12%. While this may temper the pace of innovation in the short term, particularly for smaller firms, strong demand from key industries like healthcare has kept the overall market growth trajectory strong.

The apparent discrepancy between the massive overall RAG market forecasts (e.g., USD 74.5 billion) and the more modest healthcare-specific forecast (USD 2.26 billion) is not a contradiction but rather an important strategic indicator. It suggests a lag in direct monetization for purpose-built healthcare RAG products compared to the broader consumption of RAG-enabling technologies.

RAG in healthcare opportunity

Healthcare is a massive consumer of the foundational elements of RAG. These elements include cloud services, vector databases, and NLP APIs from major tech vendors. Nonetheless, the market for pre-packaged, end-to-end RAG solutions tailored specifically for healthcare is still in its infancy. It faces longer sales cycles and complex integration challenges.

This indicates an important opportunity for healthcare organizations. Rather than purchasing a single “RAG product,” they can use these foundational tools to integrate RAG capabilities into their existing data platforms and workflows. This points toward an integration and platform-building strategy over a simple procurement strategy.

What is the RAG application in healthcare? – RAG in healthcare use cases across the value chain

Retrieval-Augmented Generation is not a theoretical technology. It is being actively deployed across the healthcare ecosystem. This deployment solves tangible problems, enhances clinical capabilities, and streamlines operations. From the point of care to the research lab, RAG is providing a reliable foundation for AI-driven transformation.

RAG for enhancing clinical decision support (CDS)

The most critical application of RAG in healthcare is in augmenting the decision-making process for clinicians. RAG-powered CDS tools supply real-time, context-aware information. They cross-reference a patient’s specific data (symptoms, EHRs, lab results) against a vast and constantly updated corpus of medical literature. This includes clinical guidelines and published research.

Real examples of RAG in healthcare for CDS: Apollo 24|7 and Google Cloud

Apollo 24|7, a major healthcare platform, partnered with Google Cloud to build a Clinical Intelligence Engine (CIE).

This system integrates Google’s MedLM with a RAG architecture. It provides clinicians real-time access to de-identified patient data, recent studies, and treatment guidelines. The RAG framework allowed Apollo to effectively use its massive, unstructured internal clinical knowledge base, which was too large and complex to be used in standard LLM prompts.

Learn more: How Apollo 24|7 leverages MedLM with RAG to revolutionize healthcare

Real examples of RAG in healthcare for CDS: IBM Watson Health

IBM Watson Health has been a prominent player in this space, particularly in oncology.

Its Watson for Oncology platform uses RAG-like techniques. It analyzes patient data against medical literature. The platform achieves high concordance with treatment recommendations from expert oncologists (up to 96% in some studies).

The Watson for Clinical Trial Matching system, used in collaboration with the Mayo Clinic, demonstrated an 80% increase in enrollment for breast cancer trials. This was achieved by automating and accelerating the process of matching eligible patients to complex trial criteria.

RAG in healthcare to accelerate pharmaceutical research and drug discovery

In the pharmaceutical industry, RAG is being used to navigate the immense and rapidly growing body of scientific information. This has accelerated research and development timelines.

RAG systems can analyze millions of research papers, clinical trial results, patents, and biological datasets to find promising drug candidates. They can improve clinical trial designs and predict potential risks.

Real examples of RAG in healthcare for pharmaceutical research: Ontosight AI

Ontosight.ai is an AI-driven intelligence platform for the life sciences. It uses RAG to speed up drug discovery and optimize clinical trials.

The platform uses ontologies (formal representations of knowledge with defined relationships) to create a “machine-interpretable” language. This allows the RAG system to understand the complex biological and chemical relationships within the data, leading to more insightful analysis for tasks like target identification and patient stratification.

Learn more: Ontosight AI

Real examples of RAG in healthcare for pharmaceutical research: Express Scripts

Express Scripts, a leading pharmacy benefit manager, has developed a RAG-powered chatbot to help check for potential drug-drug interactions (DDIs).

RAG combines information retrieval from a knowledge base, such as a database of drug interactions, with an LLM to offer more precise and contextually relevant responses. DDIs are a significant concern in healthcare, as they can lead to adverse events and increased healthcare costs. Express Scripts’ chatbot aims to proactively detect potential DDIs, helping to prevent these issues.

The chatbot streamlines the process of checking for DDIs. This could reduce the burden on healthcare professionals and improve the overall efficiency of medication management. It may also empower patients to be more informed about their medications and potential interactions.

This is a critical safety function. Independent studies of traditional DDI software have shown that accuracy remains a significant challenge. Even the best tools have correct answer rates around 70%.

A model like ChatGPT-4 recognized that an interaction occurs with 100% accuracy, but its ability to correctly classify the severity of that interaction was much lower (37.3%). This highlights the crucial role of RAG in grounding such predictions in authoritative pharmacological databases to support clinical safety.

RAG in healthcare for patient engagement and support

RAG is powering patient-facing tools that offer personalized, precise, and accessible information, thereby improving health literacy, adherence, and overall engagement.

Real examples of RAG in healthcare for patient engagement: Walgreens

Walgreens has implemented a RAG-powered chatbot that sends personalized medication reminders. By adapting its communication style and providing motivational messages, the tool aims to improve adherence to chronic medications. The chatbot can also offer extra support, like answering common medication-related questions and providing information on potential side effects.

A formal Walgreens study employed a sophisticated AI targeting platform, the type of engine that would power such a chatbot. The study demonstrated a 5.5% to 9.7% increase in medication adherence for patients on diabetes, hypertension, and statin medications, compared with those in a non-AI intervention group.

Learn more: AI-Driven Impact: Boosting Medication Adherence at Walgreens

Real examples of RAG in healthcare for patient engagement: Northwell Health

Northwell Health, New York’s largest healthcare provider, deployed an AI chatbot. It uses RAG to manage appointment scheduling. The chatbot also handles rescheduling and cancellations. This practical application has had a significant operational impact. It has reduced the volume of calls to their call center by 30% to 50%. This reduction frees up staff to handle more complex patient needs.

Learn more: Northwell releases AI-driven pregnancy chatbot and Northwell launches AI chatbot to reduce colonoscopy no-shows

Real examples of RAG in healthcare for patient engagement: Boston Children’s Hospital

Boston Children’s Hospital developed KidsMD, a skill for Amazon’s Alexa platform. This RAG-powered tool acts as a virtual triage assistant. It helps parents assess their child’s symptoms to decide if a doctor’s visit is necessary. It also assists with the appointment scheduling process.

Learn more: Boston Children’s Hospital for Amazon Alexa’s Beta HIPAA launch

Real examples of RAG in healthcare for patient engagement: Radbuddy by Signity Solutions

Signity Solutions developed Radbuddy, a specialized chatbot for lung health. It is built on a RAG framework to offer real-time, role-specific information from the hospital’s internal systems. It gives doctors quick access to diagnostic protocols. Patients get clear answers to their queries. It also helps administrative staff manage appointments and other details.

Learn more: AI-Powered Medical Radiology Chatbot

RAG in healthcare for streamlining administrative and operational workflows

Perhaps the most immediate and measurable impact of RAG is in automating the burdensome administrative tasks that consume a significant part of healthcare resources. RAG can automate medical coding, summarize patient records for insurance claims, and create intelligent, searchable knowledge bases from internal documents.

Real examples of RAG in healthcare for operations management: Accolade

Accolade, a healthcare service delivery company, faced significant challenges with its data being fragmented across multiple, siloed platforms.

They implemented a RAG solution using Databricks’ DBRX model built upon a unified lakehouse architecture. This allowed them to create a single, reliable source of truth, dramatically improving their internal search capabilities and customer service. Their teams can now quickly access precise information about customer commitments and internal protocols. This leads to faster response times and more personalized care delivery. The project’s success depended on establishing a solid data foundation and on strict governance, via tools like Unity Catalog, to support HIPAA compliance.

Learn more: Creating seamless healthcare experiences from start to finish

RAG in healthcare for enterprise use cases

A common enterprise use case is transforming scattered internal documents into a conversational Enterprise Knowledge Base. These documents include HR policies, clinical protocols, and IT procedures. Staff no longer need to manually search through folders and PDFs. They can ask plain-language questions and get immediate, synthesized answers. This saves time and improves operational consistency.

The most successful and quantifiable RAG implementations to date have been these types of pragmatic solutions to well-defined, information-intensive problems. The highest ROI is found where RAG automates repetitive, costly, and error-prone human workflows. This “low-hanging fruit” approach delivers measurable value. It also builds the institutional trust and experience necessary to tackle more ambitious clinical applications in the future.

Algorithmic bias risk for RAG in AI healthcare

The potential of Retrieval-Augmented Generation in healthcare is immense. Nonetheless, its implementation comes with profound risks which must be proactively managed. For healthcare leaders, understanding and mitigating these challenges is not an ancillary task but a core need for responsible innovation. The risks are deeply interconnected, where a technical failure can cascade into an ethical crisis and a regulatory disaster.

The most insidious risk in healthcare AI is algorithmic bias. RAG systems, despite their architectural advantages, are not immune.

The quality and fairness of a RAG system’s output are entirely dependent on the data it retrieves. If the knowledge base itself holds historical biases, the system will replicate these biases. It can also amplify them, leading to inaccurate results. These inaccurate results can disproportionately harm certain patient populations.

Racial bias in health risk prediction

One of the most cited examples of AI bias in healthcare involved a commercial algorithm used by U.S. hospitals to identify patients for high-risk care management programs. The algorithm used historical healthcare cost as a proxy for health need. Because Black patients have historically received less medical care due to systemic inequities, they incurred lower costs. The algorithm therefore systematically underestimated their true illness severity and allocated fewer care management resources to them. This actively widened the health disparity it was meant to reduce.

Learn more: Case Studies: AI in Healthcare

Bias in medical imaging

AI algorithms developed for diagnosing skin cancer perform significantly less accurately on individuals with darker skin tones. This is a direct result of the training and retrieval datasets being overwhelmingly composed of images from light-skinned individuals. This creates a direct and life-threatening risk of missed or delayed melanoma diagnoses for non-white patients.

Learn more: Addressing bias in big data and AI for health care: A call for open science

Bias in medical devices

The bias is not limited to software. Physical devices like pulse oximeters are known to systematically overestimate blood oxygen levels in patients with darker skin. An AI system, whether RAG-based or not, that retrieves this flawed data would perpetuate and exacerbate this inequity. This could lead to delayed treatment for hypoxemia.

How to mitigate bias risk in RAG AI for healthcare?

Mitigating bias risk requires a comprehensive governance strategy which includes:

Data diversity and representation

The most fundamental strategy is to make sure the knowledge base the RAG system retrieves from is fair, diverse, and representative of all patient populations:

- Incorporate diverse datasets: Actively include data from a wide range of demographic groups. This includes different genders, ethnicities, ages, and socioeconomic statuses. This helps the model understand the unique physiological patterns, genetic factors, and health needs of various populations.

- Use international and reputable sources: Enhance fairness by using international guidelines. Incorporate reputable academic sources. Avoid relying on data from a single region or institution. This helps counteract geographical bias, where most patient data may come from only a few states or countries.

- Develop multilingual capabilities: Creating high-quality, multilingual medical knowledge bases is crucial for ensuring equity and serving diverse patient communities effectively.

Evaluation and expert oversight

Continuous monitoring and human expertise are non-negotiable components of a responsible AI strategy:

- Keep human-in-the-loop (HITL) oversight: In clinical applications, it is crucial to have a human clinician involved. They must be the final arbiter of any AI-generated recommendation. The AI’s role is to help, not replace, human judgment.

- Clinician and expert review: Implement a process of cross-validation. In this process, clinicians and other subject matter experts regularly review the AI’s outputs. They check for accuracy and potential bias. This expert feedback is crucial for data labeling and verifying the model’s performance.

- Continuous auditing: AI developers and healthcare organizations must continuously audit and refine retrieval algorithms. They should also evaluate model outcomes. This is essential to check for and correct any emerging disparities across demographic groups.
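One way to make continuous auditing concrete is to track an outcome metric per demographic group and flag gaps. The sketch below assumes clinician-reviewed records with a `group` label and a `correct` flag. The field names, the 5-percentage-point threshold, and the function names are illustrative assumptions, and a real audit would use metrics chosen with clinical and ethics input.

```python
from collections import defaultdict


def accuracy_by_group(records: list[dict]) -> dict[str, float]:
    """Share of reviewed answers marked correct, per demographic group."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for record in records:
        totals[record["group"]] += 1
        correct[record["group"]] += int(record["correct"])
    return {group: correct[group] / totals[group] for group in totals}


def flag_disparities(per_group: dict[str, float], max_gap: float = 0.05) -> list[str]:
    """Return groups whose accuracy trails the best group by more than max_gap."""
    best = max(per_group.values())
    return sorted(g for g, acc in per_group.items() if best - acc > max_gap)


# Made-up review data for illustration only.
reviews = (
    [{"group": "A", "correct": True}] * 4
    + [{"group": "B", "correct": True}] * 2
    + [{"group": "B", "correct": False}] * 2
)
per_group = accuracy_by_group(reviews)
print(per_group)                    # {'A': 1.0, 'B': 0.5}
print(flag_disparities(per_group))  # ['B']
```

Running a check like this on a schedule turns "continuous auditing" into a report that an interdisciplinary team can act on.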

Contextual and ethical safeguards

Technical design and prompt engineering must be thoughtfully implemented to promote fairness and cultural sensitivity.

- Context-specific prompt engineering: Design prompts that are culturally and contextually relevant to the specific healthcare setting. This helps make sure that AI-generated outputs align with local practices and environmental constraints. This is especially important in resource-constrained settings.

- Implement ethical design features: The system should be explicitly designed to be impartial. It should avoid stereotypes. Safeguards should be included to prevent it from giving direct medical advice to patients without clinical supervision.

- Promote transparency: A key advantage of RAG is the ability to trace an answer back to its source document. Systems should be designed to give clear citations. They should offer source documentation. This approach allows clinicians to verify the information. It also helps build trust in the AI’s recommendations.

Interdisciplinary governance

Mitigating bias is not solely a technical problem; it requires collaboration across multiple disciplines.

- Form an interdisciplinary team: Build teams that include not only data scientists and clinicians but also ethicists and sociologists. They help evaluate the potential societal impact of AI systems. Their input is vital to find hidden biases in decision-making processes.

- Adhere to legal and ethical frameworks: Make sure the AI system complies with all relevant laws. These laws include regulations that protect patients from discrimination, like the Civil Rights Act.

By implementing this comprehensive, multi-layered approach, healthcare organizations can deploy RAG systems more responsibly. This approach works to reduce the risk of perpetuating health inequities. It builds a foundation for more trustworthy and fair AI for healthcare.

Data privacy and security: Upholding HIPAA in the age of RAG in healthcare

A RAG system in a healthcare setting must handle vast quantities of sensitive information. By its nature, it accesses and processes highly sensitive Protected Health Information (PHI). This makes compliance with data privacy regulations like the Health Insurance Portability and Accountability Act (HIPAA) in the United States a paramount and complex concern.

Key HIPAA considerations to mitigate risks of RAG in healthcare:

Minimum necessary standard:

The RAG system must access and process only the minimum amount of PHI necessary to perform its specific, intended function. This applies to both the retrieval mechanism and the LLM.
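One simple way to approach this in code is a per-purpose allow-list applied before any record reaches the retriever or the prompt. The purposes and field names below are hypothetical, and this sketch illustrates the idea only. It is not a HIPAA compliance control on its own.

```python
# Hypothetical mapping from a declared purpose to the fields it may see.
ALLOWED_FIELDS = {
    "appointment_scheduling": {"patient_id", "preferred_clinic", "next_available_slot"},
    "medication_review": {"patient_id", "active_medications", "allergies", "egfr"},
}


def minimum_necessary(record: dict, purpose: str) -> dict:
    """Keep only the fields allowed for this purpose; unknown purposes get nothing."""
    allowed = ALLOWED_FIELDS.get(purpose, set())
    return {key: value for key, value in record.items() if key in allowed}


# Made-up record for illustration.
record = {
    "patient_id": "p-001",
    "preferred_clinic": "North Campus",
    "next_available_slot": "2025-03-04T09:30",
    "active_medications": ["metformin"],
    "diagnosis_notes": "free-text clinical notes",
}
print(minimum_necessary(record, "appointment_scheduling"))
```

Defaulting to an empty result for an unrecognized purpose keeps the filter fail-closed.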

Business associate agreements (BAAs):

If any part of the RAG pipeline is managed by a third-party vendor (e.g., a cloud provider like AWS, a vector database service, or an LLM API provider like OpenAI), that vendor is considered a Business Associate. A legally binding BAA must be in place, holding the vendor accountable for safeguarding PHI according to HIPAA standards.

Data leakage via prompts:

A significant and often overlooked vulnerability arises when sending prompts containing PHI to external, third-party LLM APIs. Even if the vendor’s policy states that API data is not used for model training, the information still leaves the healthcare organization’s secure environment. This poses a risk. Most API providers keep this data for a period (e.g., 30 days) for abuse and misuse monitoring, creating a window of risk and a potential data breach vector.
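A common first line of defense is to scrub obvious identifiers from a prompt before it leaves the organization. The sketch below uses a few regular expressions for that purpose; the patterns and the `redact_prompt` helper are assumptions for illustration and would not, on their own, amount to HIPAA-grade de-identification.

```python
import re

# Illustrative patterns only; real PHI takes many more forms than these.
PHI_PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.]\d{3}[-.]\d{4}\b"),
    "MRN": re.compile(r"\bMRN[:\s]*\d+\b", re.IGNORECASE),
}


def redact_prompt(prompt: str) -> str:
    """Replace each matched identifier with a bracketed label."""
    for label, pattern in PHI_PATTERNS.items():
        prompt = pattern.sub(f"[{label}]", prompt)
    return prompt


# Made-up example prompt.
raw = "Patient MRN: 48213, SSN 123-45-6789, call 555-867-5309 about dosing."
print(redact_prompt(raw))
```

Pattern matching misses names, addresses, and free-text identifiers, so a filter like this is best treated as one layer alongside BAAs and private deployment options rather than a replacement for them.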

The limits of de-identification:

De-identifying data before using it in a RAG system is a critical privacy-preserving strategy. Still, it is not a perfect solution.

Academic studies have repeatedly shown that individuals can be re-identified from datasets that were de-identified under HIPAA’s Safe Harbor or Expert Determination approaches. This poses a persistent risk that must be managed through robust security controls and data governance.

Learn more:

- Updating HIPAA Security to Respond to Artificial Intelligence

- Privacy Concerns at the Intersection of Generative AI and Healthcare

RAG in healthcare implementation barriers: The operational realities

Beyond the ethical and regulatory minefields, healthcare organizations face significant practical challenges in deploying RAG.

Data quality and governance:

The principle of “garbage in, gospel out” is amplified with RAG. The system’s performance is entirely contingent on the quality of its knowledge base. A retrieval corpus filled with redundant, trivial, outdated, or contradictory documents will destroy the RAG system’s reliability and trustworthiness.
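To make the curation point concrete, here is a minimal sketch that keeps only the most recently reviewed document per topic and drops anything past a review-age cutoff. The document fields, the three-year cutoff, and the `curate` helper are assumptions chosen for illustration.

```python
from datetime import date

# Hypothetical document records; field names are assumptions for illustration.
corpus = [
    {"id": "sepsis-v1", "topic": "sepsis protocol", "reviewed": date(2016, 3, 1)},
    {"id": "sepsis-v2", "topic": "sepsis protocol", "reviewed": date(2024, 6, 1)},
    {"id": "falls-v1", "topic": "falls prevention", "reviewed": date(2018, 1, 15)},
]


def curate(docs, today, max_age_days=3 * 365):
    """Keep the newest document per topic, and only if it was reviewed recently."""
    newest = {}
    for doc in docs:
        current = newest.get(doc["topic"])
        if current is None or doc["reviewed"] > current["reviewed"]:
            newest[doc["topic"]] = doc
    return [d for d in newest.values() if (today - d["reviewed"]).days <= max_age_days]


kept = curate(corpus, today=date(2025, 1, 1))
print([d["id"] for d in kept])
```

In this toy run the superseded sepsis protocol and the stale falls-prevention document are both excluded, so the retriever can no longer surface them.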

Integration and interoperability:

Integrating a RAG system with complex and often antiquated legacy systems, like EHRs, is a major technical undertaking. A modern data architecture is required with a firm commitment to interoperability standards. These include Health Level Seven (HL7) and Fast Healthcare Interoperability Resources (FHIR). This ensures seamless data flow.
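As a small example of what FHIR-based integration can look like, the sketch below flattens a FHIR Observation resource (already parsed from JSON) into a sentence that could be indexed for retrieval. The `observation_to_text` helper and the sample values are assumptions; only the field names (`resourceType`, `code`, `valueQuantity`, `effectiveDateTime`) come from the FHIR Observation resource.

```python
def observation_to_text(obs: dict) -> str:
    """Flatten a FHIR Observation (parsed JSON) into one sentence for indexing."""
    if obs.get("resourceType") != "Observation":
        raise ValueError("expected a FHIR Observation resource")
    code = obs.get("code", {})
    codings = code.get("coding") or [{}]
    name = code.get("text") or codings[0].get("display", "unknown test")
    qty = obs.get("valueQuantity")
    value = f"{qty['value']} {qty.get('unit', '')}".strip() if qty else "no numeric value"
    when = obs.get("effectiveDateTime", "unknown date")
    return f"{name}: {value} (recorded {when})"


# Made-up example resource for illustration.
example = {
    "resourceType": "Observation",
    "status": "final",
    "code": {"coding": [{"system": "http://loinc.org", "code": "4548-4",
                         "display": "Hemoglobin A1c"}]},
    "valueQuantity": {"value": 7.2, "unit": "%"},
    "effectiveDateTime": "2024-11-02",
}
print(observation_to_text(example))
```

Real EHR exports carry many more fields and resource types, so a production pipeline would need a mapping per resource type rather than this single function.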

Change management and trust:

Technology is only one part of the equation. A Deloitte survey found that 75% of healthcare organizations cited “resistance to change” as a significant barrier to AI adoption. Successful implementation requires a deliberate change management strategy. This must include training clinicians, redesigning workflows in a user-centered way, and building trust through transparency and demonstrated value.

The RAG in AI for healthcare risks are interconnected

The risks discussed above are not independent silos; they are deeply interconnected.

A technical failure, like poor data curation, can directly cause an ethical failure, such as a biased output. This, in turn, can lead to a clinical and regulatory failure, like patient harm and legal liability.

Thus, a successful RAG governance framework must be interdisciplinary from its start. It should bring together IT, data science, clinical leadership, legal, compliance, and ethics experts. This diverse team will navigate the gauntlet of responsible AI implementation.

Table: Risk matrix for RAG adoption in healthcare

| Risk Category | Specific Risk | Real-World Example | Mitigation Strategy |
| --- | --- | --- | --- |
| Ethical | Algorithmic bias leading to health disparities. | Skin cancer detection AI performs poorly on darker skin tones due to unrepresentative retrieval data. | Mandate diverse and representative datasets for retrieval corpus; implement continuous performance auditing across demographic groups; maintain clinical oversight. |
| Regulatory | HIPAA violations due to improper PHI handling. | PHI is inadvertently included in a prompt sent to a third-party LLM API without a proper Business Associate Agreement (BAA). | Use on-premises or private cloud LLMs for sensitive data. Make sure BAAs are in place with all vendors. Implement strict data filtering and de-identification pipelines. |
| Technical | Poor output quality due to “garbage in, gospel out.” | RAG system provides outdated treatment advice by retrieving from an old, uncurated clinical protocol document. | Set up a robust data governance program to continuously curate, check, and update the knowledge base. Implement version control for source documents. |
| Operational | Low adoption and clinician resistance. | A new RAG-powered diagnostic tool disrupts established clinical workflows, leading clinicians to ignore or bypass it. | Involve clinicians in the design process. Conduct thorough workflow analysis before deployment. Implement a phased rollout with targeted training and support. |
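The "continuous performance auditing across demographic groups" mitigation in the table can be sketched as a per-group accuracy check. The group labels, the 5-point gap threshold, and the audit data below are all made up for illustration.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """records: iterable of (group, was_correct) pairs from a labeled audit set."""
    totals, correct = defaultdict(int), defaultdict(int)
    for group, ok in records:
        totals[group] += 1
        correct[group] += int(ok)
    return {g: correct[g] / totals[g] for g in totals}


def flag_gaps(per_group, max_gap=0.05):
    """Flag groups whose accuracy trails the best group by more than max_gap."""
    best = max(per_group.values())
    return sorted(g for g, acc in per_group.items() if best - acc > max_gap)


# Made-up audit results: group A is correct 9 of 10 times, group B 7 of 10.
audit = [("A", True)] * 9 + [("A", False)] + [("B", True)] * 7 + [("B", False)] * 3
scores = accuracy_by_group(audit)
print(scores, flag_gaps(scores))
```

Running a check like this on a schedule, rather than once before launch, is what turns a one-off fairness review into the continuous audit the table calls for.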

RAG in healthcare AI research – notable developments to track

While current applications of RAG are already delivering significant value, the technology’s frontier is rapidly advancing. Ongoing research is pushing RAG beyond simple information retrieval. It is moving into the realm of complex, multi-modal reasoning. Research is charting a course toward a future of deeply integrated and hyper-personalized clinical intelligence.

I found some interesting AI in healthcare research that I think is impactful enough to reach commercial application soon:

RAG in healthcare for radiology

In radiology, where the risk of error can be severe, RAG has shown dramatic results.

Researchers at Juntendo University in Japan conducted a study. They tested a RAG-enhanced LLM on simulated consultations about the use of iodinated contrast media. The system completely eliminated dangerous hallucinations. It reduced them from 8% in the base model to 0%. The system also provided correct, guideline-based responses faster than existing cloud-based models.

Learn more: Retrieval-augmented generation elevates local LLM quality in radiology contrast media consultation

RAG in healthcare for pre-operative assessment

For preoperative assessment, a recent study demonstrated that a RAG model utilizing GPT-4 achieved 96.4% accuracy in determining a patient’s fitness for surgery, significantly outperforming human-generated responses (86.6%). This high accuracy was achieved by retrieving information from both international and local hospital guidelines. This highlights RAG’s ability to adapt to specific institutional contexts.

The symbiotic power of knowledge graphs (KGs)

The academic research community is focused on overcoming the limitations of basic RAG systems. The industrial sector is building more powerful and reliable frameworks specifically for medicine.

The fusion of RAG and KGs is a major research trend.

KGs offer the structured, relational context that LLMs inherently lack. This helps to minimize hallucinations. It dramatically improves the explainability of the AI’s output. The system can trace and show its reasoning path through the graph’s connections.

This relationship is symbiotic. GenAI can also be used to help automate the difficult and time-consuming process of creating and maintaining these complex knowledge graphs from unstructured text, a technique known as GraphRAG. This creates a virtuous cycle of improvement.

MedRAG and knowledge-enhanced retrieval:

Recognizing the unique demands of the medical domain, researchers are developing specialized frameworks like MedRAG. This approach enhances the standard RAG pipeline. It integrates a Knowledge Graph (KG) that explicitly models the relationships between medical concepts like diseases, symptoms, and treatments.

By using this structured knowledge, MedRAG can do more sophisticated reasoning. It can find subtle diagnostic differences between conditions with similar symptoms. It can even proactively ask clarifying follow-up questions when a patient’s information is ambiguous. Early results show that this KG-enhanced approach significantly outperforms standard models in reducing misdiagnosis rates.
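The idea of using graph structure to separate look-alike conditions and to choose follow-up questions can be shown with a toy example. This is not MedRAG's implementation; the two-condition graph, the symptom sets, and both helper functions are hypothetical and carry no clinical meaning.

```python
# Toy knowledge graph: condition -> associated symptoms (illustrative, not clinical data).
KG = {
    "condition_x": {"fever", "cough", "fatigue"},
    "condition_y": {"fever", "cough", "rash"},
}


def candidates(observed: set[str]) -> list[str]:
    """Conditions consistent with every observed symptom."""
    return sorted(c for c, symptoms in KG.items() if observed <= symptoms)


def follow_up_questions(observed: set[str]) -> list[str]:
    """Symptoms that some, but not all, candidates have; asking about them narrows the list."""
    cands = candidates(observed)
    if len(cands) < 2:
        return []
    union = set().union(*(KG[c] for c in cands))
    shared = set.intersection(*(KG[c] for c in cands))
    return sorted(union - shared)


print(candidates({"fever", "cough"}))
print(follow_up_questions({"fever", "cough"}))
```

When the observed symptoms fit more than one condition, the symptoms that differ between the candidates are exactly the ones worth asking about, which is the intuition behind clarifying questions in a KG-enhanced system.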

Multimodal RAG

The future of RAG is not confined to text. The next frontier is the development of systems that can retrieve, integrate, and reason across multiple data types simultaneously.

A future multimodal RAG system could analyze a clinician’s free-text notes, process a structured lab report, and examine a DICOM file from a CT scan alongside a patient’s genomic data. It would synthesize these disparate sources into a single, coherent clinical picture. This would allow a far more holistic analysis of a patient’s condition than is possible with text-based systems alone.

Learn more: Multi-modal transformer architecture for medical image analysis and automated report generation

What is the future of RAG? – Long-term application for RAG in healthcare

These research advancements point toward a long-term vision where RAG is not just a tool. It will be a core part of an intelligent healthcare fabric.

Hyper-personalized medicine:

The ultimate aim is to use advanced RAG systems to deliver truly personalized medicine at scale.

RAG will dynamically retrieve and analyze a patient’s unique and multi-modal data. This includes their genetic profile, lifestyle factors, comorbidities, and real-time data from wearable sensors. With this information, RAG will help clinicians create highly tailored treatment regimens. It will also help in developing proactive, preventive health interventions.

A comprehensive framework for this involves three key layers:

Data integration layer:

This is where the patient’s unique profile is built. RAG systems are designed to ingest and unify heterogeneous data types, including:

- Electronic Health Records (EHRs): Both structured data and unstructured clinical notes are processed.

- Genomic Information: This includes data on genetic variations. This data covers single nucleotide polymorphisms (SNPs) and other variant annotations. These are crucial for understanding disease risk and treatment response.

- Real-Time Wearable Data: Information from wearable sensors—like heart rate, sleep patterns, and activity levels—provides continuous monitoring and lifestyle context.

- Pharmaceutical Knowledge: The system is connected to external databases. These include DrugBank for drug mechanisms and interactions and PubMed for the latest medical literature.

Dynamic retrieval and generation layer:

This is the RAG engine at work.

- Patient-Specific Queries: The system transforms the patient’s integrated data profile (e.g., diagnosis, comorbidities, specific genetic markers) into a highly detailed query for the retrieval module.

- Contextual Retrieval: The retriever searches the external knowledge bases to find the most relevant, up-to-date information. For example, it can find clinical studies showing how a specific genetic variant affects a patient’s metabolism of a certain drug.

- Personalized Generation: The generator (an LLM like GPT-4) receives the patient’s query along with the retrieved evidence. It synthesizes this information to create a precise and contextually relevant recommendation. This may include a tailored treatment plan, medication dosage, or lifestyle adjustment.
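The three steps above (patient-specific query, contextual retrieval, personalized generation) can be sketched end to end. In this minimal version, word overlap stands in for a real retriever and the LLM call is left out; the profile fields, placeholder names such as `marker_a` and `drug_z`, and all three helpers are assumptions for illustration.

```python
def build_query(profile: dict) -> str:
    """Turn an integrated patient profile into a retrieval query string."""
    parts = [profile["diagnosis"], *profile["comorbidities"], *profile["genetic_markers"]]
    return " ".join(parts)


def retrieve(query: str, knowledge_base: list[dict], k: int = 2) -> list[dict]:
    """Rank documents by word overlap with the query (a stand-in for semantic search)."""
    q = set(query.lower().split())
    scored = [(len(q & set(doc["text"].lower().split())), doc) for doc in knowledge_base]
    scored = [item for item in scored if item[0] > 0]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [doc for _, doc in scored[:k]]


def build_prompt(profile: dict, evidence: list[dict]) -> str:
    """Assemble the text that would be sent to the generator (LLM call not shown)."""
    sources = "\n".join(f"[{d['id']}] {d['text']}" for d in evidence)
    return (
        "Answer using only the evidence below and cite source ids.\n"
        f"Patient profile: {profile}\n"
        f"Evidence:\n{sources}"
    )


# Placeholder profile and knowledge base; none of this is clinical data.
profile = {"diagnosis": "condition_x", "comorbidities": ["condition_y"],
           "genetic_markers": ["marker_a"]}
kb = [
    {"id": "doc1", "text": "marker_a carriers metabolize drug_z slowly"},
    {"id": "doc2", "text": "general nutrition advice"},
    {"id": "doc3", "text": "condition_x treatment guideline for condition_y patients"},
]
evidence = retrieve(build_query(profile), kb)
prompt = build_prompt(profile, evidence)
print(prompt)
```

Keeping retrieval and prompt assembly as separate functions makes it easier to swap the toy overlap scorer for a vector database later without touching the rest of the pipeline.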

Safety and evaluation layer:

To be clinically practical, the system must have robust safety checks. This involves cross-referencing recommendations against drug interaction databases. It ensures patient data is anonymized to follow HIPAA and GDPR. Additionally, it provides transparent, traceable links to the source evidence to build clinician trust.
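The cross-referencing step can be pictured as a lookup that runs before any recommendation is shown. The interaction table, drug names, and `check_recommendation` helper below are hypothetical; a real system would query a curated interaction database rather than a hard-coded dictionary.

```python
# Hypothetical interaction table keyed by unordered drug pairs.
INTERACTIONS = {
    frozenset({"drug_a", "drug_b"}): "increased bleeding risk (example entry)",
}


def check_recommendation(proposed_drug: str, current_meds: list[str]) -> list[str]:
    """Return warnings for known interactions between the proposed drug and current meds."""
    warnings = []
    for med in current_meds:
        note = INTERACTIONS.get(frozenset({proposed_drug, med}))
        if note:
            warnings.append(f"{proposed_drug} + {med}: {note}")
    return warnings


print(check_recommendation("drug_a", ["drug_b", "drug_c"]))
```

Using `frozenset` keys makes the lookup order-independent, so the same entry is found whether the proposed drug or the existing medication is listed first.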

Key applications in hyper-personalized medicine

This framework enables several powerful, real-world applications:

- Pharmacogenomics: This field studies how genes affect a person’s response to drugs. A RAG system can analyze a patient’s genetic markers. It can retrieve the latest research on how those markers influence drug efficacy and risk of adverse events. This allows for the choice of the most effective medication and dosage, minimizing trial and error. ArangoDB reported saving $2.2 million annually by implementing a GraphRAG-based approach for pharmacogenomics.

- Precision Oncology: Cancer treatment is increasingly driven by the specific molecular characteristics of a tumor. RAG systems can support oncologists by retrieving treatment guidelines from trusted sources like OncoKB, along with clinical trial data relevant to a patient’s specific genetic mutations. This helps oncologists find targeted therapies.

- Real-Time Monitoring and Intervention: By integrating data from wearable devices, RAG can power systems that continuously track a patient’s vital signs. They can also assess lifestyle patterns. If the system detects anomalies or trends that suggest a deteriorating condition, it can retrieve relevant clinical protocols. The system then alerts healthcare providers with a recommended intervention. This enables proactive rather than reactive care.

- Complex Genomic Data Interpretation: RAG is highly effective at making sense of massive, specialized datasets. Researchers have successfully used RAG to integrate 190 million variant annotations into a GPT-4o model. This allows clinicians to query specific genetic variants and get precise, context-rich interpretations to support diagnostic and treatment decisions.
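For the real-time monitoring application above, the anomaly detection step that would trigger protocol retrieval might look like the following sketch. It flags readings that deviate sharply from a rolling baseline; the window size, threshold, and heart-rate values are assumptions, and a clinical system would need validated thresholds.

```python
from statistics import mean, stdev


def detect_anomalies(readings, window=5, threshold=3.0):
    """Flag indices whose value deviates from the preceding window by more than threshold std devs."""
    flagged = []
    for i in range(window, len(readings)):
        baseline = readings[i - window:i]
        mu, sigma = mean(baseline), stdev(baseline)
        if sigma > 0 and abs(readings[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Made-up heart-rate stream with one spike at index 6.
heart_rate = [72, 74, 71, 73, 72, 75, 118, 74]
print(detect_anomalies(heart_rate))
```

In a RAG pipeline, each flagged index would become the trigger for a retrieval query against the relevant clinical protocols, with the alert and the retrieved protocol sent to a clinician together.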

Ultimately, the goal of using RAG for hyper-personalized medicine is to create a system where every recommendation is grounded in both the patient’s individual biology and the latest scientific evidence, leading to more effective, safer, and truly patient-centric care.

Full AI integration:

In the future, RAG will not be a standalone application. It will be deeply and seamlessly integrated into all aspects of the clinical workflow. It will serve as an intelligent layer. This layer connects EHRs, medical imaging archives (PACS), laboratory information systems, and predictive analytics platforms. It provides comprehensive, context-aware support across the entire continuum of care.

The AI as an ethical guardian:

As AI systems gain more autonomy, there is a vision for RAG to serve as a built-in ethical safeguard.

RAG systems could be required to ground every recommendation in verifiable evidence from trusted sources, including ethical guidelines and regulatory statutes. Such a requirement would push RAG systems to give transparent, evidence-based explanations for their decisions and would support compliance with medical ethics and patient privacy regulations.

A paradigm shift in care delivery:

Ultimately, the responsible integration of these advanced RAG systems could mark a paradigm shift in medicine. It would move healthcare from a reactive model to one that is proactive, precise, and deeply patient-centered. In this future, generative AI, grounded by RAG, acts as a true cognitive partner to clinicians. It augments their skills and frees them to focus on the uniquely human aspects of care.

Complexity challenge of pursuing RAG AI for healthcare

This future vision of RAG for healthcare presents a challenge of complexity. The convergence of various AI sub-fields—LLMs, search, knowledge representation, computer vision, and data analytics—will lead to powerful yet complex systems.

The “black box” problem will not disappear; rather, it will become distributed across many interacting components.

This “complexity explosion” defines the long-term strategic challenge for healthcare IT leaders: developing a new generation of MLOps (Machine Learning Operations) and governance platforms. These must be capable of building, validating, and safely managing these highly complex, multi-modal, reasoning-based AI systems.

Strategic implementation framework: A blueprint for RAG adoption for healthcare

Harnessing the transformative potential of RAG requires a strategic framework that aligns technology with business objectives and fosters organizational readiness. For healthcare leaders, a phased approach is essential for moving from concept to enterprise-wide value:

Building the business case for RAG in healthcare

A successful RAG initiative begins with a compelling and realistic business case. Rather than pursuing ambitious but ill-defined goals, the focus should be on solving specific, measurable problems.

Focus on measurable ROI:

The strongest early business cases are rooted in clear, quantifiable metrics.

This often involves focusing on operational efficiency. It may also target key performance indicators (KPIs) tied to revenue and quality scores, as demonstrated by real-world examples.

Examples include reducing call center operational costs, as seen with Northwell Health, and improving medication adherence rates, which can impact value-based care reimbursements, as seen with Walgreens. Another example is accelerating clinical trial enrollment, a major bottleneck in the multi-billion dollar pharmaceutical R&D industry, as seen with Mayo Clinic/IBM.

Identify the right problem:

RAG is fundamentally a knowledge access and management technology. The ideal pilot projects are in areas where clinicians or staff spend an inordinate amount of time searching for information. They also include situations where a lack of timely, accurate information creates process bottlenecks, safety risks, or poor patient experiences.

Articulate value beyond cost savings:

While financial ROI is crucial, the business case should also capture the significant qualitative benefits. These benefits include improved clinician satisfaction by reducing administrative burden. They also encompass enhanced patient engagement and health literacy. Most importantly, there is a reduction of clinical risk by providing more reliable, evidence-based information at the point of care.

A phased approach to RAG integration and healthcare workflow improvement

Adopting RAG is not a single event but a journey. A phased approach allows an organization to build capabilities, show value, and manage risk incrementally:

Phase 1: Foundational Readiness

This is the most critical and often underestimated phase involving infrastructure and data governance readiness.

Before any AI tool is considered, organizations must conduct a thorough evaluation of their technical infrastructure. This includes cloud capabilities and data interoperability standards like HL7 and FHIR.

The paramount task is establishing robust data governance. This involves classifying all data to decide handling requirements (e.g., PHI vs. non-PHI). It also means investing heavily in processes to clean, curate, and govern the documents that will form the RAG knowledge base. RAG does not fix bad data; it simply exposes its flaws faster and at a greater scale.

Phase 2: Pilot and Implementation

This phase prioritizes starting with low-risk, high-impact use cases.

Start with administrative, operational, or patient-support applications rather than high-stakes, real-time clinical decision support. A successful chatbot for internal policy questions can build institutional trust. It can offer valuable lessons and show ROI. This paves the way for more ambitious clinical projects.

Also, choose the right technology combination based on the specific use case. Make strategic decisions about the core components. These include deployment architecture (cloud, on-premises, or hybrid), the vector database, and the LLM. These choices will be dictated by security requirements, performance needs, and budget constraints.

Phase 3: Clinical Integration and Change Management

This phase involves adapting workflows through clinician training and patient engagement.

RAG tools should enhance clinical workflows rather than disrupt them. Map existing processes to find where an AI assistant can add value without friction. The goal is seamless integration, not a technological bolt-on.

After deployment, the success of clinical AI tools relies on clinician adoption. This requires assessing team readiness, providing targeted training, and involving clinicians in the design and validation process. A tool designed with clinicians is more likely to succeed.

For patient-facing applications, transparency is key to building trust. Organizations must be clear about data usage and how AI assists in care.

Establishing governance for ethical and responsible RAG AI for healthcare

A robust governance framework is the bedrock of a sustainable RAG strategy.

- Create an Interdisciplinary Oversight Committee: RAG is not a pure IT project. Effective governance requires a dedicated committee with representation from clinical leadership, data science, IT, legal, compliance, and bioethics. This group should be empowered to set policies and oversee all AI initiatives from the beginning.

- Continuous Monitoring and Quality Management: RAG is not a “set it and forget it” technology. Once deployed, systems must be continuously monitored for accuracy, latency, and, critically, the emergence of any biases in their outputs. This requires a dedicated MLOps (Machine Learning Operations) function.

- Human-in-the-Loop (HITL) as a Mandate: For any application that touches clinical decision-making, a qualified human clinician must be involved. This individual should always serve as the final arbiter. The AI’s role is to augment and inform, never to replace, human judgment and accountability. This HITL safeguard must be a non-negotiable principle of any clinical AI deployment.
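The human-in-the-loop mandate can be enforced in software as well as in policy. The sketch below shows one possible shape: a recommendation cannot be released until a clinician identifier is attached. The `Recommendation` type, the `approve` and `release` helpers, and the identifiers are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Recommendation:
    """An AI-drafted suggestion awaiting clinician review (hypothetical schema)."""
    text: str
    sources: list[str]
    approved_by: Optional[str] = None  # clinician identifier once reviewed


def approve(rec: Recommendation, clinician_id: str) -> Recommendation:
    """Record the reviewing clinician on the recommendation."""
    rec.approved_by = clinician_id
    return rec


def release(rec: Recommendation) -> str:
    """Refuse to release anything a clinician has not signed off on."""
    if rec.approved_by is None:
        raise PermissionError("clinician approval required before release")
    return f"{rec.text} (approved by {rec.approved_by})"


rec = Recommendation("Draft suggestion", ["doc-1"])
try:
    release(rec)
except PermissionError as err:
    print("blocked:", err)
print(release(approve(rec, "dr_smith")))
```

Making the unapproved path raise an error, instead of returning a warning string, means downstream code cannot silently ignore the missing sign-off.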

A critical realization for healthcare leaders is that the traditional IT procurement model is ill-suited for RAG.

Organizations cannot simply buy a monolithic “RAG product” and expect it to solve their problems.

Success stories like Accolade’s show that the winning strategy is to first build a solid internal data foundation, meaning a modern data architecture with strong governance, and then layer RAG capabilities on top. This requires a “capability-building” mindset, focusing on strategic investment in internal data engineering talent and a mature data governance framework.

The success of RAG is ultimately an outcome of a mature data strategy, not a shortcut to one.

Key takeaways from RAG for healthcare analysis:

RAG as Healthcare’s AI Foundation:

RAG has evolved from a research concept to essential healthcare technology. It mitigates AI hallucination problems and enables reliable AI applications across clinical and operational settings.

Proven Value Across Healthcare:

RAG delivers measurable benefits throughout the healthcare ecosystem. It enhances clinical decision-making and accelerates pharmaceutical research. RAG improves patient outcomes and streamlines administrative processes. Market growth reflects widespread enterprise adoption.

High-Stakes Risk Management:

While transformative, RAG presents existential risks. These include algorithmic bias, privacy compliance challenges, and integration complexity. These need comprehensive, proactive strategies to prevent cascading failures across interconnected systems.

Strategic Implementation Imperative:

Healthcare leaders must move from reactive technology procurement to proactive capability building. They should prioritize governance, ethics, and safety. RAG should be deeply integrated with organizational data strategy and clinical workflows.

Long-term Digital Foundation:

RAG should anchor organizational digital transformation strategies. It serves as the architectural solution for harnessing generative AI value while managing risks. This approach requires strategic investments in data quality and governance frameworks. Phased implementation is necessary to build more efficient, equitable, and intelligent healthcare systems.

Frequently asked questions (FAQs) on RAG in healthcare industry

What is Retrieval-Augmented Generation (RAG) in AI?

Retrieval-Augmented Generation (RAG) is an artificial intelligence framework that enhances the quality and reliability of Large Language Models (LLMs). It works by first retrieving relevant, up-to-date information from an external, authoritative knowledge base. Then, it provides that information to the LLM as context to use when generating its response. This “open-book” approach grounds the AI’s output in factual evidence, significantly reducing the risk of errors or “hallucinations”.

Who invented Retrieval-Augmented Generation?

RAG was introduced in a 2020 research paper titled “Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks,” authored by a team of researchers from Meta AI (then Facebook AI Research), University College London, and New York University. Key contributors included Patrick Lewis, Douwe Kiela, and Ethan Perez.

What is the difference between RAG and fine-tuning?

RAG and fine-tuning are two different approaches for customizing an LLM. Fine-tuning adapts the model’s internal parameters by retraining it on a new dataset, which is expensive and time-consuming. It teaches the model a new skill or style. RAG does not change the model itself. Instead, it provides the model with external knowledge at the time of the query. RAG is better for incorporating fast-changing factual information, while fine-tuning is better for adapting the model’s behavior. The two techniques can also be used together.

How does RAG handle data privacy and HIPAA?

To be HIPAA-compliant, a RAG system must be designed with strict safeguards. This involves using on-premises or private cloud deployments for sensitive data. All third-party vendors must sign a Business Associate Agreement (BAA). Implementing strong encryption and access controls is essential. Adherence to the “minimum necessary” principle is also crucial: the AI should access only the data it absolutely needs for a given task.

Can RAG work with data other than text?

Yes. While early RAG systems focused on text, the future of RAG is multimodal. Advanced research aims to build systems that can retrieve and reason across different data types. These types include images (like X-rays or pathology slides), structured data (like lab results), and text (like clinical notes). This variety allows a more comprehensive analysis.

What is a vector database and why is it important for RAG?

A vector database is a specialized database designed to store and search embeddings (numerical representations of data). It is crucial for RAG because it allows for extremely fast and efficient “semantic search.” Instead of matching keywords, it locates data that is contextually similar in meaning to the user’s query. This process is essential for the “retrieval” step in the RAG process.
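The core operation a vector database performs can be shown in a few lines. The sketch below ranks documents by cosine similarity between hand-made three-dimensional vectors; real embeddings come from an embedding model and have hundreds or thousands of dimensions, and the `index` contents and `search` helper here are assumptions for illustration.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


# Hand-made toy "embeddings"; the numbers are invented, not model output.
index = {
    "contrast media allergy protocol": [0.9, 0.1, 0.0],
    "visitor parking policy": [0.0, 0.1, 0.9],
    "iodinated contrast premedication": [0.8, 0.3, 0.1],
}


def search(query_vec, k=2):
    """Return the k document titles most similar to the query vector."""
    ranked = sorted(index, key=lambda doc: cosine(query_vec, index[doc]), reverse=True)
    return ranked[:k]


print(search([1.0, 0.2, 0.0]))
```

A production vector database does the same ranking with approximate nearest-neighbor indexes so that it stays fast across millions of vectors, which a linear scan like this one would not.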

What is “human-in-the-loop” and why is it critical for RAG in healthcare?

Human-in-the-loop (HITL) is a safety principle mandating that a qualified human expert review and approve any AI system output before action is taken in a clinical context. For RAG in healthcare, this means a clinician must always be the final decision-maker. The AI’s role is to augment and support the clinician, not replace their judgment or accountability.

Who are the key technology providers in the RAG ecosystem?

The RAG ecosystem involves multiple layers of technology providers. These include:

- Major Cloud and AI Providers: Companies like Google (Vertex AI, MedLM), Amazon Web Services (Bedrock), Microsoft, and IBM provide the foundational LLMs. They also offer embedding models and cloud infrastructure.

- Data Platform Companies: Companies like Databricks offer unified data and AI platforms. These platforms help organizations build and manage RAG pipelines on their own data.

- Vector Database Providers: Companies like Pinecone specialize in the vector databases needed for the retrieval step.

- AI Startups and Frameworks: Companies like Cohere and open-source frameworks like LangChain offer tools specifically for building RAG applications.

Read more about practical adoption of AI models and tools

Learn more about AI Agents and LLM models from our published guides:

- MIT ChatGPT Brain Study: Explained + Use AI Without Losing Critical Thinking

- AI.gov: US AI for Governance Risks + EU AI Act Comparison

- Why Scaling Enterprise AI Is Hard – Infosys Co-Founder Nandan Nilekani Explains

- What Is Prompt Chaining? – Examples And Tutorials

- Vertical AI Agents Will Replace SaaS – Experts Warn On Future Of Workflow Automation

- Cost-efficient AI: How Stanford built low-cost open source rival to OpenAI’s o1

- The Cost-Effective AI: Deep Dive on DeepSeek’s Game-Changing AI Engineering Approach

- Transforming Software Design with AI Agents: 2 Key Future Insights You Must Know

- 20+ insightful quotes on AI Agents and AGI from AI experts and leaders


This blog post is written using resources of Merrative. We are a publishing talent marketplace that helps you create publications and content libraries.
