In 2026, Enterprise AI Agents will become a prime focus for the next phase of AI adoption. As of today, AI can generate hyper-realistic images and videos. It can debug code and write sonnets. All these tasks are accomplished with efficiency and accuracy never seen before.

For the enterprise, however, this magical accuracy was often fleeting.

The interaction model was transactional. The intelligence was ephemeral. The utility was bounded by the tyranny of the prompt box. Organizations found themselves with a ‘brain in a jar.’ It was a disconnected oracle. It could answer questions but could not do work.

As we pivot toward the strategic horizon of 2026, the industry stands on the precipice of a fundamental architectural shift. The novelty of ‘chatting’ with a machine is fading, replaced by the imperative of ‘working’ with one.

IBM’s 2026 predictions show the end of the single-purpose bot. These predictions are corroborated by a chorus of industry analysts and technical benchmarks. They signal the ascent of the Agentic Enterprise.

This is not merely an incremental upgrade in model size or parameter counts. It is a qualitative leap from ‘stateless’ prediction engines to ‘stateful’ autonomous agents. These agents are capable of long-term memory, multi-step reasoning, and decisive action within the digital and physical infrastructure of the corporation.

The distinction is profound. A chatbot talks; an agent acts.

A chatbot is a “read-only” interface to information; an agent is a “read-write” interface to the enterprise.

This is a significant shift, and this blog explores it from multiple angles. I have dissected the cognitive architectures, economic restructuring, and operational revolutions that will define the business landscape of 2026.

The Death of the Single-Purpose Chatbot

I think this year we move past the hype of generation to the concrete utility of orchestration. Let’s explore how the ‘Silicon Workforce’ is being built, governed, and deployed. This workforce fundamentally alters the metabolism of the modern organization.

The Limitations of the ‘Stateless’ Era

To understand the magnitude of the shift predicted for 2026, one must analyze the ‘Level 1’ AI maturity model. This model dominated the early generative AI boom. In this model, the organization treats the AI as a disconnected oracle. The interaction is purely transactional: a human submits a prompt, and the model returns a completion based on pre-trained weights. Once the session ends, the intelligence evaporates. This is called the ‘Stateless’ era.

This architecture suffers from two fatal flaws that have stalled enterprise adoption beyond pilot phases: Hallucination and Amnesia.

- Hallucination arises from a lack of grounding. The model predicts the next statistically likely word rather than retrieving a verified fact.

- Amnesia arises from a lack of state. A user might spend hours context-setting with a bot about a specific project. However, the system ‘resets’ once the context window is exceeded or the browser tab is closed.

Even as context windows expand to 1 million or 2 million tokens (as seen in models from Google and Anthropic), the fundamental limitation remains.

‘Resetting’ the intelligence after every interaction prevents the formation of the thematic continuity required for meaningful work.

A human employee does not need to be re-introduced to their job description every morning; a Level 1 AI does. IBM’s predictions argue that by 2026, organizations boasting about ‘having a chatbot’ will be viewed as technologically stagnant. The raw access to LLMs is becoming commoditized—a utility akin to electricity or cloud storage. The competitive advantage has migrated up the stack to the system architecture that surrounds the model.

The Shift from Scale to Architecture

The industry narrative has shifted.

The focus has changed from ‘Scale is all you need’ (the pursuit of ever-larger parameter counts) to ‘Architecture is all you need’ (the pursuit of reliability and agency).

This architectural turn focuses on wrapping the stochastic core of an LLM in deterministic layers of control, memory, and verification.

This evolution is driven by the realization that intelligence alone is insufficient for enterprise value.

Value is derived from the application of intelligence to workflow.

A brilliant intern who can’t access the company email or update the CRM is of limited utility. Similarly, a brilliant LLM that can’t execute API calls is merely a conversationalist. The 2026 vision is one where the AI stops being something you ask, and becomes something that does.

The Agentic Maturity Model

IBM maps this evolution through the Agentic AI Maturity Model. It predicts where organizations will land in 2026. The predictions are based on their current architectural choices.

Learn more: Agentic AI Maturity Model

| Maturity Level | Description | Capabilities | Limitations | Timeline |
| --- | --- | --- | --- | --- |
| Level 1 | Model-Centric | Static models, prompt-driven, single-turn inference. | Hallucinations, Amnesia, no operational feedback loop. | 2023-2024 |
| Level 2 | RAG-Enabled | Retrieval-Augmented Generation, access to static documents. | Limited reasoning, read-only access to data. | 2024-2025 |
| Level 3 | Task-Based Agents | Single-purpose automation (e.g., “Book a meeting”), simple tool use. | Brittle, fails outside narrow scope, no long-term memory. | 2025 |
| Level 4 | Orchestrated Agents | Multi-agent collaboration, supervisor-worker hierarchies, limited memory. | Complexity in governance, “too many cooks” problem. | 2025-2026 |
| Level 5 | Autonomous Agents | Long-term memory, self-correction, comprehensive tool use, goal-driven. | High risk, requires “AI Sovereignty” and strict guardrails. | 2026+ |

The leap from Level 3 to Level 5 is the core transformation. It shows the move from ‘Assistance’ to ‘Autonomy.’ While assistance implies a human-led process where AI provides support, autonomy implies an AI-led process where humans offer oversight. This shift requires us to completely reimagine the software stack.

We must move from interfaces designed for human clicks to those designed for agentic execution.

The Cognitive Architecture of the 2026 Enterprise AI Agent

If the chatbot was a ‘brain in a jar,’ the agent of 2026 is a nervous system connected to the hands and eyes of the enterprise.

This transformation is powered by three critical architectural pillars: Long-Term Memory, Multi-Step Reasoning, and Tool Use.

Solving the ‘Goldfish Brain’: The Tripartite Memory System

The most significant technical breakthrough enabling the agentic enterprise is the solution to the ‘Goldfish Brain’ problem. For an AI to function as a digital employee, it must remember. It needs to know not just what happened five minutes ago, but also the strategic goals of the quarter, the specific preferences of a client, and how earlier attempts to solve a problem succeeded or failed.

IBM and the broader research community have converged on a memory architecture that mimics human cognition, dividing memory into three distinct types:

1. Episodic Memory: The Narrative of Experience

Episodic memory is the autobiographical record of the agent. It stores the ‘what, where, and when’ of interactions.

- Function: It allows an agent to recall, ‘Last Tuesday, the client rejected the proposal because the pricing was too high.’

- Enterprise Value: This prevents the frustration of repetitive feedback. If a human corrects an agent’s output once, episodic memory ensures the agent does not repeat the error in future sessions. It provides the continuity required for long-term relationships, whether with customers or internal stakeholders.

2. Semantic Memory: The Knowledge Graph

Semantic memory stores generalized facts and concepts derived from experiences and data. It is the agent’s ‘textbook knowledge.’

- Function: While episodic memory stores the specific rejection of a proposal, semantic memory distills the general rule: “This client is price-sensitive regarding SaaS subscriptions.”

- Enterprise Value: This layer is often modeled using structured knowledge graphs or vector databases. It allows the agent to reason across different domains. For a legal agent, semantic memory holds the understanding that “Force Majeure clauses differ in Civil Law vs. Common Law jurisdictions,” knowledge that applies generally regardless of the specific contract being reviewed.

3. Procedural Memory: The “Muscle Memory” of Workflow

Perhaps the most transformative for automation is procedural memory—the knowledge of how to do things.

- Function: This is the storage of skills and action sequences. It is not knowing that SAP is an ERP; it is knowing the exact sequence of API calls, authentication handshakes, and field entries needed to create a purchase order in SAP.

- Enterprise Value: Procedural memory allows agents to execute complex, multi-step workflows automatically without deliberating on every single click. Over time, agents ‘learn’ to optimize these paths. They find the most efficient route through a software ecosystem to achieve a goal.
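To make the three memory types concrete, here is a minimal sketch in Python. The `AgentMemory` class, its method names, and the sample entries are hypothetical illustrations, not IBM’s design; a production system would likely back each store with a database, vector index, or knowledge graph rather than in-process lists and dictionaries.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class AgentMemory:
    """Hypothetical container for the three memory types described above."""
    episodic: list[tuple[date, str]] = field(default_factory=list)   # what happened, and when
    semantic: dict[str, str] = field(default_factory=dict)           # generalized facts
    procedural: dict[str, list[str]] = field(default_factory=dict)   # named action sequences

    def record_event(self, when: date, what: str) -> None:
        self.episodic.append((when, what))

    def learn_fact(self, subject: str, fact: str) -> None:
        self.semantic[subject] = fact

    def learn_procedure(self, name: str, steps: list[str]) -> None:
        self.procedural[name] = list(steps)

    def recall_events(self, keyword: str) -> list[str]:
        """Return past events that mention the keyword (case-insensitive)."""
        return [what for _, what in self.episodic if keyword.lower() in what.lower()]


memory = AgentMemory()
memory.record_event(date(2026, 1, 6), "Client rejected the proposal: pricing too high")
memory.learn_fact("client", "Price-sensitive regarding SaaS subscriptions")
memory.learn_procedure(
    "create_purchase_order",
    ["authenticate", "fill_order_fields", "submit_order"],  # illustrative step names
)

print(memory.recall_events("pricing"))
```

The point of the separation is that each store answers a different question: `recall_events` answers “what happened?”, `semantic` answers “what is generally true?”, and `procedural` answers “how do I do this?”.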

In short, episodic memory records what happened, semantic memory captures what is generally true, and procedural memory encodes how to get things done.

The Reasoning Engine: From Probability to Planning

The second pillar is the shift from System 1 to System 2 thinking.

- System 1 (Chatbots): Relies on fast, intuitive pattern matching. It predicts the next token based on surface-level correlations. It is fast but prone to logical fallacies and hallucinations.

- System 2 (Agents): Relies on slow, deliberate reasoning. When an agent receives a request, it does not answer immediately. It engages in a ReAct Loop (Reason + Act).

The ReAct Loop Mechanism:

- Thought: The agent analyzes the user’s request and breaks it down into sub-goals. “The user wants a comparison of Q3 earnings for Competitor A and Competitor B.”

- Plan: The agent formulates a plan. “Step 1: Search for Competitor A’s Q3 report. Step 2: Search for Competitor B’s Q3 report. Step 3: Extract ‘Net Income’ and ‘Revenue’. Step 4: Calculate the delta. Step 5: Format as a table.”

- Action: The agent executes Step 1 using a tool (e.g., a web search or database query).

- Observation: The agent reads the result. If the data is missing, it refines the plan (e.g., “Search for the investor presentation instead”).

- Synthesis: Only after all steps are complete does the agent show the final answer to the user.

This Multi-Step Reasoning allows agents to handle ambiguity and complexity that would break a standard chatbot. It enables “Bounded Autonomy.” Here, the agent is free to navigate the path to the solution as long as it stays within the guardrails set by the enterprise.
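The loop described above can be sketched in a few lines of Python. This is a simplified illustration, not a real agent: there is no LLM, the plan is hard-coded, and `FAKE_DOCUMENTS`, `search`, and the financial figures are made-up stand-ins for a real search tool and real data. What it does show is the act, observe, and refine cycle, including the fallback to the investor presentation when the Q3 report is missing.

```python
from typing import Optional

# Hypothetical stand-in for a search tool; the figures are made up for illustration.
FAKE_DOCUMENTS = {
    "Competitor A Q3 report": {"revenue": 120.0, "net_income": 18.0},
    "Competitor B investor presentation": {"revenue": 95.0, "net_income": 11.0},
}


def search(query: str) -> Optional[dict]:
    """Action: look up a document; returns None when nothing is found."""
    return FAKE_DOCUMENTS.get(query)


def find_financials(company: str, trace: list) -> dict:
    """Work through the plan, falling back when an observation comes back empty."""
    plan = [f"{company} Q3 report", f"{company} investor presentation"]
    for query in plan:
        trace.append(f"Action: search({query!r})")
        result = search(query)
        trace.append("Observation: found" if result is not None else "Observation: missing")
        if result is not None:
            return result
    raise LookupError(f"No financial data found for {company}")


def compare_q3(first: str, second: str) -> tuple:
    """Run the loop for both companies, then synthesize the deltas."""
    trace = [f"Thought: compare Q3 earnings for {first} and {second}"]
    a = find_financials(first, trace)
    b = find_financials(second, trace)
    delta = {key: a[key] - b[key] for key in ("revenue", "net_income")}
    return delta, trace


delta, trace = compare_q3("Competitor A", "Competitor B")
for line in trace:
    print(line)
print(delta)
```

In a real agent, the model itself would generate the thought and the plan, and the trace would double as the audit record of how the answer was reached.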

Tool Use: The Hands of the Machine

The final component is the ability to interact with the world. An agent is a system that wraps an LLM with Tools.

The ‘Read-Write’ Shift:

Generative AI was “Read-Only”—it could read data and generate text. Agentic AI is “Read-Write”—it can read data, reason about it, and then write changes back to the system of record. It can send emails, update CRM records, deploy code, and transfer funds.
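One way to picture the read-only versus read-write distinction is a tool registry that flags which tools modify a system of record. The sketch below is hypothetical throughout: `Tool`, `REGISTRY`, `call_tool`, and the in-memory `CRM` dictionary are illustrative names, not part of any vendor’s API.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical in-memory stand-in for a CRM system of record.
CRM = {"acme": {"status": "prospect"}}


@dataclass(frozen=True)
class Tool:
    name: str
    func: Callable[..., object]
    writes: bool  # True if the tool changes the system of record


def get_status(account: str) -> str:
    return CRM[account]["status"]


def set_status(account: str, status: str) -> str:
    CRM[account]["status"] = status
    return status


REGISTRY = {
    tool.name: tool
    for tool in (
        Tool("get_status", get_status, writes=False),
        Tool("set_status", set_status, writes=True),
    )
}


def call_tool(name: str, *args, allow_writes: bool = False):
    """Dispatch a tool call, refusing write tools unless explicitly permitted."""
    tool = REGISTRY[name]
    if tool.writes and not allow_writes:
        raise PermissionError(f"{name} modifies data; write access not granted")
    return tool.func(*args)


print(call_tool("get_status", "acme"))                          # read: always allowed
print(call_tool("set_status", "acme", "customer", allow_writes=True))  # write: opt-in
```

A “read-only” assistant is effectively one that never gets `allow_writes=True`; an agent is one that does, which is why the governance questions later in this post matter.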

Agent-Friendly Interfaces:

We are seeing a shift in software design from ‘Human-First’ to ‘Agent-Readable’. Applications are exposing their functionality via APIs specifically designed for AI agents to consume.

Retailers, for example, are providing access to their product catalogs and inventory data via APIs. This allows buying agents to surface, compare, and buy products without human intervention.

Vibecoding and Personal Software:

Advanced agents are capable of ‘Vibecoding’ — writing ephemeral, single-purpose software on the fly to solve immediate problems. A user might say, “I need a way to track the arrival times of guests for the event tonight.”

The agent doesn’t look for an existing app. It writes a simple, temporary web interface, deploys it, and collects the data. Then, it deletes the app when the event is over.

This ability bridges the gap between rigid enterprise software and the fluid, ad-hoc needs of daily business operations.

IBM’s Strategic Vision — Orchestrating the Future

IBM’s positioning for 2026 is centered not on building the largest model, but on building the most robust Orchestration Layer. Their thesis is that the enterprise environment is too complex for a single “God Model” to manage. Instead, the future belongs to Multi-Agent Systems governed by a sophisticated control plane.

Watsonx Orchestrate: The Operating System for Agents

At the heart of IBM’s strategy is watsonx Orchestrate.

This platform is designed to be the ‘Operating System’ for the agentic workforce. It addresses the ‘sprawl’ problem — as enterprises deploy dozens or hundreds of specialized agents, they risk creating a chaotic, unmanageable ecosystem. Watsonx Orchestrate provides the governance, integration, and logic to make these agents work in concert.

Key Capabilities:

Agentic Workflows:

Developers can build standardized, reusable flows that sequence multiple agents. This replaces brittle scripts with adaptive logic. If the “Invoice Processing Agent” fails, the Orchestrator can reroute the task to a human or retry with different parameters.
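The retry-then-reroute behavior can be illustrated with a small sketch. Nothing here reflects watsonx Orchestrate’s actual API; `run_with_fallback`, `invoice_agent`, `human_queue`, and the `ocr` parameter are hypothetical names chosen to show the control flow.

```python
from typing import Callable


def run_with_fallback(
    task: dict,
    agent: Callable[[dict], str],
    retry_params: list,
    escalate: Callable[[dict], str],
) -> str:
    """Try the agent, retry with each alternative parameter set, then hand off to a human."""
    attempts = [task] + [{**task, **params} for params in retry_params]
    for attempt in attempts:
        try:
            return agent(attempt)
        except ValueError:
            continue  # this attempt failed; move on to the next parameter set
    return escalate(task)


def invoice_agent(task: dict) -> str:
    """Hypothetical agent that cannot read scanned invoices in 'fast' OCR mode."""
    if task.get("scanned") and task.get("ocr") == "fast":
        raise ValueError("could not extract invoice fields")
    return f"processed invoice {task['id']}"


def human_queue(task: dict) -> str:
    return f"invoice {task['id']} routed to human review"


task = {"id": "INV-1", "scanned": True, "ocr": "fast"}
print(run_with_fallback(task, invoice_agent, [{"ocr": "accurate"}], human_queue))
print(run_with_fallback(task, invoice_agent, [], human_queue))
```

The first call succeeds on the retry with different parameters; the second has no retries configured, so the task is rerouted to a human.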

The Supervisor Agent:

This concept involves a high-level agent that acts as a manager. It receives a complex user request, decomposes it, and assigns sub-tasks to specialized ‘worker’ agents.

For example, a ‘Supply Chain Supervisor’ might delegate legal checks to a ‘Contract Agent,’ financial checks to a ‘Risk Agent,’ and logistics checks to a ‘Shipping Agent.’ Their findings are then synthesized into a recommendation for the human user.
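A supervisor-worker hierarchy of this kind might look like the following sketch. The worker functions, the order fields, and the credit score threshold of 600 are all hypothetical; real workers would be LLM-backed agents with their own tools rather than one-line checks.

```python
# Hypothetical worker agents; each returns "ok" or a short description of the issue.
def contract_agent(order: dict) -> str:
    return "ok" if order["contract_signed"] else "contract not signed"


def risk_agent(order: dict) -> str:
    # The 600 threshold is an arbitrary illustrative value.
    return "ok" if order["supplier_credit_score"] >= 600 else "supplier credit risk"


def shipping_agent(order: dict) -> str:
    return "ok" if order["route_open"] else "route unavailable"


WORKERS = {
    "legal": contract_agent,
    "financial": risk_agent,
    "logistics": shipping_agent,
}


def supply_chain_supervisor(order: dict) -> dict:
    """Delegate each check to a specialized worker, then synthesize a recommendation."""
    findings = {check: worker(order) for check, worker in WORKERS.items()}
    has_issue = any(finding != "ok" for finding in findings.values())
    return {
        "recommendation": "needs human review" if has_issue else "approve",
        "findings": findings,
    }


order = {"contract_signed": True, "supplier_credit_score": 720, "route_open": False}
print(supply_chain_supervisor(order))
```

The supervisor itself contains no domain logic; it only decomposes, delegates, and combines, which is what keeps each worker small and replaceable.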

Pre-Built Agent Catalog:

IBM has curated a library of over 100 domain-specific agents (HR, Procurement, Sales) and 400+ tools. This allows organizations to assemble workflows from ‘Lego blocks’ rather than building every agent from scratch.

The ‘Open’ Ecosystem Strategy

IBM’s approach is notably ‘Open.’

They recognize that clients will likely use models from multiple providers (OpenAI, Anthropic, Meta, Mistral). Watsonx Orchestrate is designed to be model-agnostic. It allows an enterprise to use Llama 3 for low-latency on-premise tasks and GPT-4 for complex reasoning tasks, all managed under the same governance roof.

This strategy aligns with the ‘AI Sovereignty’ trend. Enterprises demand control over their infrastructure. They refuse to be locked into a single model provider.

The Competitor Landscape for AI Agentic Enterprise

IBM is not alone in this vision. The industry is witnessing a fierce battle for the ‘Control Plane’ of the AI agentic enterprise.

Writer (Palmyra-X5):

Writer has positioned itself as a leader in ‘Full-Stack Generative AI’ for the enterprise. Their Palmyra-X5 model features a 1-million-token context window and is purpose-built for agentic reasoning. Writer emphasizes ‘graph-based RAG’ and high-accuracy tool use, targeting specific verticals like healthcare and finance where hallucination is unacceptable.

HPE (Hewlett Packard Enterprise):

HPE is focusing on the intersection of AI and hybrid cloud. Their ‘Alfred agent’ demonstrates the power of agents in IT operations, autonomously managing server provisioning and optimization. Their strategy leverages their strength in on-premise infrastructure, appealing to security-conscious clients.

Learn more: The agentic reality check: Preparing for a silicon-based workforce by Deloitte Insights

AWS and Salesforce:

These giants are integrating agentic capabilities directly into their massive ecosystems. Salesforce’s ‘Agentforce’ aims to put an agent in every CRM workflow. AWS Bedrock provides the infrastructure for building custom agents.

IBM’s differentiator in this crowded field is its focus on Governance and Hybrid Cloud. IBM is focusing on “highly regulated” industries such as finance, healthcare, and government. It has a historical stronghold in these sectors. IBM believes that ‘Trust’ will be the winning argument for 2026 rather than just ‘Capability’.

Case Studies in Transformation — The Agentic Enterprise AI in Action

The theory of agentic AI is compelling, but the reality is even more so. Leading global organizations are already deploying these architectures to fundamentally redesign their operations, providing a glimpse of the 2026 standard.

Toyota: From Green Screens to Agentic Logistics

Toyota Motor North America (TMNA) provides a quintessential example of the shift from legacy automation to agentic intelligence. The automotive giant faced a common challenge for mature enterprises: a fragmented data landscape and a reliance on ‘green screen’ legacy systems for vehicle delivery tracking.

The Problem:

Identifying delays in the vehicle delivery lifecycle was a manual, reactive process. Logistics planners had to switch between multiple systems. They correlated data on spreadsheets. They used human intuition to guess where bottlenecks might occur. By the time a delay was identified, it was often too late to fix it without significant cost.

The Agentic Solution:

Partnering with AWS and Deloitte, Toyota deployed an agent-driven experience for the Vehicle Delivery ETA workflow. This system does not just ‘report’ on the status of vehicles; it actively manages them.

- Autonomous Monitoring: The agent continuously scans the logistics network. It tracks the lifecycle of every vehicle produced for the North American market.

- Proactive Reasoning: Unlike a rules-based alert, the agent reasons about the context. It considers weather patterns, traffic conditions, and historical vendor performance to predict delays before they happen.

- Decisive Action: When a risk is identified, the agent acts. It autonomously drafts and sends emails to logistics providers, requesting specific interventions (e.g., ‘Place vehicle VIN#123 in Bin A for expedited truck loading’).

- Communication: It simultaneously updates the dealership, explaining the delay and the remediation steps being taken.

- The ‘Overnight’ Effect: Crucially, the agent performs these tasks 24/7. As Jason Ballard, VP of Digital Innovations at Toyota, notes, “The agent can do all these things before the team member even comes in in the morning”.

The Outcome:

The role of the human logistics planner has shifted from “data chaser” to ‘exception handler.’ They no longer spend their days searching for problems. They start their day with a report of problems that have already been solved or routed for their expert review. This improves both employee satisfaction (eliminating drudgery) and customer satisfaction (more reliable ETAs).

Learn more: AWS, Deloitte, and Toyota

S&P Global and Dun & Bradstreet: The Supply Chain Nervous System

In the financial and information services sector, the focus is on managing risk through high-velocity data synthesis.

S&P Global and Dun & Bradstreet have integrated IBM’s watsonx Orchestrate to create agents. These agents act as the nervous system for supply chain and procurement.

The ‘Ask Procurement’ Agent:

Developed by Dun & Bradstreet, this agent dramatically reduces the friction of supplier vetting. In a traditional workflow, evaluating a new supplier involves days of manual research. This includes checking financial reports, verifying ESG compliance, and searching for adverse media coverage.

The Agentic Workflow:

- Retrieval: A human procurement officer asks the agent to ‘Evaluate Supplier X.’ The agent instantly retrieves proprietary data from the D&B Data Cloud, accessing over 590 million records.

- Synthesis: It analyzes financial stability, fraud potential, and ESG scores simultaneously.

- Risk Assessment: It synthesizes a 360-degree risk assessment, highlighting red flags that a human might miss in a mountain of data.

- Integration: The agent integrates this data directly into the client’s ERP, automating the onboarding process.

The Impact:

This system reduces procurement task time by approximately 20%.

But the deeper value is Continuous Monitoring.

The agent doesn’t just check the supplier once; it maintains a persistent ‘watch.’ If the supplier’s financial health degrades or a new fraud alert is issued months later, the agent proactively alerts the human officer. This transforms risk management from a “snapshot” process to a “streaming” process.

Learn more: S&P Global and IBM Deploy Agentic AI to Improve Enterprise Operations

IBM AskHR: The Internal Digital Employee

IBM’s own internal use of agentic AI, via the AskHR system, demonstrates the efficiency gains that are possible when agents are deployed across a massive workforce.

Powered by watsonx Orchestrate, this agent handles over 10 million annual interactions.

Beyond the FAQ:

Most HR chatbots are simple FAQ machines (“Where is the holiday policy?”). AskHR is a transactional agent integrated with the enterprise’s core systems: Workday, SAP, and Concur.

- Capabilities: It can do over 80 distinct tasks. It can generate job verification letters, process employee transfers, update organizational structures, and handle compensation changes.

- Intelligent Routing: It uses intelligent prompt classification to distinguish between a simple query (System 1) and a complex operation requiring multi-step execution (System 2).

- Manager Support: It has specialized workflows that let managers execute administrative changes via chat. Previously, this required navigating complex ERP menus.

The Results:

- 94% Containment: The vast majority of inquiries are resolved without human intervention.

- Cost Reduction: A 40% reduction in HR operational costs over four years.

- Adoption: A 99% adoption rate among managers, proving that when agents actually work, users embrace them enthusiastically.

This internal deployment acts as a blueprint for the ‘digital employee.’ It is a system that works alongside humans. It handles the bureaucratic friction of the enterprise. Meanwhile, humans focus on high-touch personnel management and strategy.

Check out the case study by IBM: Transforming HR support with agentic AI

The Economics of the Silicon AI Workforce

The shift to agentic AI is driven by a compelling economic logic that extends beyond simple labor arbitrage. It signifies a fundamental restructuring of the cost of work and a redefinition of productivity.

The Cost-Per-Task Arbitrage

The economic disparity between human and agentic labor for routine digital tasks is becoming too large to ignore. As we approach 2026, the cost per interaction for an AI agent is projected to be $0.25 to $0.50, compared to $3.00 to $6.00 for a human agent.

Table: The Economic Arbitrage of Agentic AI (Projected 2026)

| Metric | Human Agent | AI Agent (2026) | Impact |
| --- | --- | --- | --- |
| Cost per Interaction | $4.00 – $8.00 | < $0.50 | ~90% Savings |
| Availability | 8-10 hours/day | 24/7/365 | 3x Capacity |
| Response Time | Minutes/Hours | Instant/Milliseconds | ~99% Reduction |
| Training Time | Weeks/Months | Instant (once deployed) | Zero Marginal Cost |
| Scalability | Linear (Hire more people) | Exponential (Spin up instances) | Infinite Elasticity |

At $6.00 per human interaction and $0.50 per AI interaction, a business handling 50,000 monthly interactions would spend roughly $300,000 (human) versus $25,000 (AI) each month.
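The arithmetic behind these totals is easy to check. The per-interaction rates below are an assumption: $6.00 and $0.50 are the upper ends of the ranges quoted earlier in this section, and they are the values that reproduce the $300,000 and $25,000 figures.

```python
MONTHLY_INTERACTIONS = 50_000
HUMAN_COST_PER_INTERACTION = 6.00  # assumed: upper end of the $3.00 to $6.00 range
AI_COST_PER_INTERACTION = 0.50     # assumed: upper end of the $0.25 to $0.50 range

human_total = MONTHLY_INTERACTIONS * HUMAN_COST_PER_INTERACTION
ai_total = MONTHLY_INTERACTIONS * AI_COST_PER_INTERACTION
savings_pct = (1 - ai_total / human_total) * 100

print(f"Human: ${human_total:,.0f} per month")
print(f"AI:    ${ai_total:,.0f} per month")
print(f"Savings: {savings_pct:.1f}%")
```

Under these assumptions the savings come out at just under 92%, in line with the roughly 90% figure in the table.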

This significant reduction in the cost of cognitive labor enables enterprises to deploy intelligence in new areas. These areas were formerly deemed too expensive for human attention.

- Hyper-Personalization: Agents can draft a personalized, researched email for every single customer, not just the top 1% of accounts.

- Micro-Optimization: Agents can watch every single SKU in a supply chain for efficiency gains, a task that would otherwise need an army of human analysts.

The “J-Curve” of AI ROI

The return on investment (ROI) for agentic AI follows a ‘J-Curve.’

The Dip:

Initially, organizations may see a productivity dip or flatline. This occurs as they invest in the ‘Agent Factories,’ governance structures, and data cleaning needed to build these systems. This is the ‘trough of disillusionment’ where the friction of integration is high.

The Ascent:

Once the ‘Silicon Workforce’ is operational, the ROI scales non-linearly. Human teams become harder to manage as they grow, a pattern of diminishing returns often associated with Brooks’ Law. Agent fleets, by contrast, gain from network effects. An agent monitoring a data pipeline can detect inefficiencies and self-correct, creating value that compounds over time without extra cost.

The Productivity Multiplier: Superagency

Surveys show that treating AI as a ‘collaborative partner’ rather than a tool leads to 88% higher productivity. This phenomenon is known as Superagency.

A single human orchestrator, armed with a fleet of specialized agents, can achieve the output of a 10-person team.

For example, in software development, agents like Writer’s Palmyra-X5 act as ‘junior developers.’ They can read a codebase of 1 million tokens, understand the architectural context, and suggest refactors or write unit tests. The senior human developer shifts from writing boilerplate code to reviewing and guiding the architecture, effectively multiplying their output.

The ‘Flattening’ of the Hierarchy

The rise of the agentic workforce brings profound disruption to traditional organizational structures, particularly middle management.

Middle management has historically functioned as the information router of the enterprise. It gathered data from the bottom. Then, it synthesized the data and reported it to the top.

In 2026, AI agents assume this role. Agents offer real-time, unbiased, and granular reporting via dashboards, rendering the manual “status update” meeting obsolete.

This leads to a flattening of the workforce hierarchy.

Entry-level employees, empowered by agentic tools, can execute strategic directives with a level of competence once reserved for senior staff.

However, this creates a risk identified by Forrester: the ‘hollowing out’ of the career ladder. If agents do the ‘grunt work,’ how do junior employees learn the ropes to become senior leaders?

Overcoming Governance, Reliability, and the Trust Barrier

As agents move from “chat” (low risk) to “action” (high risk), the primary constraint on adoption shifts from capability to trust.

An agent that can autonomously wire funds, delete database records, or communicate with customers is a significant liability if not properly governed.

Autonomy without Verification is Liability

The industry is moving toward Bounded Autonomy, where agents function within strict, pre-defined limits.

Hard Guardrails:

Agents are programmed with “hard” stops. For example, a procurement agent may be authorized to purchase supplies up to $5,000 autonomously. However, any purchase request above $5,000 triggers a ‘human-in-the-loop’ escalation. This ensures that high-stakes decisions always have human oversight.
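A hard guardrail of this kind is deliberately simple code that sits outside the model. The sketch below uses the $5,000 limit from the example; the function name `route_purchase` and the returned labels are hypothetical.

```python
from decimal import Decimal

# Limit taken from the example above; in practice this would come from policy config.
AUTONOMOUS_LIMIT = Decimal("5000")


def route_purchase(amount: Decimal) -> str:
    """Approve small purchases automatically; escalate anything above the limit."""
    if amount <= 0:
        raise ValueError("purchase amount must be positive")
    if amount <= AUTONOMOUS_LIMIT:
        return "auto_approve"
    return "escalate_to_human"


print(route_purchase(Decimal("1200.00")))
print(route_purchase(Decimal("5000.01")))
```

Using `Decimal` rather than floats avoids rounding surprises at the boundary, and keeping the check deterministic means the limit holds no matter what the model proposes.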

Observability:

Unlike opaque black-box models of the past, 2026 agents need to “show their work.”

Platforms like watsonx.governance provide detailed traceability. They record exactly why an agent made a decision. They also note the data points used and tools executed. This ‘audit trail’ is essential for compliance and debugging.
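As a rough illustration of what such an audit trail might capture, here is a minimal sketch. It does not use or imitate the watsonx.governance API; `DecisionRecord`, `AuditTrail`, and the field and tool names are hypothetical.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    """One auditable decision: what was decided, why, and on what basis."""
    agent: str
    decision: str
    reason: str
    data_points: dict
    tools_used: list
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


class AuditTrail:
    def __init__(self) -> None:
        self._records: list = []

    def log(self, record: DecisionRecord) -> None:
        self._records.append(record)

    def export(self) -> str:
        """Serialize the trail as JSON lines, one decision per line."""
        return "\n".join(json.dumps(asdict(r), sort_keys=True) for r in self._records)


trail = AuditTrail()
trail.log(DecisionRecord(
    agent="procurement-agent",
    decision="escalate_to_human",
    reason="purchase amount exceeds autonomous limit",
    data_points={"amount": 7200, "limit": 5000},
    tools_used=["erp.lookup_vendor"],  # illustrative tool name
))
print(trail.export())
```

Each record answers the three questions an auditor will ask: what did the agent decide, which data did it rely on, and which tools did it run.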

The ‘Death by AI’ Legal Risk

Gartner predicts that by the end of 2026, “death by AI” legal claims could exceed 2,000. This is due to insufficient guardrails in physical or high-stakes AI systems.

This grim prediction underscores the necessity of Explainable AI (XAI).

The Black Box Problem:

In regulated industries like healthcare (diagnosis) and finance (loan approval), it is not enough for an agent to be right. It must be provably right.

The “System 2” reasoning capabilities of agents are crucial here. They allow the agent to articulate its ‘Chain of Thought’ for audit purposes.

If an agent denies a loan, it must be capable of citing the specific policy. The agent should also mention the data points that led to that decision. This process ensures compliance with Fair Lending laws.

AI Sovereignty: Controlling the Brain

A major trend for 2026 is AI Sovereignty. Organizations demand to own and control their AI brains rather than renting them from a centralized provider.

Ninety-three percent of executives agree that controlling the AI infrastructure is essential for resilience.

Small Language Models (SLMs):

We are seeing a shift toward smaller, domain-specific models that run on-premise or in private clouds. These small language models are fine-tuned on the enterprise’s own data. This ensures that sensitive IP never leaves the corporate perimeter.


Hybrid Architectures:

The standard architecture of 2026 is hybrid.

An enterprise might use a massive public model, like GPT-5 or Claude 4, for general reasoning and creative tasks. However, it may switch to a sovereign, private agent for handling sensitive customer PII (Personally Identifiable Information) or trade secrets.
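A hybrid setup needs a routing step that decides which model sees a given request. The sketch below is a deliberately naive illustration: the two regular expressions catch only email addresses and US SSN-style numbers, and the labels `private` and `public` are hypothetical placeholders for whatever models an enterprise actually runs. Real PII detection would need far broader coverage.

```python
import re

# Naive illustrative patterns; real PII detection needs much broader coverage.
PII_PATTERNS = [
    re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),  # email address
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),      # US SSN-style number
]


def choose_model(prompt: str) -> str:
    """Route prompts containing apparent PII to the private model."""
    if any(pattern.search(prompt) for pattern in PII_PATTERNS):
        return "private"
    return "public"


print(choose_model("Draft a tagline for our spring sale"))
print(choose_model("Email jane.doe@example.com about her refund"))
```

The design choice worth noting is that routing happens before any model is called, so sensitive text never reaches the public endpoint in the first place.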

The Enterprise AI Agent Imperative

IBM’s insight that we are ‘moving past single-purpose’ is not just a product announcement. It is a forecast of a fundamental industrial evolution. The single-task bot was a novelty; the multi-step, memory-enabled agent is a necessity.

For the modern enterprise, the mandate is clear: Stop building chatbots.

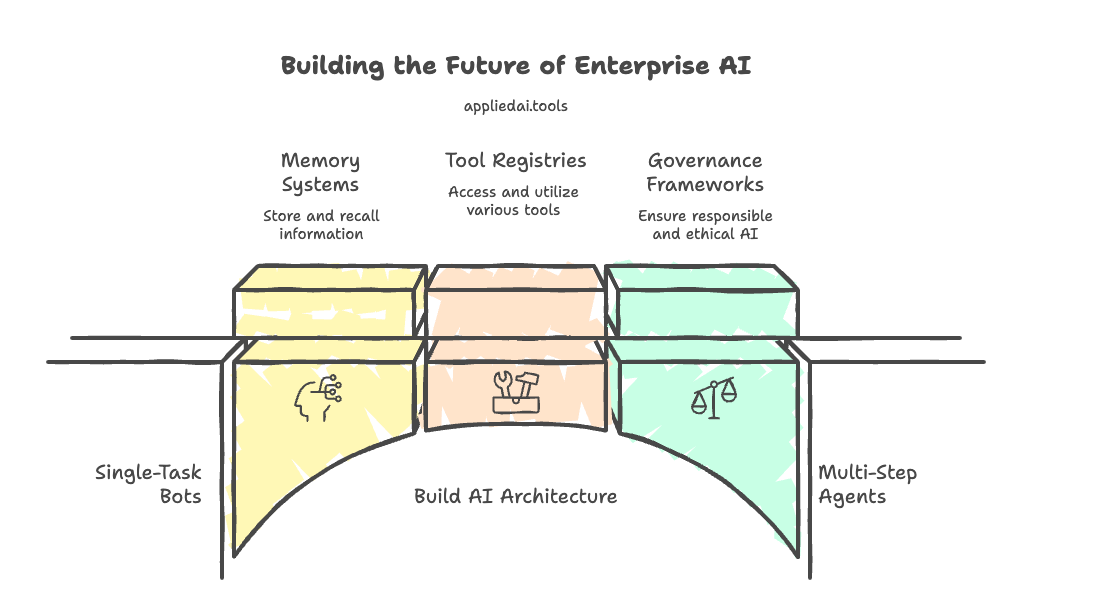

Start building the architecture—the memory systems, the tool registries, and the governance frameworks—that will support the silicon workforce of 2026.

Those who succeed will not just automate their business; they will accelerate it to the speed of thought. Those who fail will find themselves managing a workforce of amnesiacs in an era of total recall.

Key Terminology and Learn More on AI Agents

Key Definitions:

- Agentic AI: AI systems capable of autonomous goal pursuit, planning, and tool use, distinct from passive Q&A models.

- Bounded Autonomy: A governance framework where agents have freedom to act within strictly defined operational limits/guardrails.

- Chain of Thought (CoT): A prompting/reasoning technique where the AI details its logical steps before arriving at a conclusion.

- Episodic Memory: Storage of specific past interactions/events to provide continuity.

- Procedural Memory: Storage of “how-to” knowledge for executing workflows and using tools.

- System 2 Reasoning: Slow, deliberate, logical processing (planning) vs. System 1 (fast, intuitive prediction).

- Vibecoding: The generation of ephemeral, personalized software applications by AI to solve immediate, niche user needs.

- Watsonx Orchestrate: IBM’s platform for managing, sequencing, and deploying multi-agent workflows.



This blog post is written using resources of Merrative. We are a publishing talent marketplace that helps you create publications and content libraries.
